AdaBoost for Regression - Example

Introduction

AdaBoost is an ensemble model that sequentially builds new models based on the errors of the previous model to improve the predictions. The most common choice is to use Decision Trees as base models. Most worked examples cover classification tasks, but AdaBoost can also be used for regression problems, which is the focus of this post. This article covers the detailed calculations of a simplified example. For a general explanation of the algorithm, please refer to AdaBoost - Explained.

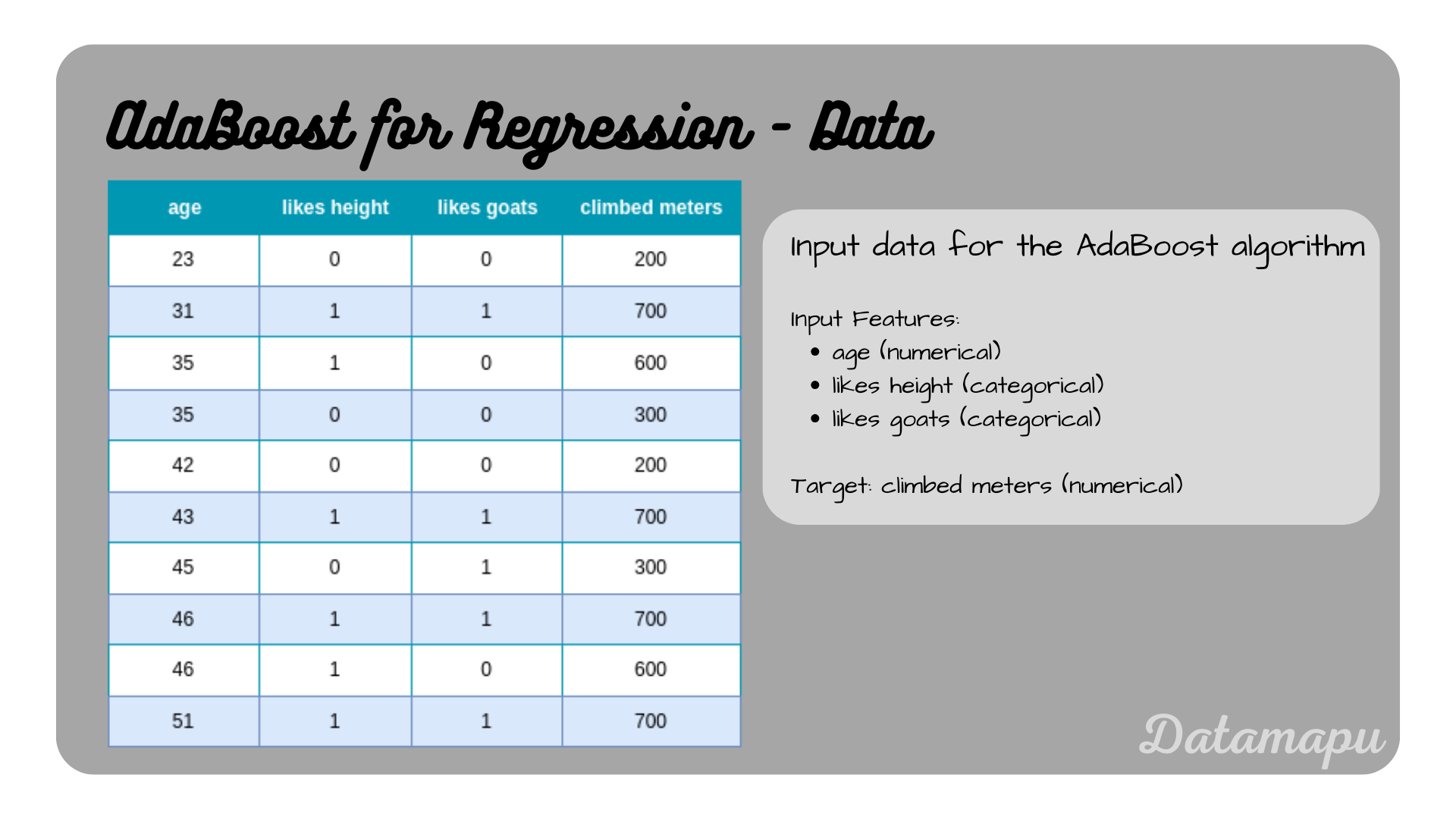

Data

We use a very simplified example dataset to make developing the model by hand easier. The dataset contains 10 samples and includes the features ‘age’, ‘likes height’, and ‘likes goats’. The target variable is ‘climbed meters’. That is, we want to estimate how many meters a person has climbed depending on their age and whether they like height and goats. For comparison purposes, we used the same dataset in the article Decision Trees for Regression - Example.

The dataset used in this example.


Build the Model

We build an AdaBoost model from scratch using the above dataset. We use the default values from sklearn, that is, we use Decision Trees with a maximum depth of three as underlying models. In this post, however, we focus on AdaBoost and not on the development of the Decision Trees. To understand the details of how to develop a Decision Tree for a regression task, please refer to the separate articles Decision Trees - Explained or Decision Trees for Regression - Example.

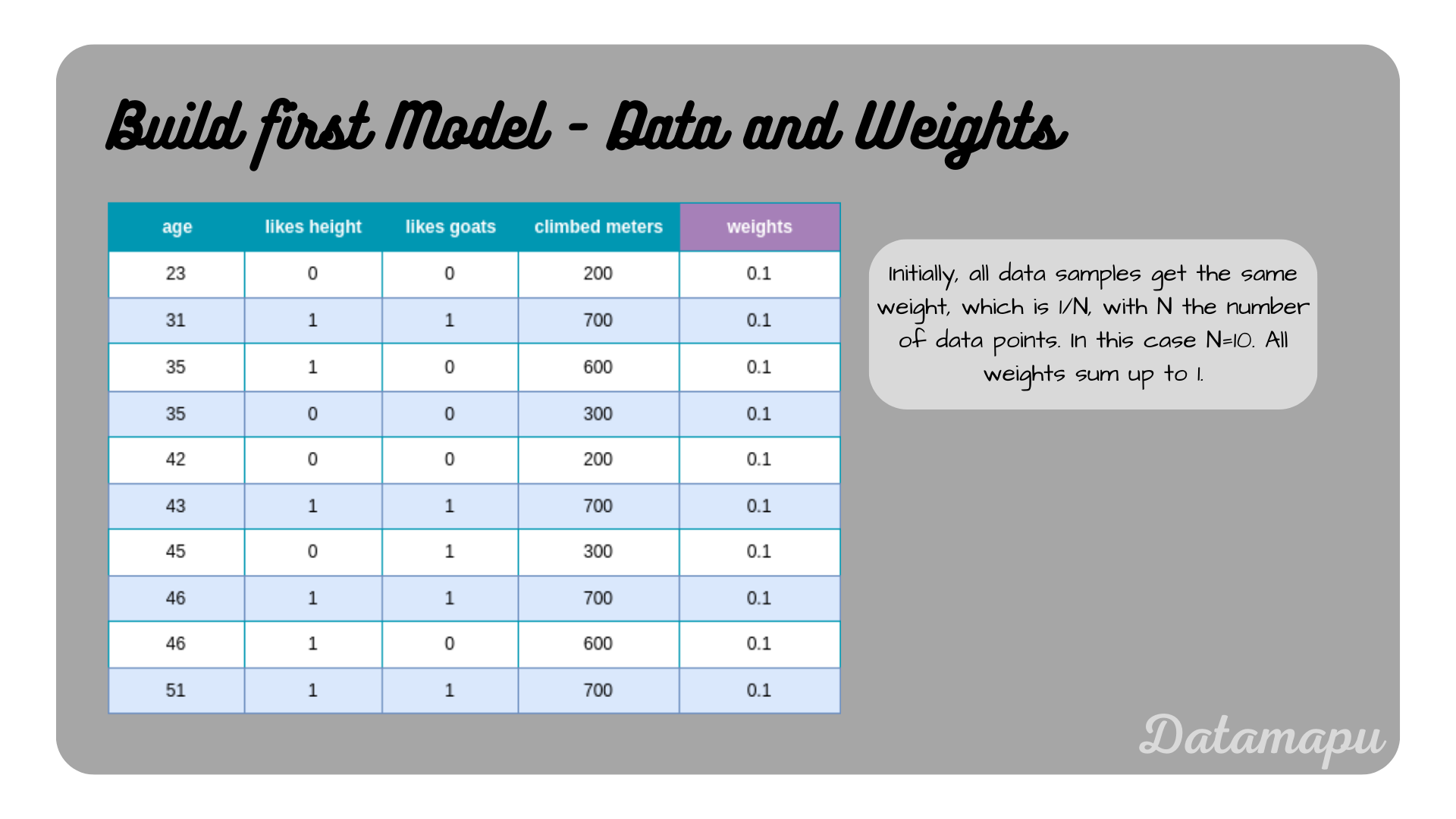

We start by assigning a weight to each sample. Initially, all weights are equal to 1/N = 0.1, where N = 10 is the number of samples.

The dataset with weights assigned to each sample.


We now fit a Decision Tree with maximum depth of three to this dataset.

The first Decision Tree of the AdaBoost model.


Now, we determine the total error, which we define as the number of wrongly predicted samples divided by the total number of samples. Following the decision paths of the tree, we can find that the samples age

Note that different implementations of the AdaBoost algorithm for regression exist. Usually, a prediction does not need to match the true value exactly; instead, a margin is given, and the prediction counts as an error if it falls outside this margin [1]. For the sake of simplicity, we keep the definition analogous to a classification problem. The main idea of calculating the influence of each tree remains the same, but the exact way the error is calculated may differ between implementations.

With the influence

The sign used in the exponent depends on whether the specific sample was correctly predicted or not. For correctly predicted samples, we get

and for wrongly predicted samples

These weights need to be normalized, which is done by dividing by their sum.
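The steps described above (total error, influence, weight update, normalization) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the influence formula `0.5 * log((1 - total_error) / total_error)` is the classic AdaBoost form and is assumed here because the equation is not shown in this section, and the `is_correct` mask is a hypothetical outcome, not the result of the first tree in this article.

```python
import math

def update_weights(weights, is_correct):
    """One simplified AdaBoost weight update.

    weights    : current sample weights (assumed to sum to 1)
    is_correct : booleans, True where the tree predicted the sample correctly
    """
    n = len(weights)
    # Total error as defined in the text: share of wrongly predicted samples.
    total_error = sum(not c for c in is_correct) / n
    # Influence of the tree (assumed classic AdaBoost formula).
    # Note: this breaks for total_error equal to 0 or 1.
    alpha = 0.5 * math.log((1 - total_error) / total_error)
    # Negative exponent for correct samples, positive exponent for wrong ones.
    raw = [w * math.exp(-alpha if c else alpha) for w, c in zip(weights, is_correct)]
    # Normalize by dividing by the sum so the weights add up to 1 again.
    total = sum(raw)
    return total_error, alpha, [r / total for r in raw]

# Hypothetical outcome: samples 2 and 5 are wrongly predicted.
weights = [0.1] * 10
is_correct = [True, False, True, True, False, True, True, True, True, True]
total_error, alpha, new_weights = update_weights(weights, is_correct)
print(total_error, alpha, new_weights)
```

In this made-up case the wrongly predicted samples end up with a larger weight than the correctly predicted ones, which is what makes them more likely to be drawn for the next model.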

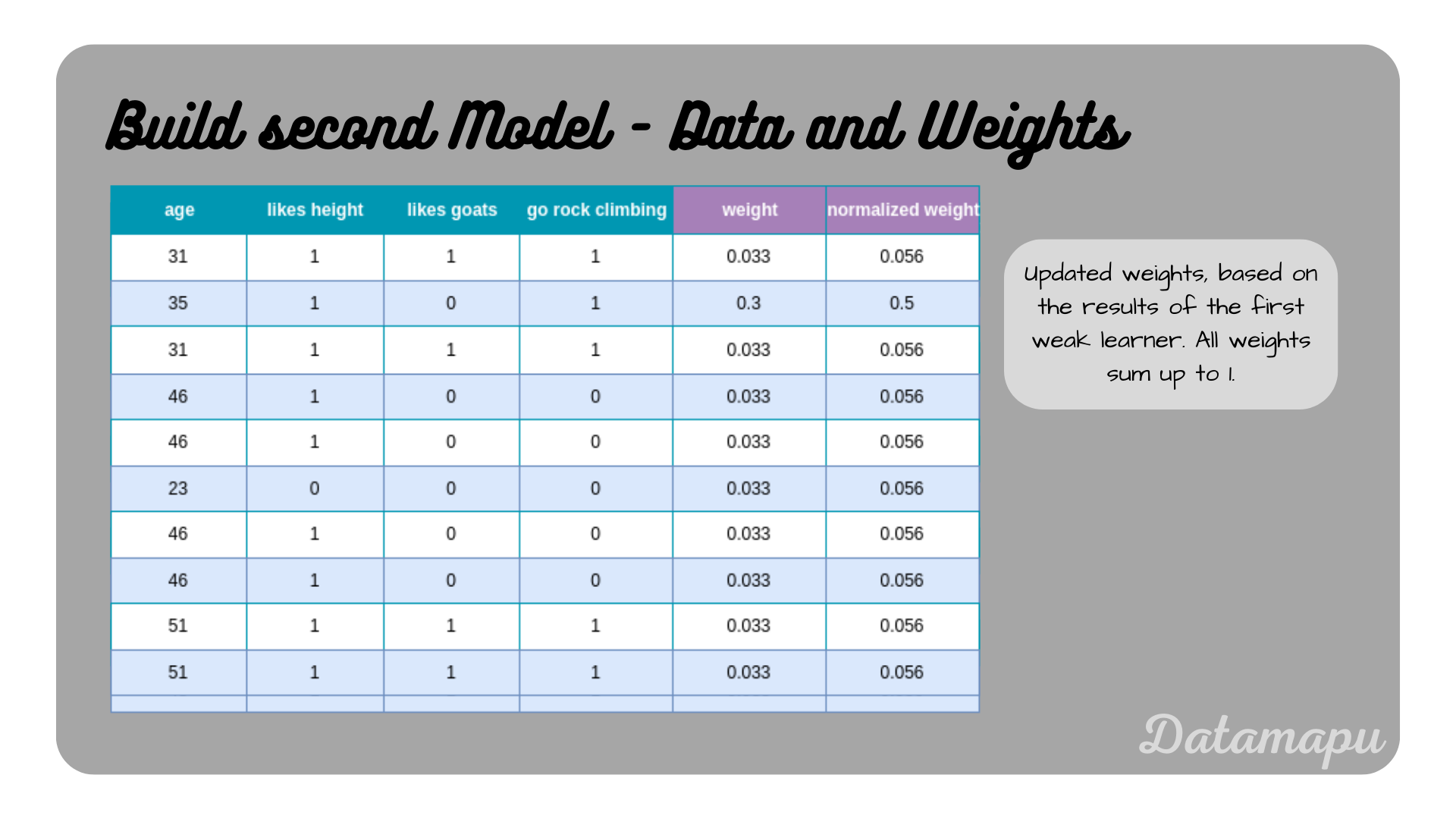

The dataset with the updated weights assigned to each sample.

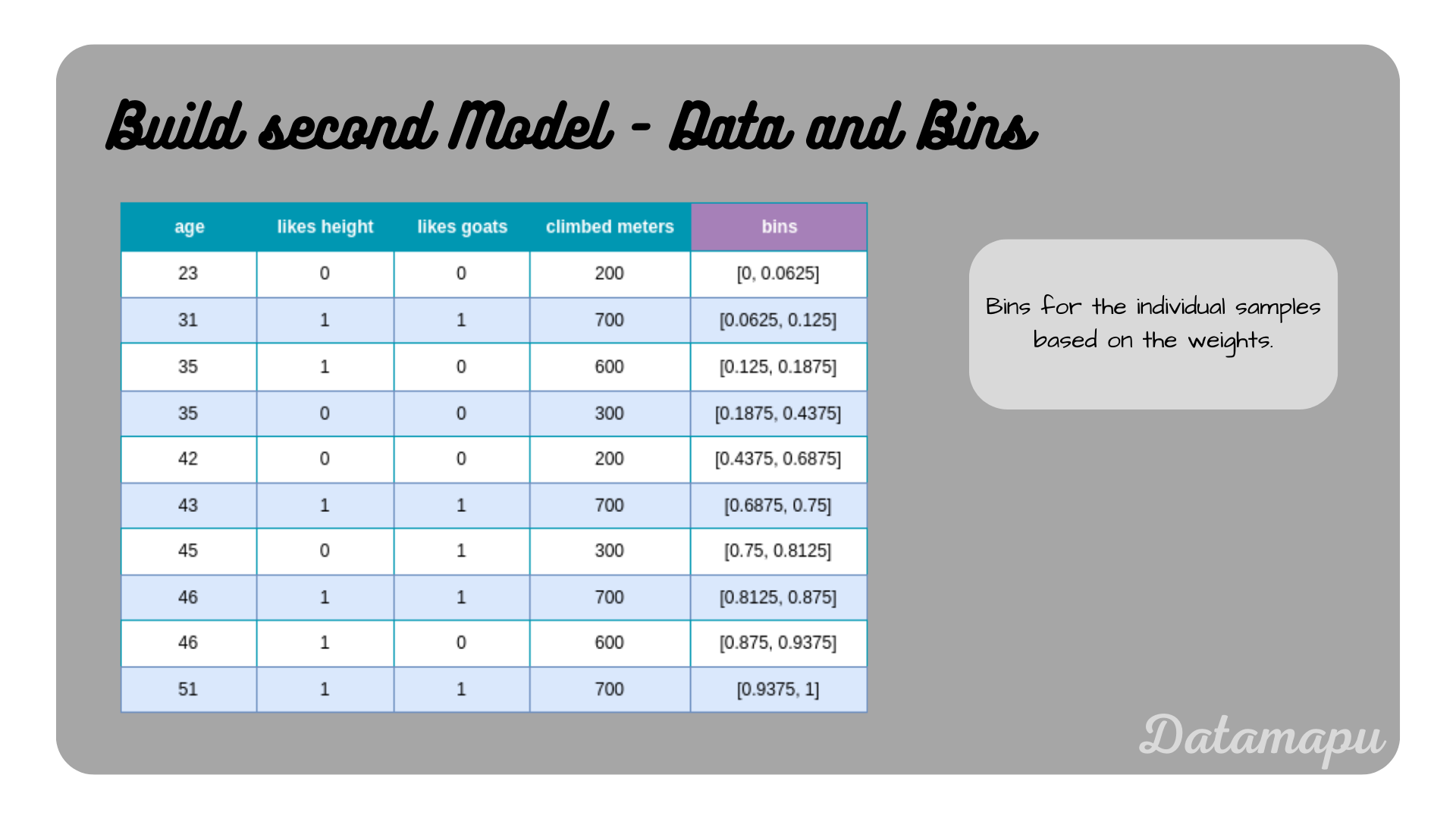

The dataset with the bins based on the weights for each sample.


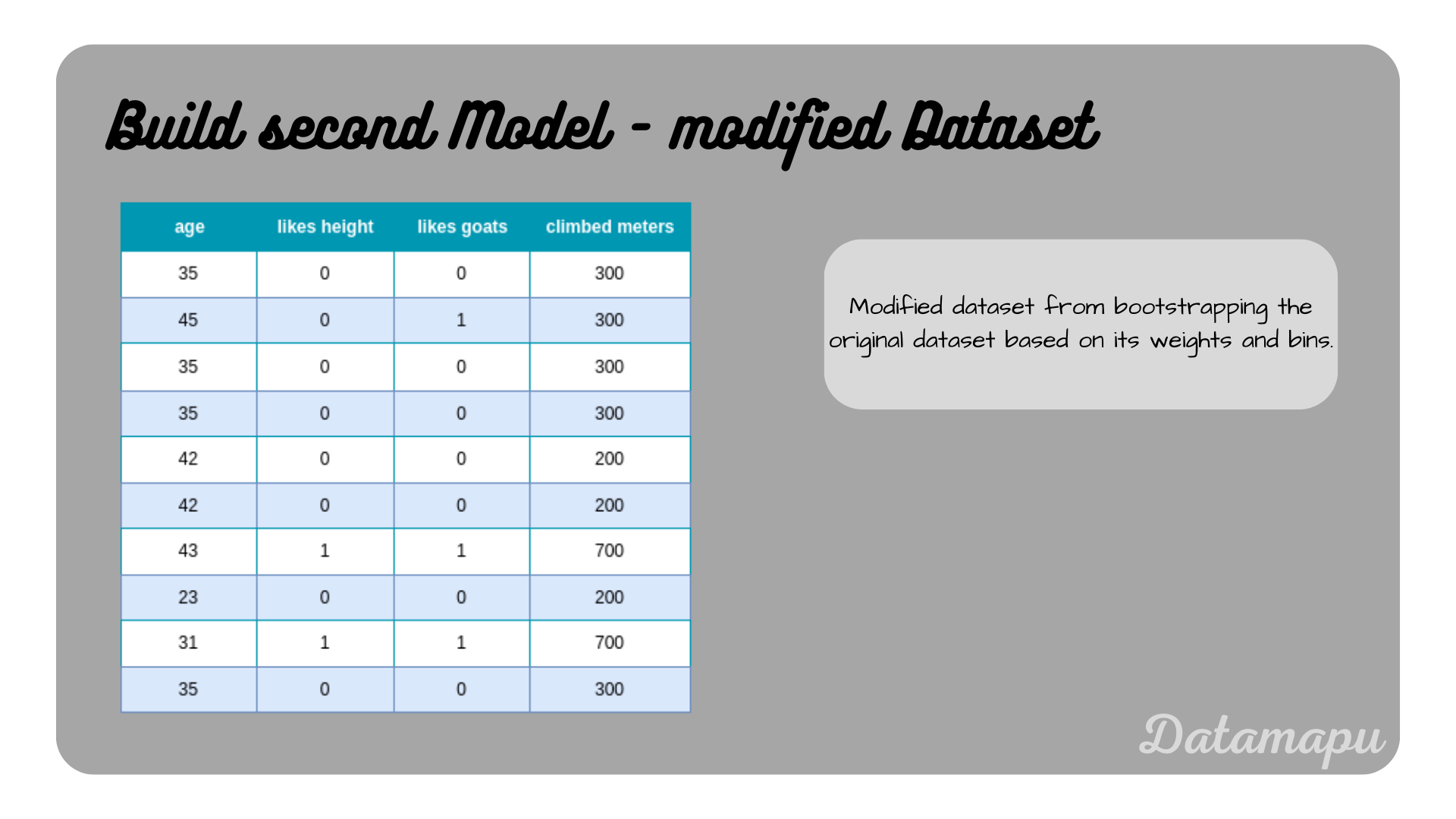

Now bootstrapping is performed to create a modified dataset, which we can use to build the next model. For that, we simulate drawing random numbers between

Modified dataset based on the weights.
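The bin-based resampling can be sketched as follows. The idea is that each sample owns an interval (bin) whose width equals its normalized weight, and a random number between 0 and 1 selects the sample whose bin it falls into. The helper names `build_bins` and `draw_indices` are hypothetical, and the weights are illustrative values rather than the ones from the tables in this article.

```python
import bisect
import itertools
import random

def build_bins(weights):
    """Upper bin edges: the running sum of the normalized weights."""
    return list(itertools.accumulate(weights))

def draw_indices(weights, random_numbers):
    """Map each random number in [0, 1) to the index of the bin it falls into."""
    edges = build_bins(weights)
    last = len(weights) - 1
    # bisect_right returns the first bin whose upper edge is greater than r;
    # min() guards against floating-point round-off in the last edge.
    return [min(bisect.bisect_right(edges, r), last) for r in random_numbers]

# Illustrative normalized weights (two samples carry a larger weight).
weights = [0.0625, 0.25, 0.0625, 0.0625, 0.25, 0.0625, 0.0625, 0.0625, 0.0625, 0.0625]

rng = random.Random(0)  # fixed seed only to make the sketch repeatable
random_numbers = [rng.random() for _ in weights]
indices = draw_indices(weights, random_numbers)
print(indices)  # row indices of the modified dataset; repeats are expected
```

Samples with a larger weight have wider bins and therefore tend to appear more often in the modified dataset.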


Now, we fit the second model to this modified dataset. The resulting model is illustrated in the next plot.

The second Decision Tree of the AdaBoost model.


Following the decision paths of this tree we see that three samples are wrongly predicted. The sample age

The weights are then updated following the above formula. The old weights can be looked up in the previous table. For the first sample, which was correctly predicted, we get

The second sample was wrongly predicted, thus the sign in the exponent is positive. The new weight is calculated as

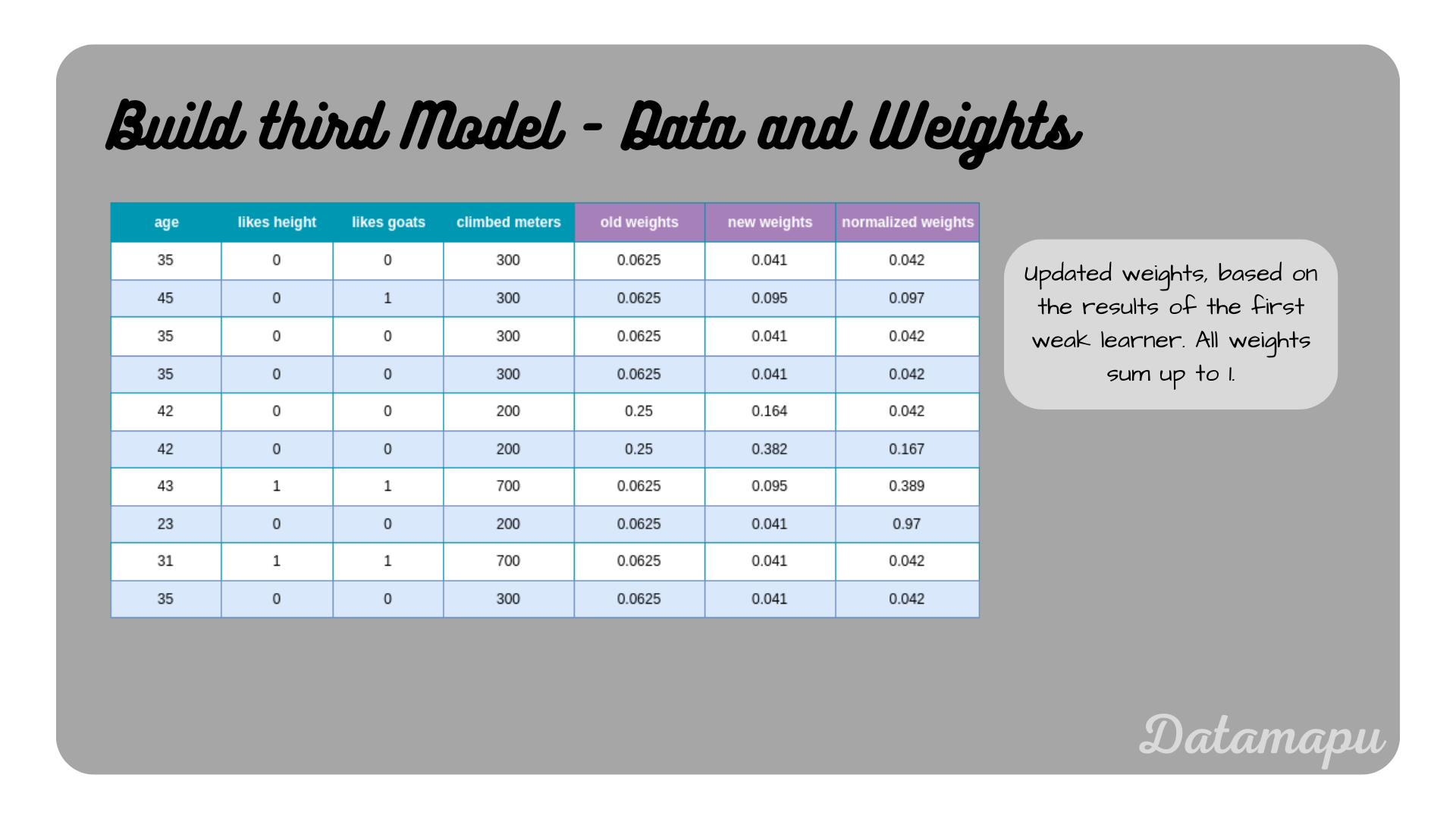

The new weights again need to be normalized. The weights for all samples are summarized in the following plot.

The dataset with the updated weights assigned to each sample.

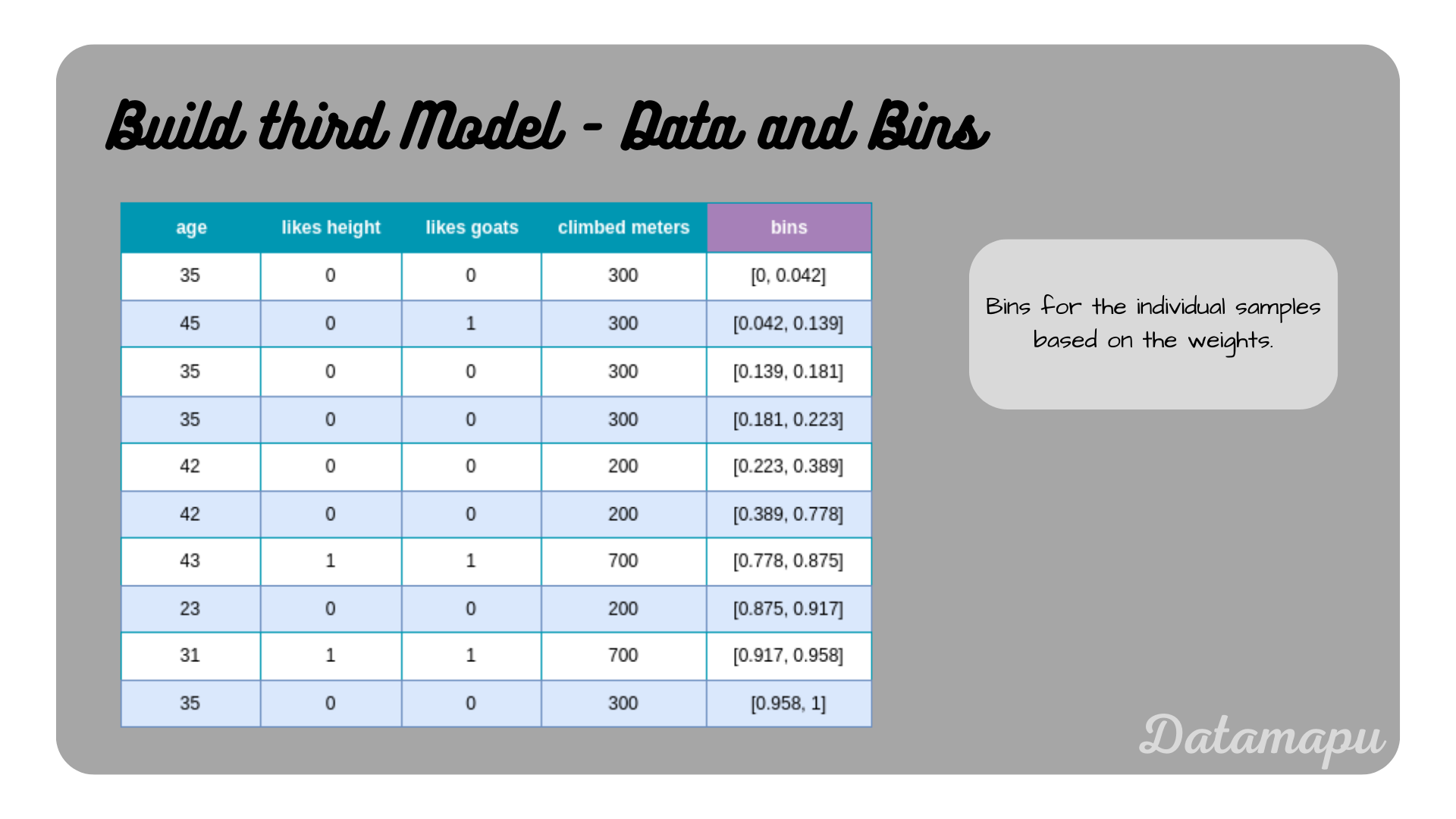

From the normalized weights, we calculate the bins.

The dataset with the bins assigned to each sample.

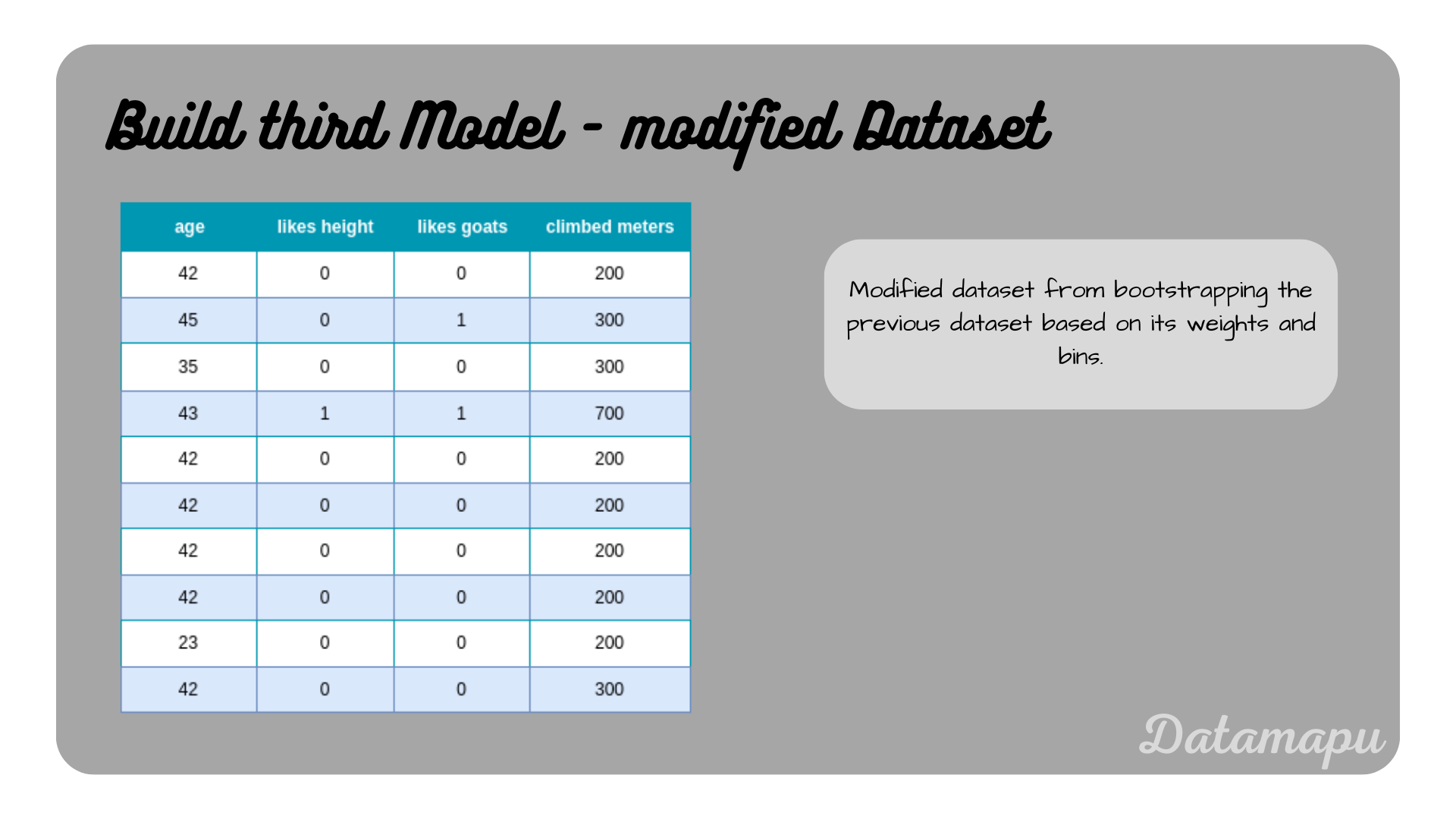

Again, we simulate drawing 10 random numbers. Let’s assume we got the random numbers

Modified dataset based on the weights.


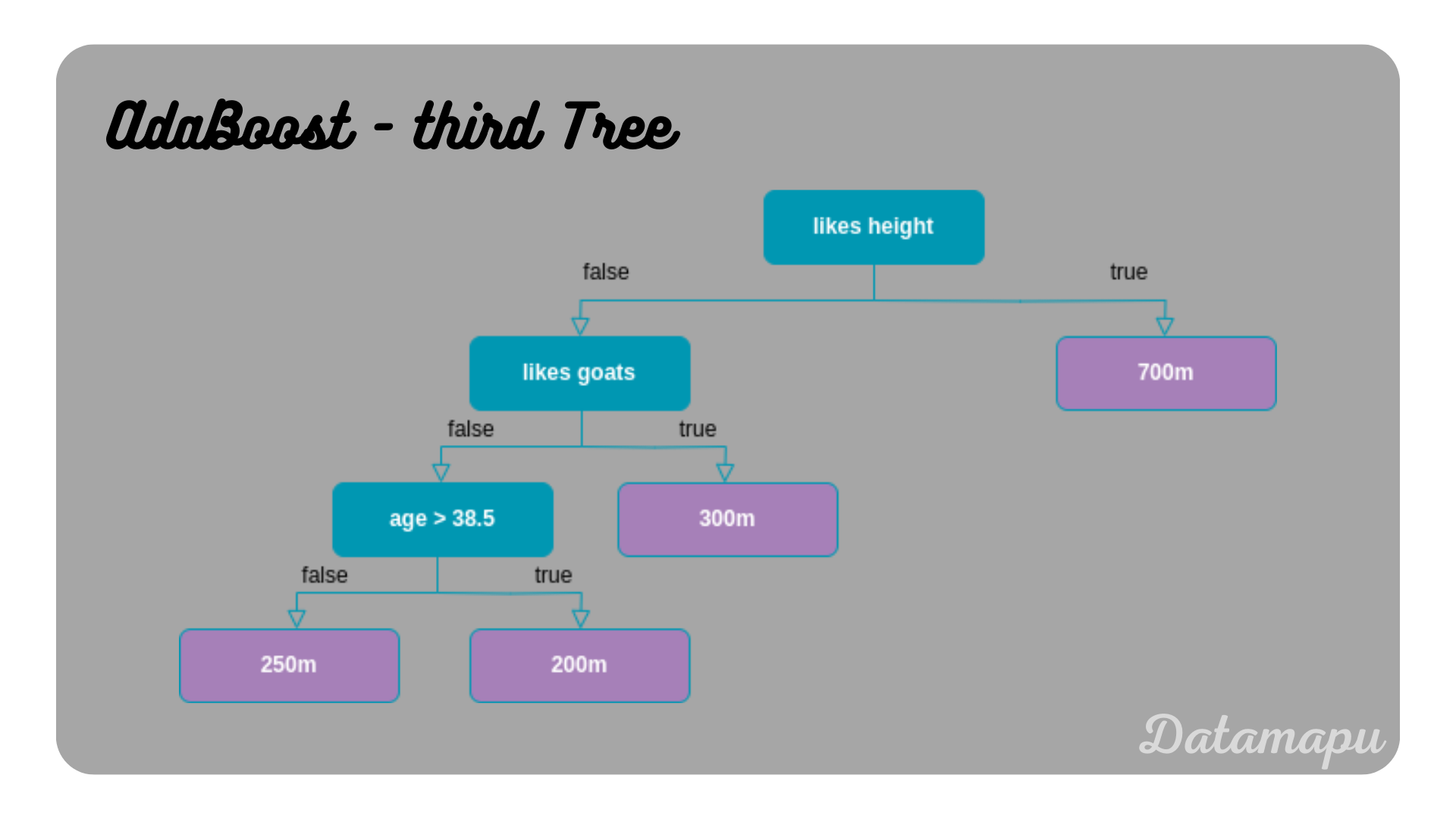

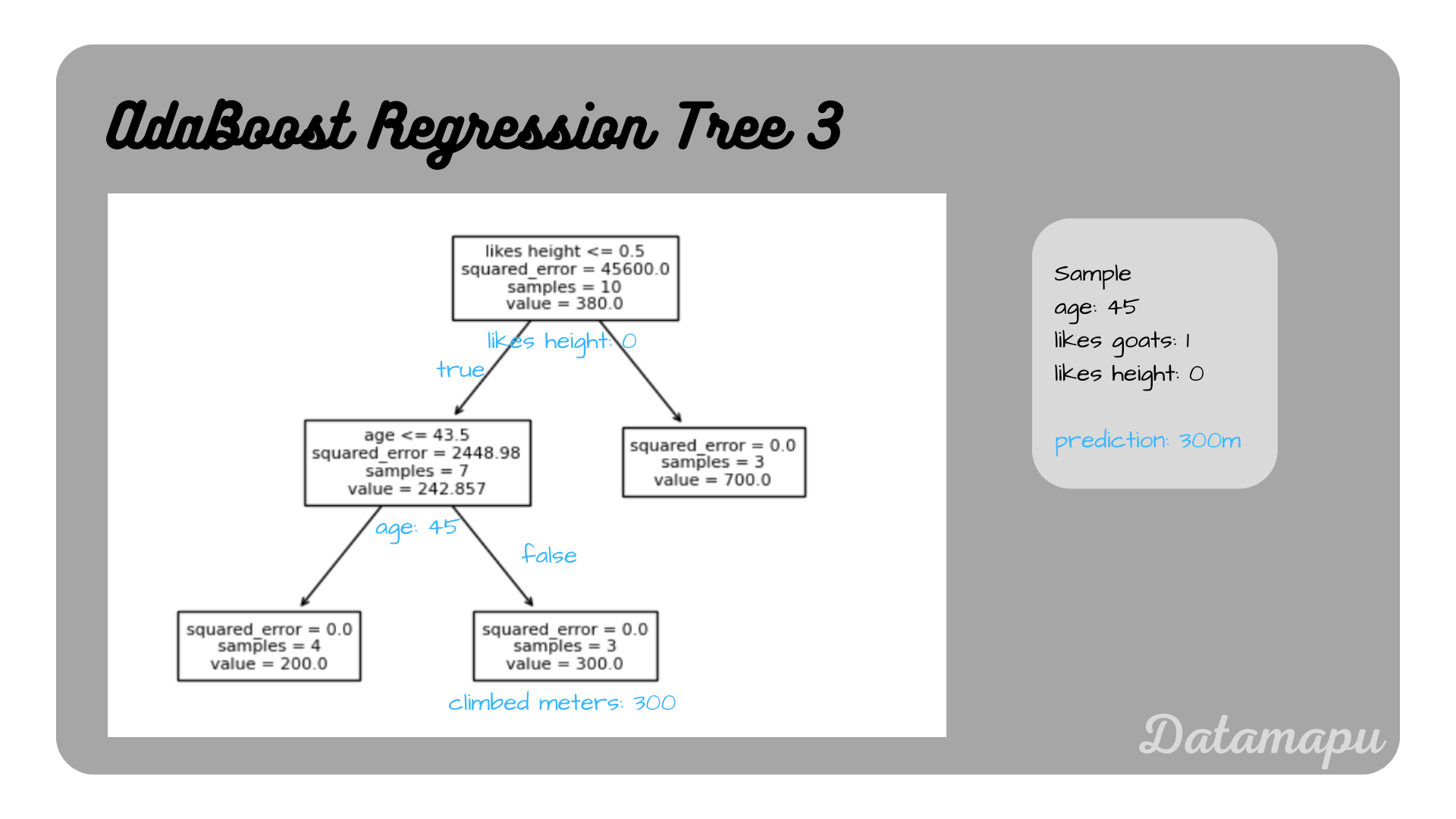

From this dataset, we build the third and last model, which is shown in the following plot.

The third Decision Tree of the AdaBoost model.


The only thing missing now to make the final prediction is the influence

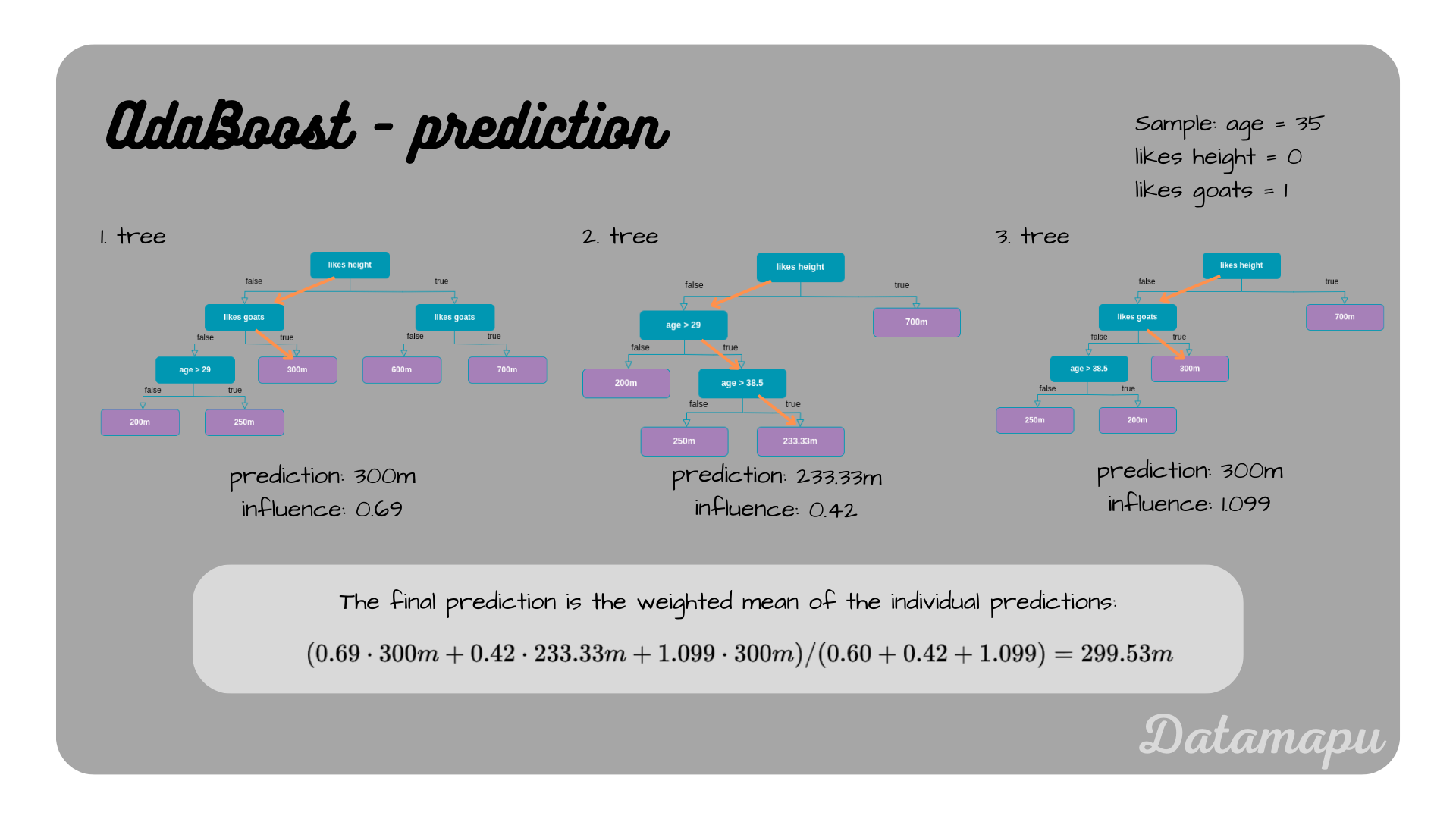

Let’s now use the model to make a prediction. Consider the following sample.

| Feature | Value |
|---|---|
| age | 45 |
| likes height | 0 |
| likes goats | 1 |

To make the final prediction, we need to consider the individual predictions of all the models. The weighted mean of these predictions is then the prediction of the constructed ensemble AdaBoost model. The influence values are used as weights. Following the decision path of the first tree results in a prediction of

The true value of this sample is

The final prediction for a specific sample.
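The weighted mean used for the final prediction can be written as a small helper. The numbers below are hypothetical placeholders for the three tree predictions and their influences, chosen only to make the arithmetic easy to follow; they are not the values derived in this article.

```python
def weighted_mean(predictions, influences):
    """Combine the individual tree predictions, weighted by each tree's influence."""
    total_influence = sum(influences)
    return sum(p * a for p, a in zip(predictions, influences)) / total_influence

# Hypothetical predictions of the three trees for one sample, and their influences.
tree_predictions = [700.0, 650.0, 800.0]
influences = [0.5, 0.25, 0.25]

final_prediction = weighted_mean(tree_predictions, influences)
print(final_prediction)  # (700*0.5 + 650*0.25 + 800*0.25) / 1.0 = 712.5
```

Dividing by the sum of the influences keeps the result on the scale of the target variable even when the influences do not add up to one.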


Fit a Model in Python

After developing a model by hand, we will now see how to fit an AdaBoost model for a regression task in Python. We can use the sklearn class AdaBoostRegressor. Note: The fitted model in sklearn differs from our hand-developed model, due to randomness in the algorithm and differences in the implementation. Randomness occurs in the underlying DecisionTreeRegressor algorithm and in the boosting used in the AdaBoostRegressor. The main concepts, however, remain the same.

We first create a dataframe for our data.


Now, we can fit the model. Because this example is only for illustration purposes and the dataset is very small, we limit the number of underlying Decision Trees to three by setting the hyperparameter n_estimators=3. Note that in a real-world project this number would usually be much higher; the default value in sklearn is 50.


We can then make predictions using the predict method and print the score, which for a regressor is defined as the coefficient of determination R² of the prediction.
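Putting the steps of this section together, a minimal sketch could look like the following. The column names and all values in the dataframe are placeholders invented for illustration; they are not the dataset used in this article, so the printed numbers will not match the article's results. The `random_state` argument is added only to make the run repeatable.

```python
import pandas as pd
from sklearn.ensemble import AdaBoostRegressor

# Placeholder data: same column layout as described in the text, invented values.
df = pd.DataFrame({
    "age": [20, 25, 30, 35, 40, 45, 50, 55, 60, 65],
    "likes_height": [0, 1, 0, 1, 0, 1, 0, 1, 0, 1],
    "likes_goats": [0, 0, 1, 1, 0, 0, 1, 1, 0, 1],
    "climbed_meters": [100.0, 400.0, 200.0, 500.0, 150.0, 450.0, 250.0, 600.0, 100.0, 700.0],
})
X = df[["age", "likes_height", "likes_goats"]]
y = df["climbed_meters"]

# Three boosting stages as in the text; the default base model is a
# DecisionTreeRegressor with max_depth=3.
model = AdaBoostRegressor(n_estimators=3, random_state=42)
model.fit(X, y)

y_pred = model.predict(X)
r2 = model.score(X, y)  # coefficient of determination R^2
print(y_pred)
print(r2)
```

Boosting may stop early on such a tiny dataset, for example if a tree already fits the data perfectly, so fewer than three trees can end up in `model.estimators_`.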


This leads to the predictions


This yields

stage 1:

stage 2:

stage 3:

which shows that all three models yield different predictions for some samples. The influences are called estimator_weights_ in sklearn and can also be printed.
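As a sketch of how the individual trees and their influences can be inspected, the fitted trees are available in `estimators_` and the influences in `estimator_weights_`. The data below are invented placeholder values, not the article's dataset. As far as I understand the scikit-learn implementation, `predict` combines the trees with a weighted median rather than a weighted mean, so a hand-computed weighted mean will not necessarily match it.

```python
import numpy as np
from sklearn.ensemble import AdaBoostRegressor

# Placeholder data (columns: age, likes height, likes goats), invented values.
X = np.array([
    [20, 0, 0], [25, 1, 0], [30, 0, 1], [35, 1, 1], [40, 0, 0],
    [45, 1, 0], [50, 0, 1], [55, 1, 1], [60, 0, 0], [65, 1, 1],
])
y = np.array([100.0, 400.0, 200.0, 500.0, 150.0, 450.0, 250.0, 600.0, 100.0, 700.0])

model = AdaBoostRegressor(n_estimators=3, random_state=42).fit(X, y)

# Predictions of each underlying tree together with its influence.
for i, (tree, influence) in enumerate(zip(model.estimators_, model.estimator_weights_), start=1):
    print(f"tree {i}: influence={influence:.3f}, predictions={tree.predict(X)}")

print(model.estimator_weights_)
```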


For this example the weights are

| Feature | Value |
|---|---|
| age | 45 |
| likes height | 0 |
| likes goats | 1 |

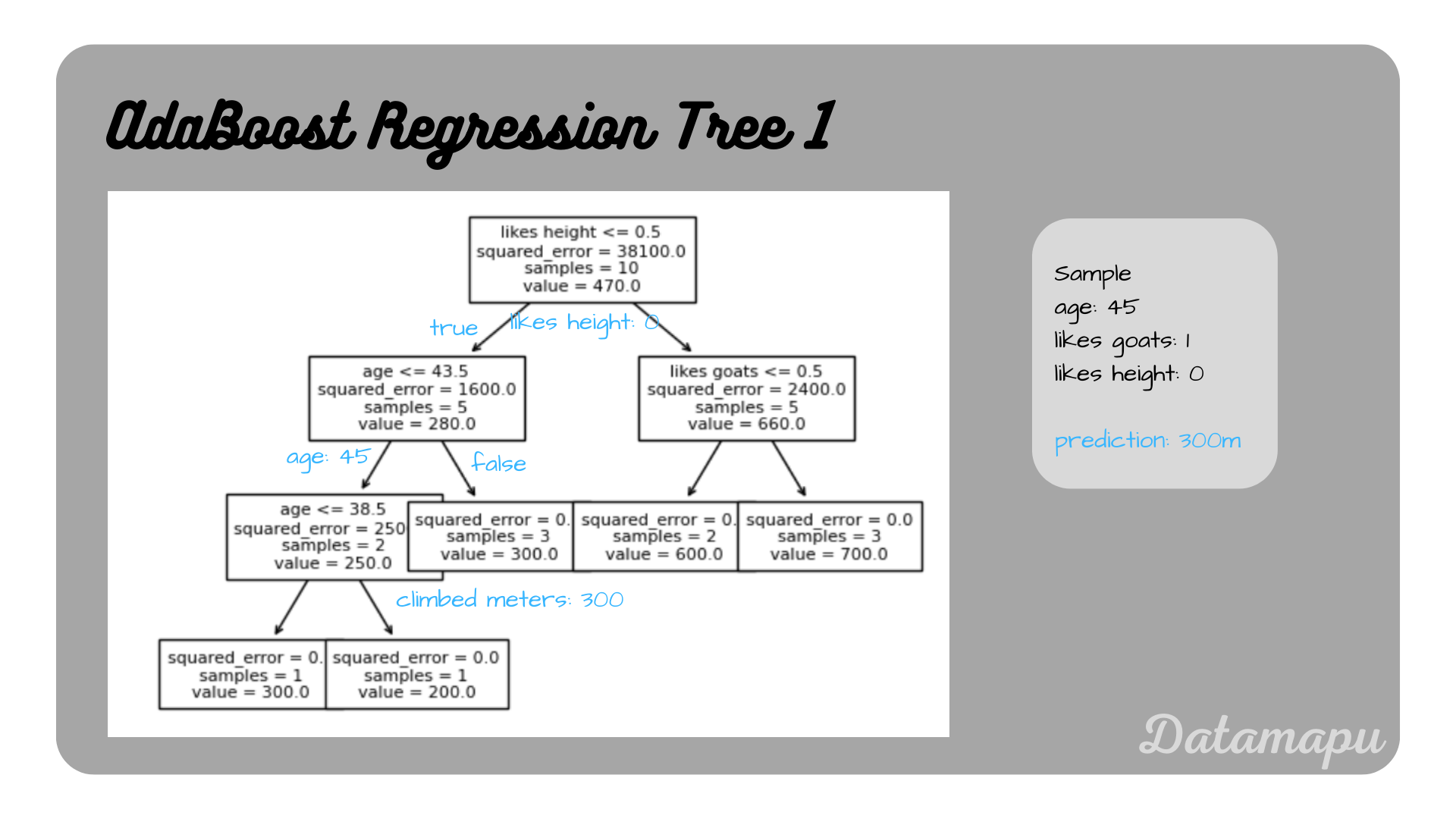

We can visualize the underlying Decision Trees and follow the decision paths. For the first tree the visualization is achieved as follows.
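One lightweight way to inspect a tree is a plain-text dump with `export_text` from `sklearn.tree`; `plot_tree` from the same module draws a graphical version but additionally requires matplotlib. Which of these the original code used is not recoverable from this section, so treat the following as one possible sketch. The data and feature names are invented placeholders.

```python
from sklearn.ensemble import AdaBoostRegressor
from sklearn.tree import export_text

feature_names = ["age", "likes_height", "likes_goats"]

# Placeholder data, invented values (not the article's dataset).
X = [
    [20, 0, 0], [25, 1, 0], [30, 0, 1], [35, 1, 1], [40, 0, 0],
    [45, 1, 0], [50, 0, 1], [55, 1, 1], [60, 0, 0], [65, 1, 1],
]
y = [100.0, 400.0, 200.0, 500.0, 150.0, 450.0, 250.0, 600.0, 100.0, 700.0]

model = AdaBoostRegressor(n_estimators=3, random_state=42).fit(X, y)

# Text representation of the first underlying tree: one line per split or leaf.
first_tree_text = export_text(model.estimators_[0], feature_names=feature_names)
print(first_tree_text)
```

Following the printed splits from the root to a leaf gives the prediction of that tree for a sample, in the same way as following the decision path in a plot.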


Prediction of the first tree.


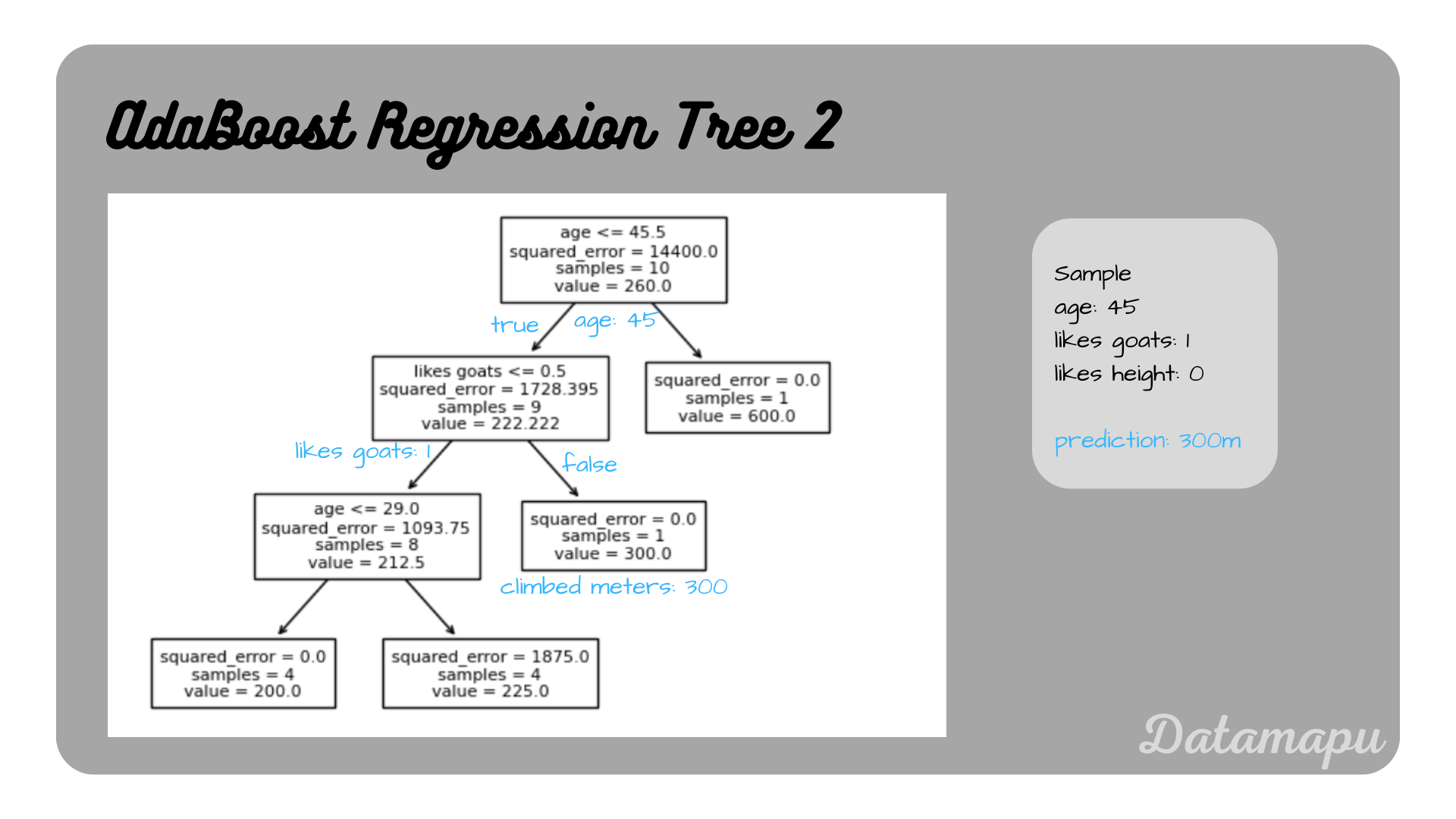

Prediction of the second tree.


Prediction of the third tree.


Combining these three predictions with the influences (estimator_weights_) leads to the final prediction

Filling in the corresponding numbers, this gives

which coincides with the prediction we printed above for this sample.

Summary

In this article, we developed an AdaBoost model for a regression task by hand, following the steps described in the separate article AdaBoost - Explained. Additionally, a model was developed using sklearn. Although both models were derived from the same dataset, the final models differ due to randomness in the algorithm and differences in the implementation. This post focused on the application of the algorithm to a simplified regression example. For a detailed example of a classification task, please refer to the article AdaBoost for Classification - Example.

References

[1] Solomatine, D.P.; Shrestha, D.L., “AdaBoost.RT: a Boosting Algorithm for Regression Problems”, 2004 IEEE International Joint Conference on Neural Networks, vol. 2, pp. 1163-1168, 25-29 July 2004, DOI: 10.1109/IJCNN.2004.1380102
