Logistic Regression - Explained

Introduction

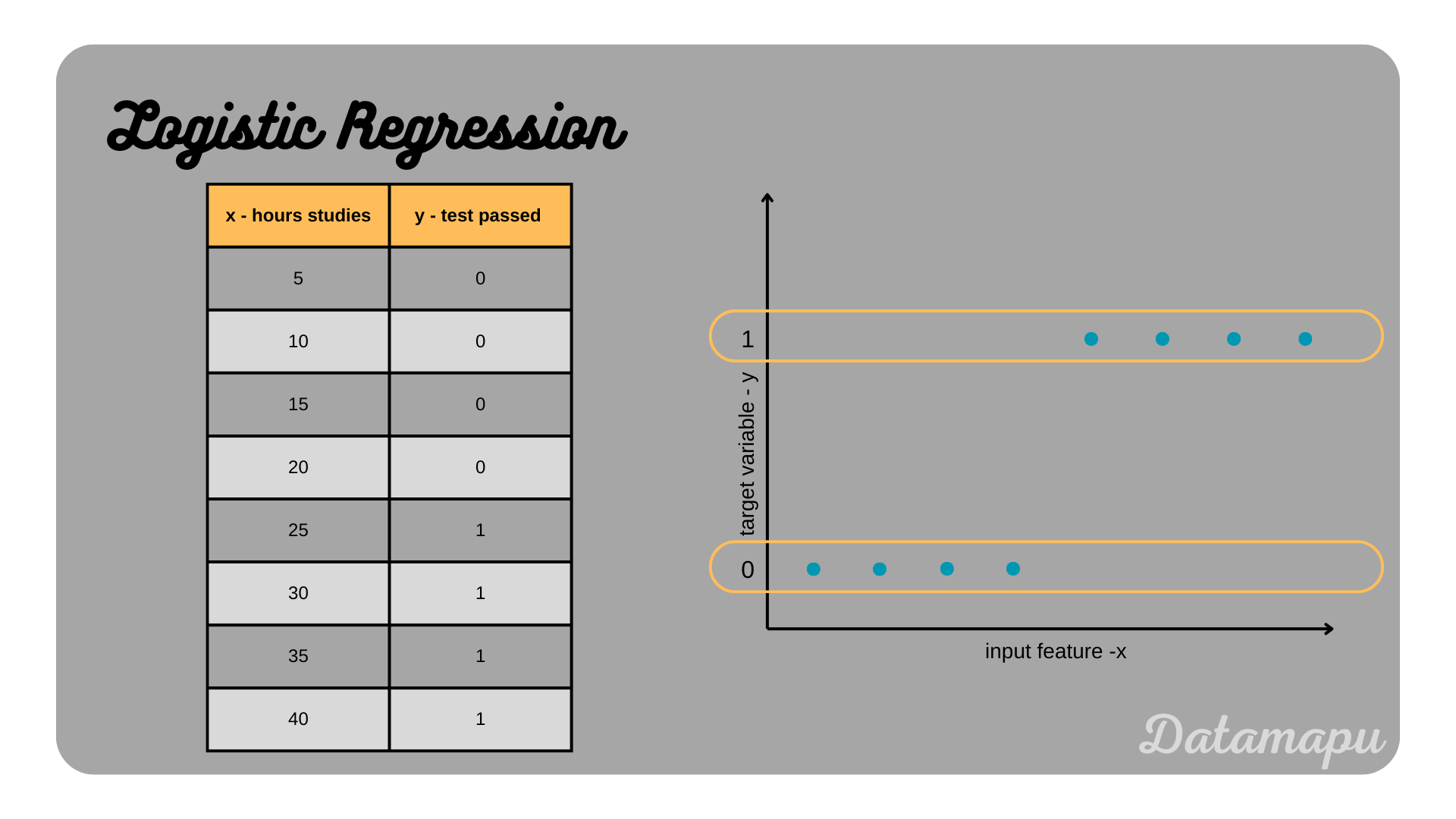

Logistic Regression is a Supervised Machine Learning algorithm in which a model is developed that relates the target variable to one or more input variables (features). However, in contrast to Linear Regression, the target (dependent) variable is not numerical but categorical. That is, the target variable falls into one of several categories (e.g. ’test passed’ or ’test not passed’). An idealized example of two categories for the target variable is illustrated in the plot below. The relation described in this example is whether a test is passed or not, depending on the number of hours studied. Note that in real-world examples the border between the two classes as a function of the input feature (independent variable) will usually not be as clear as in this plot.

Simplified and idealized example of a logistic regression

Binary Logistic Regression

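If the target variable contains two classes, we speak of a Binary Logistic Regression. The target values for binary classification are usually denoted as 0 and 1. In the previous plot, ’test passed’ is classified as 1 and ’test not passed’ is classified as 0. To develop a Logistic Regression model, we start with the equation of a Linear Regression. First, we assume that we only have one input feature (independent variable), that is, we use the equation for a Simple Linear Regression, $\hat{y} = a x + b$, with $a$ the slope and $b$ the intercept.

The output of this line is not restricted to values between 0 and 1, so it cannot be interpreted as a probability directly. This is where the sigmoid (logistic) function $\sigma(z) = \frac{1}{1 + e^{-z}}$ comes in, which maps any real number to a value between 0 and 1.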

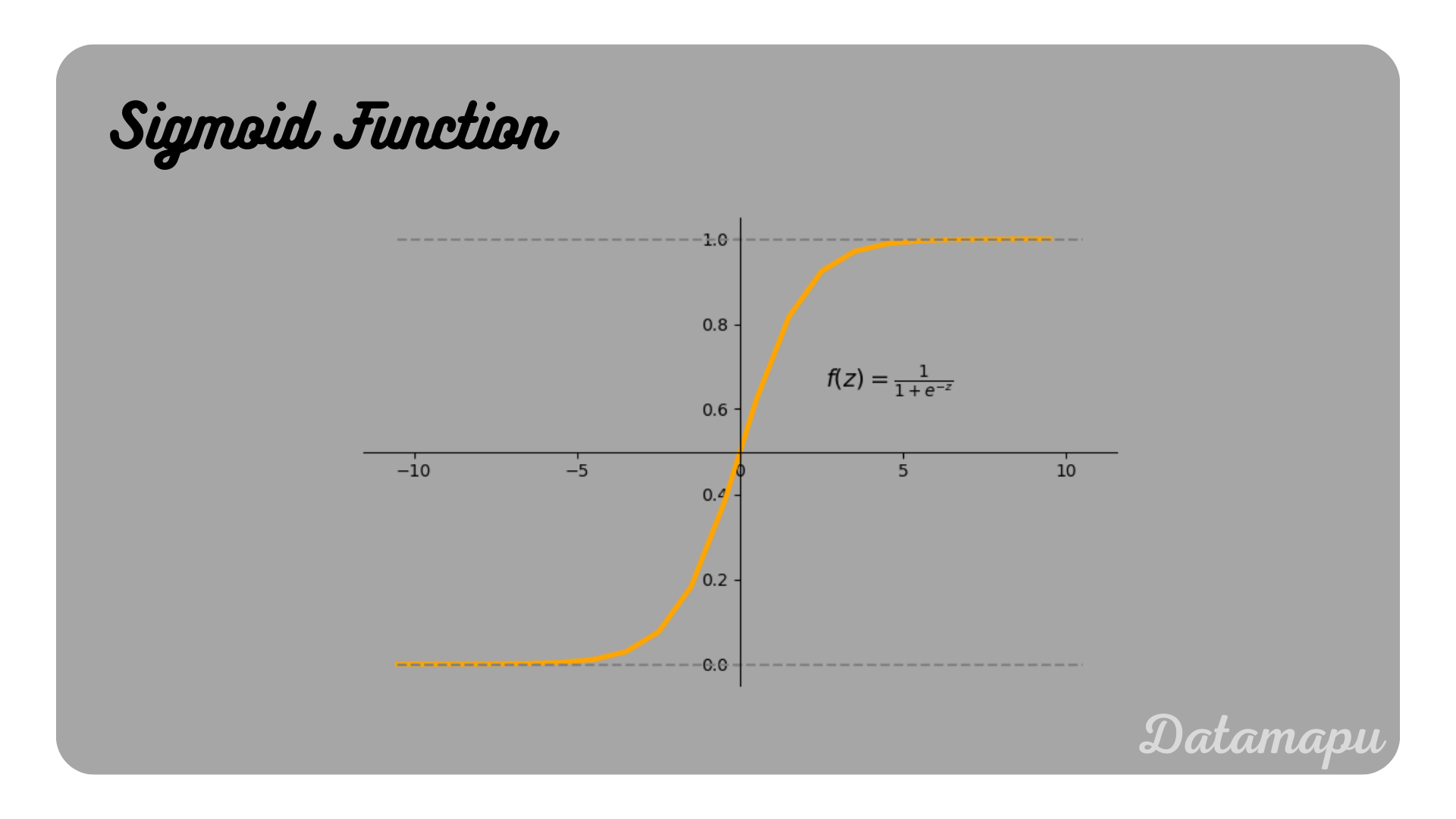

The sigmoid Function.

We then take our Linear Regression equation and insert it into the logistic equation. With that we get

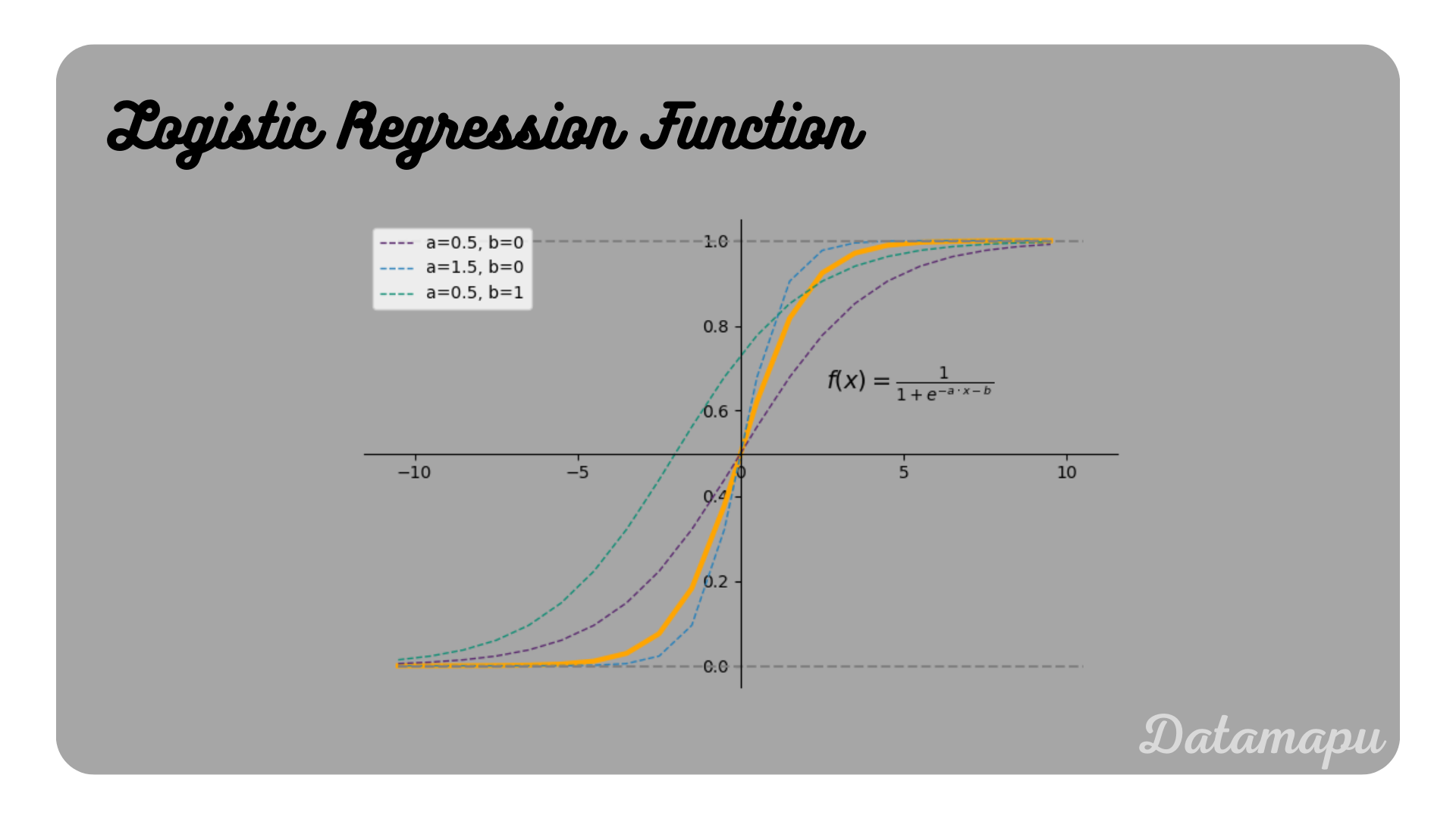

a Sigmoid Function depending on the parameters

The function for Logistic Regression illustrated for different parameters.

This is our final Logistic Regression model.

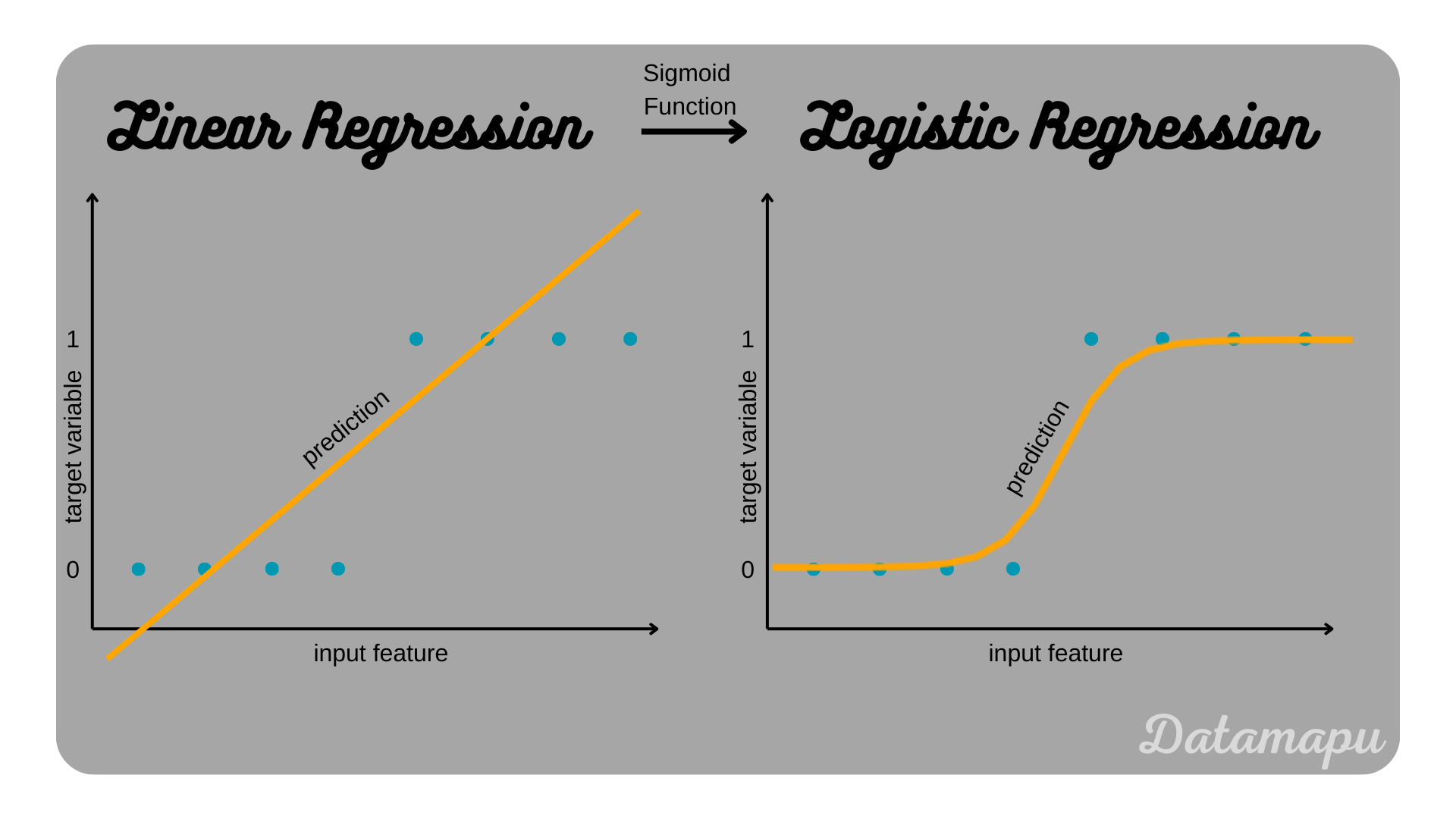

From Linear Regression to Logistic Regression
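
As a minimal sketch of this model, the snippet below plugs a linear equation into the sigmoid function using only the Python standard library. The function names and the parameter values `a` and `b` are illustrative assumptions, not fitted values.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number to the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(x: float, a: float, b: float) -> float:
    """Logistic Regression with one feature: sigmoid of the line a * x + b."""
    return sigmoid(a * x + b)


# Hypothetical parameters: the probability crosses 0.5 where a * x + b = 0,
# which is at x = 5 hours studied for these values.
a, b = 1.5, -7.5

for hours in (2, 5, 8):
    print(hours, round(predict_probability(hours, a, b), 3))
```

A larger absolute value of `a` makes the transition between the two classes steeper, while `b` shifts the point at which the predicted probability reaches 0.5.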

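If more than one independent variable (input feature) is considered, the input features can be numerical or categorical, as in a Linear Regression. The same idea as described above is followed, but the equation for Multiple Linear Regression is used as input for the Sigmoid function. For $n$ input features $x_1, \dots, x_n$ with coefficients $a_1, \dots, a_n$ and intercept $b$, this results in

$$\hat{y} = \frac{1}{1 + e^{-(a_1 x_1 + \dots + a_n x_n + b)}}.$$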

Multinomial Logistic Regression

If the target variable can take more than two classes, we speak of Multinomial Logistic Regression. By definition, a Logistic Regression is designed for binary target variables. If the target contains more than two classes, we need to adapt the algorithm. This can be done by splitting the multiclass classification problem into several binary classification problems. The two most common ways to do that are the One-vs-Rest and One-vs-One strategies. As an example, consider predicting one of the animals dog, cat, rabbit, or ferret from specific criteria, that is, we have four classes in our target data.

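One-vs-Rest. This approach creates one binary classifier per class, each separating that class from all the remaining classes. For our example with four classes this gives four classifiers: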

- Classifier 1: class 1: dog, class 2: cat, rabbit, or ferret

- Classifier 2: class 1: cat, class 2: dog, rabbit, or ferret

- Classifier 3: class 1: rabbit, class 2: dog, cat, or ferret

- Classifier 4: class 1: ferret, class 2: dog, cat, or rabbit

With these classifiers, we get probabilities for each category (dog, cat, rabbit, ferret) and the maximum of these probabilities is taken for the final outcome. For example, consider the following outcome:

- Classifier 1: probability for dog (class 1): 0.5, probability for cat, rabbit, or ferret (class 2): 0.5

- Classifier 2: probability for cat (class 1): 0.7, probability for dog, rabbit, or ferret (class 2): 0.3

- Classifier 3: probability for rabbit (class 1): 0.2, probability for dog, cat, or ferret (class 2): 0.8

- Classifier 4: probability for ferret (class 1): 0.3, probability for dog, cat, or rabbit (class 2): 0.7

In this case, classifier 2 achieves the highest probability, that is, the final outcome would be the class cat with a probability of 0.7.
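
The selection step can be sketched in a few lines of Python. The probabilities are the "class 1" values of the four classifiers from the example; the variable names are assumptions made for illustration.

```python
# Probability of "class 1" reported by each One-vs-Rest classifier in the example.
ovr_probabilities = {"dog": 0.5, "cat": 0.7, "rabbit": 0.2, "ferret": 0.3}

# The predicted class is the one whose classifier reports the highest probability.
predicted_class = max(ovr_probabilities, key=ovr_probabilities.get)
print(predicted_class, ovr_probabilities[predicted_class])
```

Note that the four probabilities come from separately fitted models, so they do not need to sum to 1.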

One-vs-One. In this approach, a binary model is fitted for each pair of output classes. That is, for $N$ classes, $N(N-1)/2$ classifiers are fitted. For our example with four classes this gives six classifiers:

- Classifier 1: class 1: dog, class 2: cat

- Classifier 2: class 1: dog, class 2: rabbit

- Classifier 3: class 1: dog, class 2: ferret

- Classifier 4: class 1: cat, class 2: rabbit

- Classifier 5: class 1: cat, class 2: ferret

- Classifier 6: class 1: rabbit, class 2: ferret

To obtain the prediction for the multiclass classification problem from these classifiers, each classifier votes for one of its two classes, the votes for each class are counted, and the class with the highest number of votes is the final prediction. This procedure is also known as “Voting”.
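
The voting step can be sketched as follows. The pairs are generated in the same order as the six classifiers listed above; the winners of the individual classifiers are made-up values for a single data sample.

```python
from collections import Counter
from itertools import combinations

classes = ["dog", "cat", "rabbit", "ferret"]

# Every unordered pair of classes gets its own binary classifier: N * (N - 1) / 2 pairs.
pairs = list(combinations(classes, 2))

# Hypothetical winners of the six pairwise classifiers for one data sample.
winners = {
    ("dog", "cat"): "cat",
    ("dog", "rabbit"): "dog",
    ("dog", "ferret"): "ferret",
    ("cat", "rabbit"): "cat",
    ("cat", "ferret"): "cat",
    ("rabbit", "ferret"): "ferret",
}

# Voting: count how often each class wins and pick the most frequent one.
votes = Counter(winners[pair] for pair in pairs)
prediction, n_votes = votes.most_common(1)[0]
print(len(pairs), prediction, n_votes)
```

Ties are possible with this scheme; how they are broken is a design choice that this sketch does not address.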

Note that a disadvantage of both methods is that they require fitting multiple models, which takes long if the dataset is large. This applies to One-vs-One even more than to One-vs-Rest, because the number of classifiers grows quadratically with the number of classes.
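
To see the difference in the number of fitted models, the following sketch wraps a Logistic Regression in scikit-learn's `OneVsRestClassifier` and `OneVsOneClassifier`. The data is synthetic (four blobs standing in for the four animals) and only serves as an illustration.

```python
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier

# Synthetic data with four classes standing in for dog, cat, rabbit, and ferret.
X, y = make_blobs(n_samples=200, centers=4, random_state=0)

ovr = OneVsRestClassifier(LogisticRegression()).fit(X, y)
ovo = OneVsOneClassifier(LogisticRegression()).fit(X, y)

# One binary model per class versus one binary model per pair of classes.
print(len(ovr.estimators_), len(ovo.estimators_))
```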

Find Best Fit

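As in all supervised Machine Learning models, we estimate the model parameters, in this case the coefficients of the linear part, by minimizing a loss function. For Logistic Regression the loss is the Negative Log-Likelihood (NLL), also known as log loss or binary cross-entropy. For $n$ data samples it can be written as

$$\mathrm{NLL} = -\frac{1}{n}\sum_{i=1}^{n}\left[y_i \ln(\hat{y}_i) + (1 - y_i)\ln(1 - \hat{y}_i)\right],$$

with $y_i \in \{0, 1\}$ the true labels and $\hat{y}_i$ the predicted probabilities.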

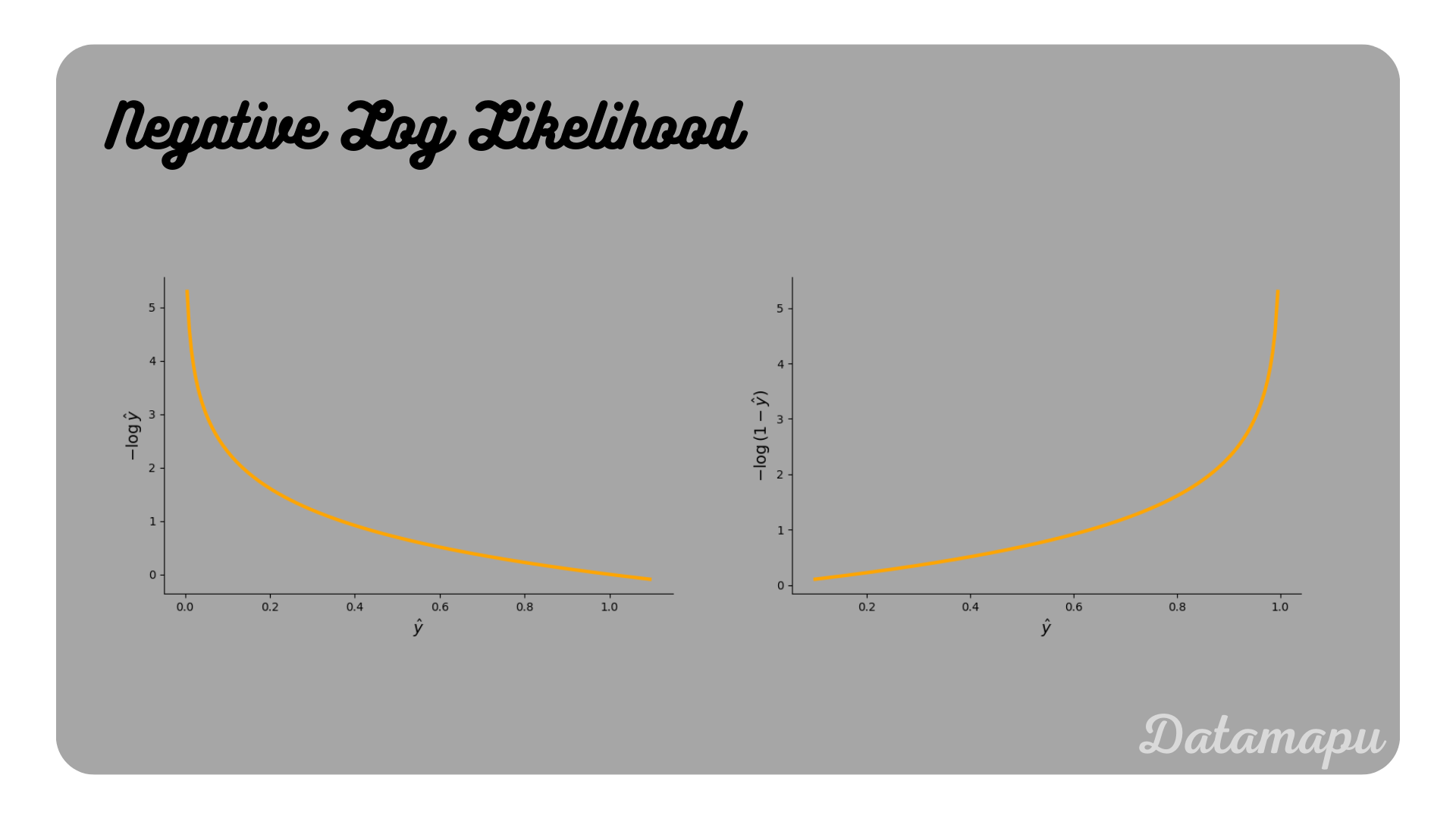

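When the data sample belongs to the first class, i.e. its true label is 1, only the logarithm of the predicted probability contributes to the loss. When it belongs to the second class, i.e. its true label is 0, only the logarithm of one minus the predicted probability contributes. In both cases the loss is small when the predicted probability is close to the true label and grows without bound as the prediction approaches the wrong label.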

Note that minimizing the NLL is equivalent to maximizing the likelihood, which is known as Maximum Likelihood Estimation. Maximizing the likelihood is more intuitive; it is, however, common to minimize a loss rather than to maximize an objective. The NLL can be derived from the likelihood function. This is, however, out of the scope of this article.
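
A small sketch of how the NLL behaves, written with the standard library only. It assumes the mean over all samples (a plain sum differs only by a constant factor), and the labels and probabilities are made-up values.

```python
import math


def negative_log_likelihood(y_true, y_prob):
    """Mean NLL for binary labels (0 or 1) and predicted probabilities of class 1."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        # For each sample only one of the two terms is non-zero.
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


y_true = [1, 0, 1, 0]  # hypothetical labels
confident_and_right = [0.9, 0.1, 0.8, 0.2]
confident_and_wrong = [0.1, 0.9, 0.2, 0.8]

print(negative_log_likelihood(y_true, confident_and_right))
print(negative_log_likelihood(y_true, confident_and_wrong))
```

Predictions of exactly 0 or 1 would lead to a logarithm of zero, so practical implementations usually clip the probabilities slightly away from these values.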

Interpretation

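To understand Logistic Regression better, let’s consider the defined model function again. For a single input feature $x$, with slope $a$ and intercept $b$ of the linear part, it reads

$$\hat{y} = \frac{1}{1 + e^{-z}},$$

with

$$z = a x + b.$$

Solving for $e^{z}$ gives

$$\frac{\hat{y}}{1 - \hat{y}} = e^{a x + b}.$$

This last equation describes the chances (odds) of the event, that is, the ratio of the probability that the event occurs to the probability that it does not occur. Taking the natural logarithm on both sides yields

$$\ln\left(\frac{\hat{y}}{1 - \hat{y}}\right) = a x + b.$$

This expression is known as the log-odds. That is, although Linear Regression and Logistic Regression are used for different types of problems (regression vs. classification), they still have a lot in common. In both cases a line (one input feature) or a hyperplane (more than one input feature) is used. In a Linear Regression, however, this line / hyperplane is used to predict the target variable, while in Logistic Regression it is used to separate two classes. In Logistic Regression the interpretation of the coefficients is therefore less direct than in Linear Regression: increasing $x$ by one unit changes the log-odds by $a$, which multiplies the odds by $e^{a}$.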
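
The log-odds relation can be checked numerically. The sketch below assumes one input feature with a hypothetical slope `a` and intercept `b`; it confirms that the logarithm of p / (1 - p) recovers the linear part a * x + b and that one additional unit of x multiplies the odds by exp(a).

```python
import math

a, b = 1.5, -7.5  # hypothetical slope and intercept


def probability(x):
    """Predicted probability of class 1 for input x."""
    return 1.0 / (1.0 + math.exp(-(a * x + b)))


def odds(p):
    """Ratio of the probability of the event to the probability of the non-event."""
    return p / (1.0 - p)


x = 6.0

# The log-odds recover the linear part a * x + b.
log_odds = math.log(odds(probability(x)))
print(log_odds, a * x + b)

# One extra unit of x multiplies the odds by exp(a).
odds_ratio = odds(probability(x + 1)) / odds(probability(x))
print(odds_ratio, math.exp(a))
```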

Evaluation

The evaluation of a Logistic Regression model can be done with any metric suitable for classification problems. The most common metrics are

Accuracy. The fraction of correctly predicted items out of all items. How many items were correctly classified?

Recall. The fraction of true positive items out of all actual positive items. How many of the actual positive items were found?

Precision. The fraction of true positive items out of all items predicted as positive. How many of the items predicted as positive are really positive?

F1-Score. The harmonic mean of Precision and Recall.
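
The four metrics can be computed directly from the counts of true and false positives and negatives. The labels and predictions below are made-up values for ten test samples.

```python
# Hypothetical true labels and predictions for ten test samples (1 = positive class).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

accuracy = (tp + tn) / len(pairs)
recall = tp / (tp + fn)
precision = tp / (tp + fp)
f1 = 2 * precision * recall / (precision + recall)

print(accuracy, recall, precision, f1)
```

The same values are available in scikit-learn through `accuracy_score`, `recall_score`, `precision_score`, and `f1_score` from `sklearn.metrics`.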

Which metric is suitable depends on the considered problem. In a separate article you can find a more detailed overview and explanation about the most common metrics for classification problems.

Example

In Python you can fit a Logistic Regression using the sklearn library. Here is a simplified example for illustration purposes:
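
A minimal sketch of such a fit could look as follows. The data (hours studied versus test passed) is made up for illustration, and the variable names are assumptions.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Hypothetical data: hours studied and whether the test was passed (1) or not (0).
hours = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10]).reshape(-1, 1)
passed = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1])

model = LogisticRegression()
model.fit(hours, passed)

new_hours = np.array([[2], [9]])
print(model.predict(new_hours))        # predicted classes
print(model.predict_proba(new_hours))  # one column per class, ordered as model.classes_
print(model.coef_, model.intercept_)   # fitted slope and intercept
```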

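The predict_proba method gives the probabilities for each of the two classes, with one column per class in the order given by the classes_ attribute of the fitted model. If we want the probabilities of a single class only, we select the corresponding column.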

You can find a more detailed, elaborated example for a Logistic Regression on Kaggle.

Summary

In this article we learned about Logistic Regression. Logistic Regression is a supervised Machine Learning algorithm that is built for binary classification but can be extended to multiclass classification. It is based on predicting probabilities using the Sigmoid function. These probabilities are then mapped to the final target classes.
