AdaBoost for Classification - Example

Introduction

AdaBoost is an ensemble model based on Boosting. The individual models are so-called weak learners, which means that they have only little predictive skill, and they are built sequentially so that each one reduces the errors of its predecessors. A detailed description of the algorithm can be found in the separate article AdaBoost - Explained. In this post, we will focus on a concrete example for a classification task and develop the final ensemble model in detail. A detailed example of a regression task is given in the article AdaBoost for Regression - Example.

Data

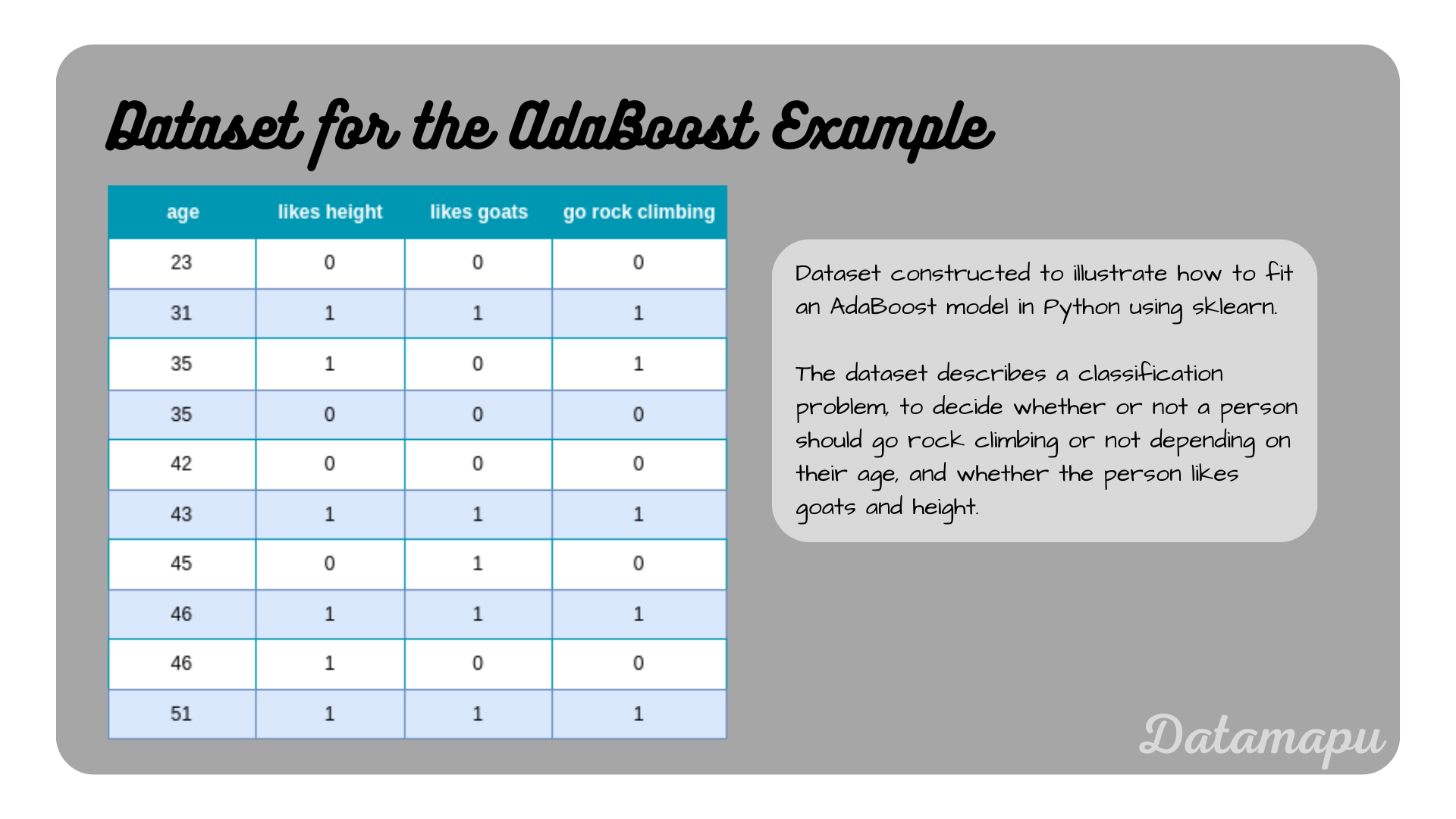

The dataset used in this example contains only 10 samples, to keep the calculations by hand feasible. It describes the problem of whether a person should go rock climbing or not, depending on their age and on whether the person likes height and goats. This dataset was also used in the article Decision Trees for Classification - Example, which makes comparisons easier. The data is described in the plot below.

Dataset used to develop an AdaBoost model.


Build the Model

We build an AdaBoost model with Decision Trees as weak learners, because this is the most common application. The underlying trees have depth one, that is, only the stump of each tree is used as a weak learner. This is also the default configuration in sklearn, which we can use to fit a model in Python. Our ensemble model will consist of three weak learners. This number is chosen low for demonstration purposes.

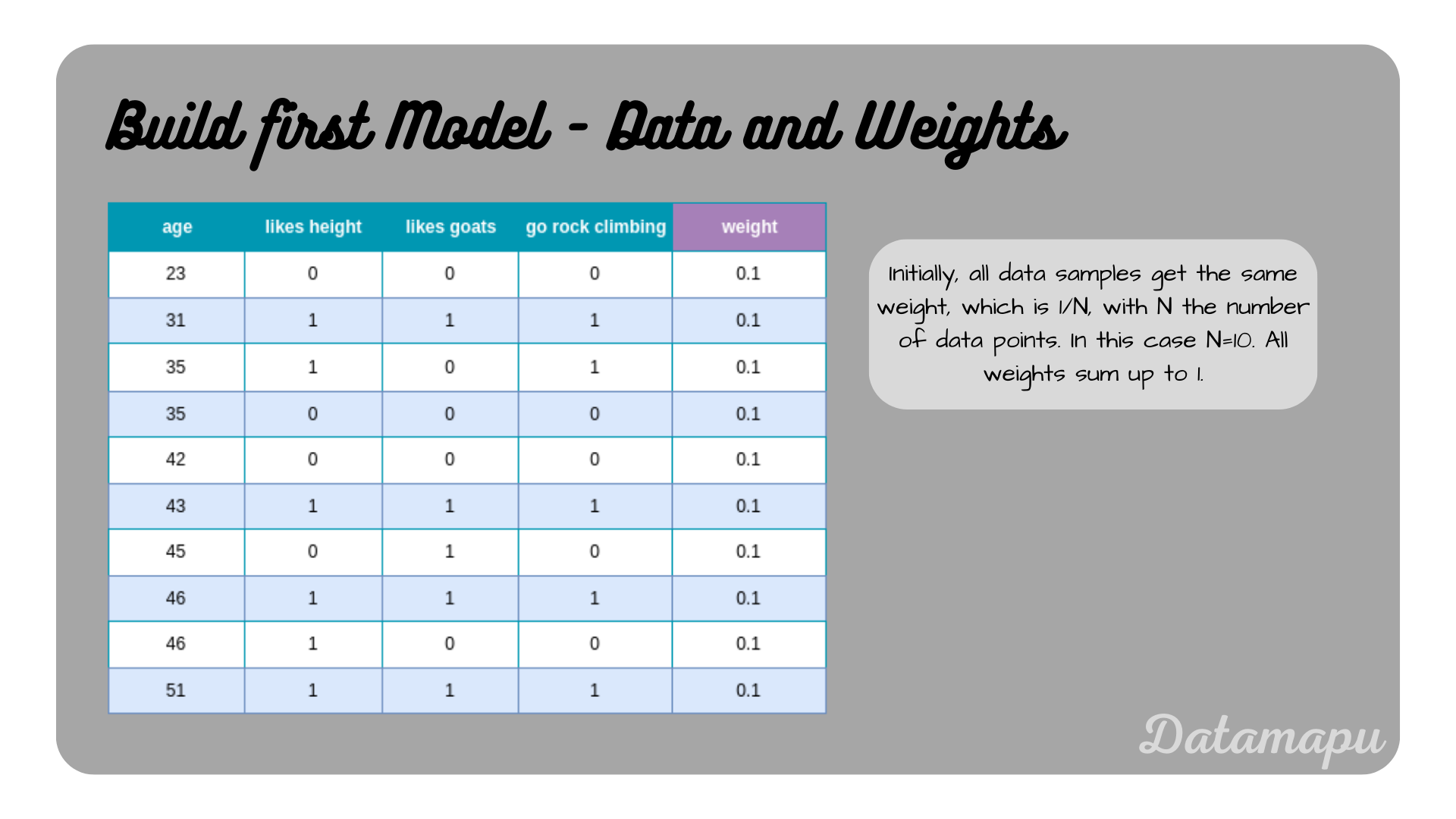

The first step in building an AdaBoost model is assigning weights to the individual data points. For the initial model, all data points get the same weight, which is $w = \frac{1}{N} = \frac{1}{10} = 0.1$ for the $N = 10$ samples of this dataset.

Initial dataset and weights for the example.
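As a minimal sketch, the equal initial weights for the ten samples could be set up in Python like this. The variable names are illustrative and not taken from the original post.

```python
# Number of samples in the example dataset (stated in the text).
n_samples = 10

# Every sample starts with the same weight 1/N, so the weights sum to 1.
initial_weights = [1 / n_samples] * n_samples

print(initial_weights[0])
print(sum(initial_weights))
```

Because of floating-point arithmetic, the printed sum may differ from 1 in the last digits.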


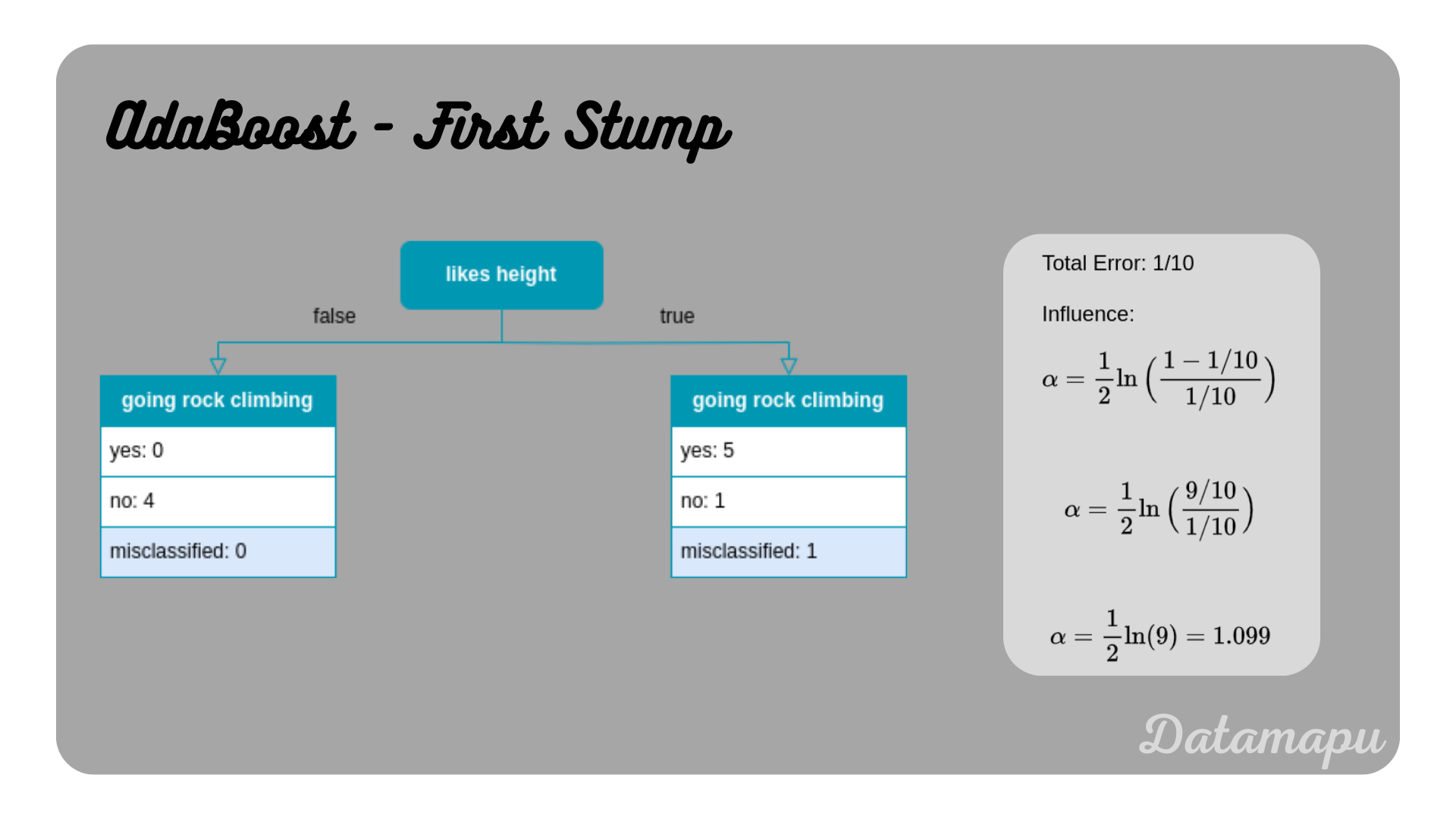

We now start with fitting a Decision Tree to this data. As stated earlier, we will use decision stumps, that is, we are only interested in the first split. The same data was used in Decision Trees for Classification - Example to develop a Decision Tree by hand; please check there for the details on how to develop a Decision Tree and how to find the best split. The resulting stump is shown in the following plot. In AdaBoost, each underlying model - in our case decision stumps - gets a different weight, which is the so-called influence $\alpha$.

The first stump, i.e. the first weak learner for our AdaBoost algorithm.
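The influence of a stump can be computed from its total error. The sketch below assumes the standard AdaBoost definition $\alpha = \frac{1}{2}\ln\left(\frac{1 - \text{TotalError}}{\text{TotalError}}\right)$, where the total error is the summed weight of the misclassified samples; the function name `influence` is hypothetical.

```python
import math


def influence(total_error: float) -> float:
    """Influence (alpha) of a weak learner, assuming the standard AdaBoost definition."""
    return 0.5 * math.log((1 - total_error) / total_error)


# One of ten equally weighted samples is misclassified, so the total error is 0.1.
alpha = influence(0.1)
print(round(alpha, 3))  # 1.099
```

The formula is undefined for a total error of exactly 0 or 1, so a real implementation would need to handle these edge cases separately.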


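With the influence $\alpha$, the weights are updated as

$$w_{new} = w_{old} \cdot e^{\pm\alpha}.$$

The sign in this equation depends on whether a sample was correctly classified or not. The first stump misclassifies one of the ten equally weighted samples, so its total error is $0.1$ and, with the usual AdaBoost definition, $\alpha = \frac{1}{2}\ln\left(\frac{1 - 0.1}{0.1}\right) \approx 1.099$. For correctly classified samples, we get

$$w_{new} = 0.1 \cdot e^{-1.099} \approx 0.033,$$

and for wrongly classified samples

$$w_{new} = 0.1 \cdot e^{1.099} \approx 0.3,$$

accordingly. These weights still need to be normalized, so that their sum equals $1$. This is done by dividing each weight by the sum of all weights.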

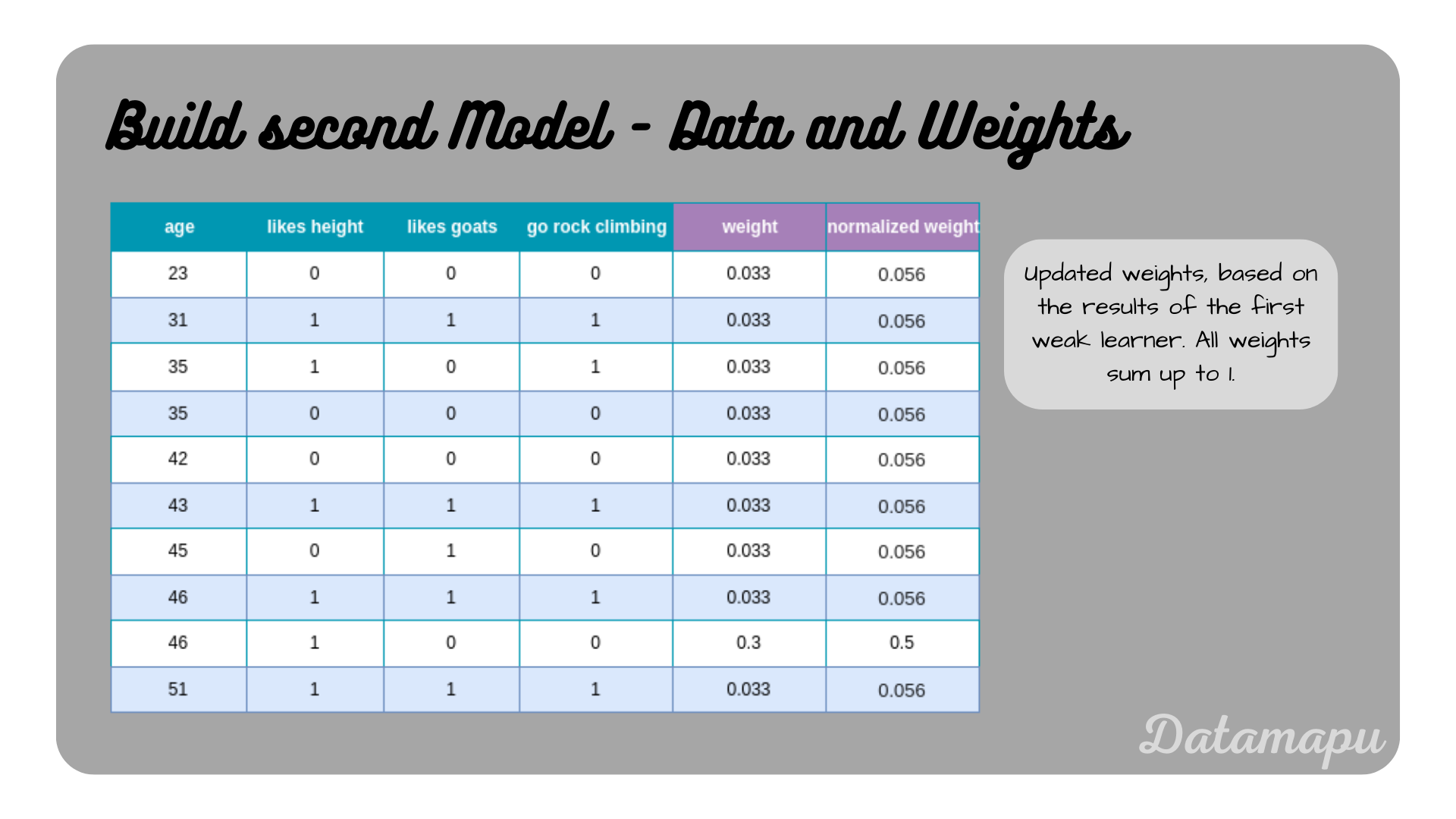

The dataset with updated weights based on the influence $\alpha$.
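The update and normalization of the weights can be sketched as follows. The helper `update_weights` is hypothetical, and which sample is misclassified is an assumption made only for illustration.

```python
import math


def update_weights(weights, misclassified, alpha):
    """Scale by exp(+alpha) for misclassified samples, exp(-alpha) otherwise, then normalize."""
    scaled = [
        w * math.exp(alpha if wrong else -alpha)
        for w, wrong in zip(weights, misclassified)
    ]
    total = sum(scaled)
    return [w / total for w in scaled]


weights = [0.1] * 10
# Assumption for illustration: the sample at index 1 is the misclassified one.
misclassified = [i == 1 for i in range(10)]
alpha = 0.5 * math.log(0.9 / 0.1)

new_weights = update_weights(weights, misclassified, alpha)
print([round(w, 3) for w in new_weights])
```

With one misclassified sample out of ten, the misclassified sample ends up with a normalized weight of 0.5 and each of the other nine with about 0.056.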


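The weights are used to create bins. Let’s assume we have the weights $w_1, w_2, \dots, w_N$. The bin of the first sample is then $[0, w_1)$, the bin of the second sample is $[w_1, w_1 + w_2)$, and so on, so that the width of each bin equals the weight of its sample.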

The dataset with bins based on the weights.
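One way to build such bins is from the cumulative sum of the weights. This is a minimal sketch; the helper `weight_bins` and the weight values are assumptions for illustration, not the values from the plot.

```python
from itertools import accumulate


def weight_bins(weights):
    """Return one half-open interval [lower, upper) per sample, built from cumulative weights."""
    uppers = list(accumulate(weights))
    lowers = [0.0] + uppers[:-1]
    return list(zip(lowers, uppers))


# Illustrative normalized weights: one heavily weighted sample and nine light ones.
weights = [1 / 18, 0.5] + [1 / 18] * 8
bins = weight_bins(weights)
for index, (lower, upper) in enumerate(bins):
    print(index, round(lower, 3), round(upper, 3))
```

The upper edge of each bin is the lower edge of the next one, and the last upper edge is 1 up to floating-point round-off.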


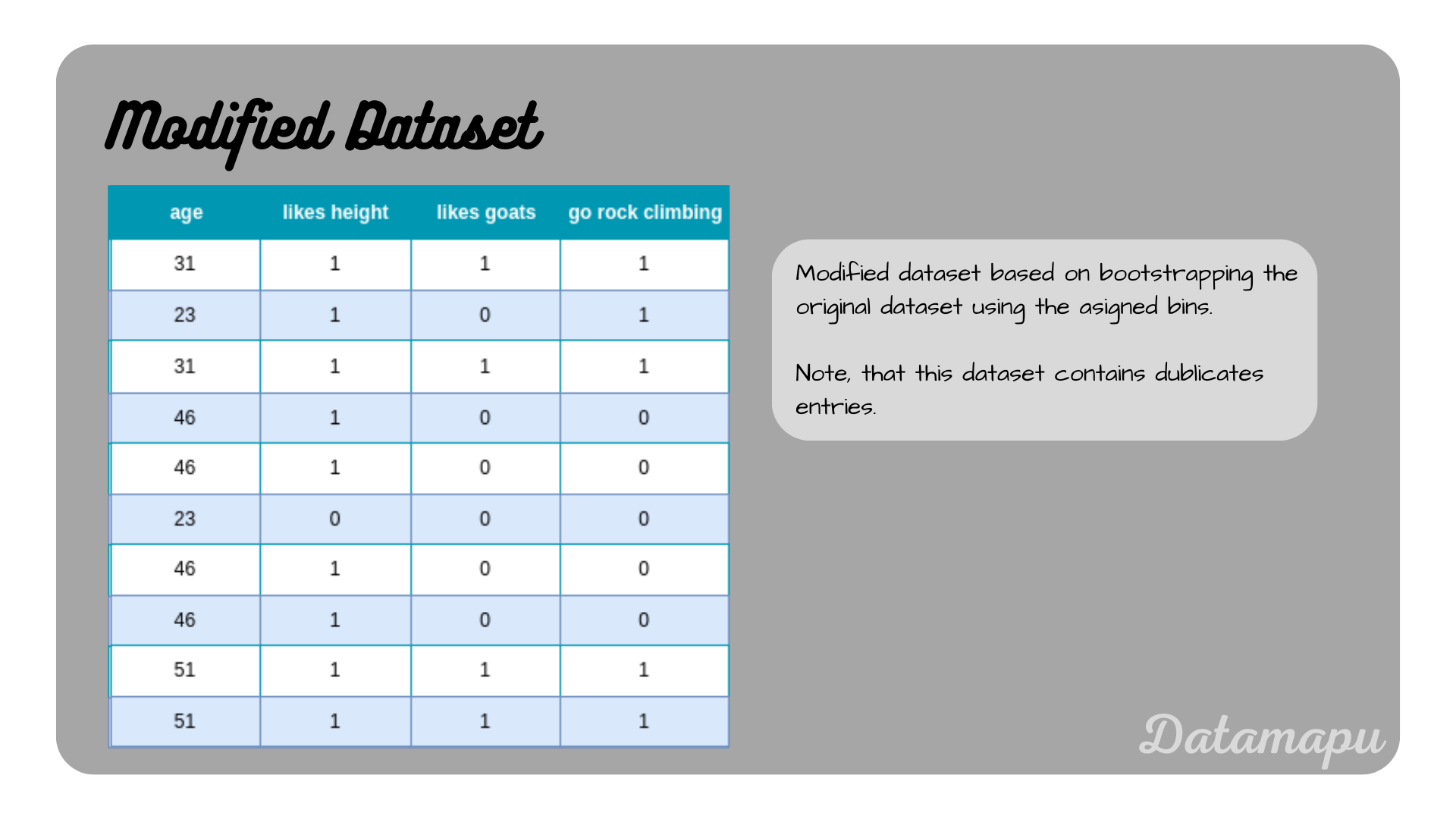

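Now, some randomness comes into play. Random numbers between $0$ and $1$ are drawn, and each number selects the sample whose bin it falls into. Samples with a larger weight have a wider bin and are therefore more likely to be drawn, possibly several times.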

Modified dataset to build the second stump.
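The drawing step can be sketched with the standard library as shown below. The helper `resample_indices`, the weights, and the seed are assumptions for illustration, so the drawn indices will not match the dataset in the plot.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def resample_indices(weights, rng):
    """Draw len(weights) sample indices, each chosen with probability equal to its weight."""
    upper_edges = list(accumulate(weights))
    upper_edges[-1] = 1.0  # guard against floating-point round-off in the last edge
    # A random number r in [0, 1) belongs to the first bin whose upper edge is larger than r.
    return [bisect_right(upper_edges, rng.random()) for _ in weights]


# Illustrative normalized weights: the sample at index 1 carries half of the total weight.
weights = [1 / 18, 0.5] + [1 / 18] * 8
rng = random.Random(0)  # fixed seed only to make the sketch reproducible
indices = resample_indices(weights, rng)
print(indices)
```

The returned indices refer to rows of the current dataset; the rows they select form the modified dataset for the next stump.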


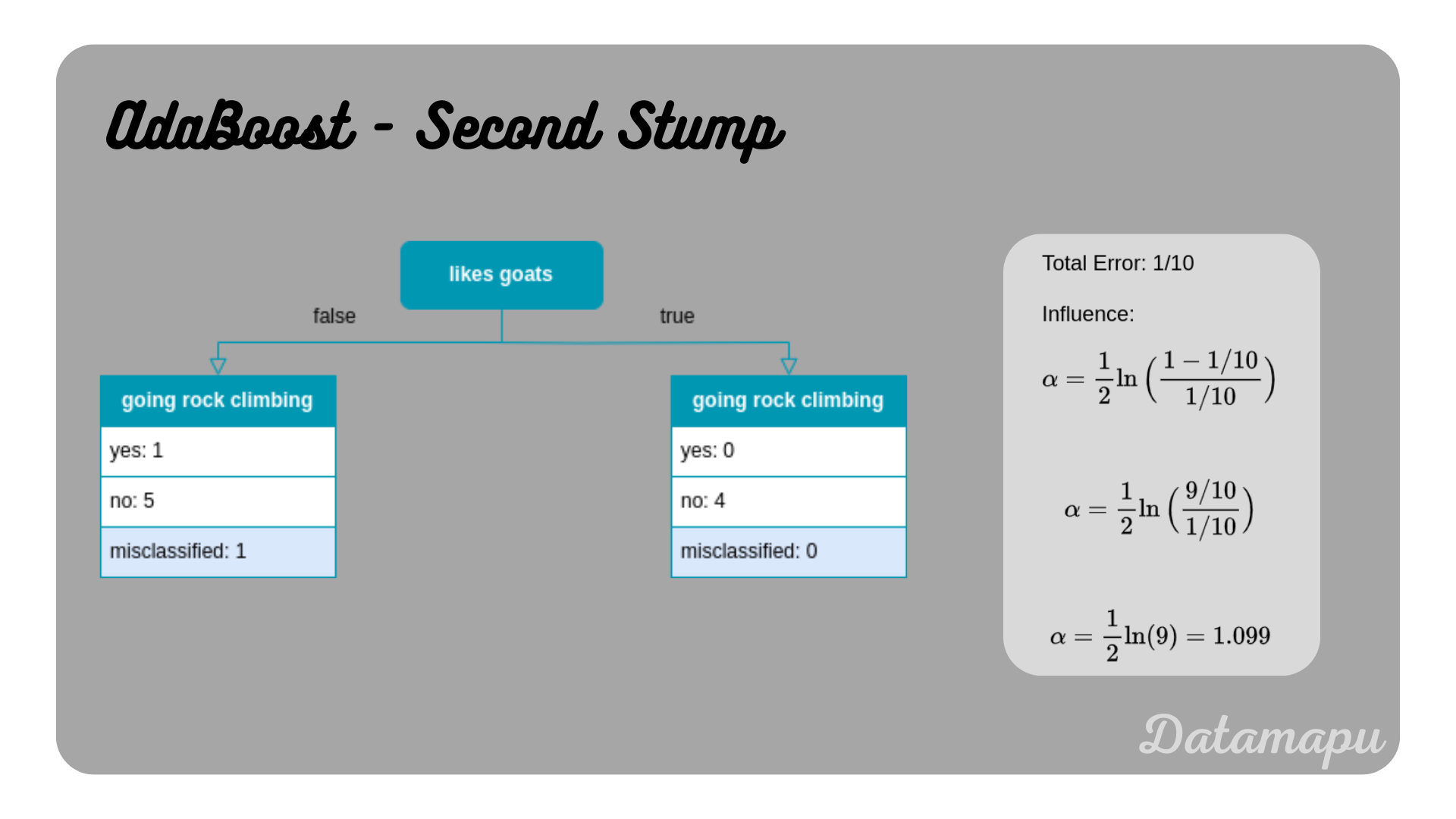

We now use this modified dataset to create the second stump. Following the steps described in Decision Trees for Classification - Example, we obtain the following decision stump. Additionally, the influence $\alpha$ of this stump is calculated.

The second stump, i.e. the second weak learner for our AdaBoost algorithm.


As in the first stump, one sample is misclassified, so we get the same value for alpha as for the first stump. Accordingly, the weights are updated using the above formula.

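For the first sample, which was classified correctly, this results in

$$w_{new} = w_{old} \cdot e^{-\alpha}.$$

The second sample was the only one misclassified, so the sign in the exponent needs to be changed:

$$w_{new} = w_{old} \cdot e^{\alpha}.$$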

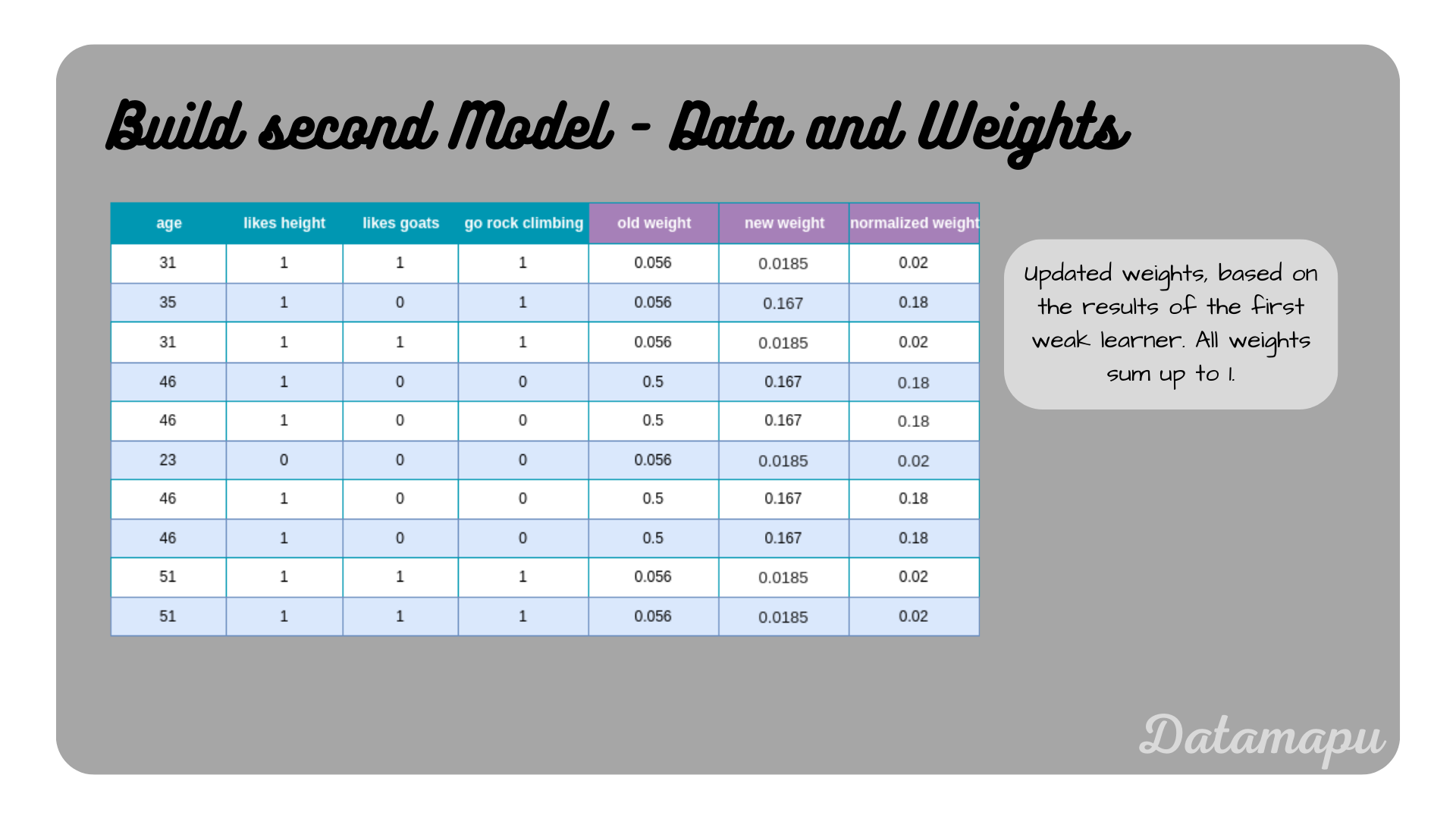

The following plot shows all samples together with their old weights, new weights, and normalized weights.

The dataset with updated weights based on the influence $\alpha$.


As in the first iteration, we convert the weights into bins.

The modified dataset with bins based on the weights.


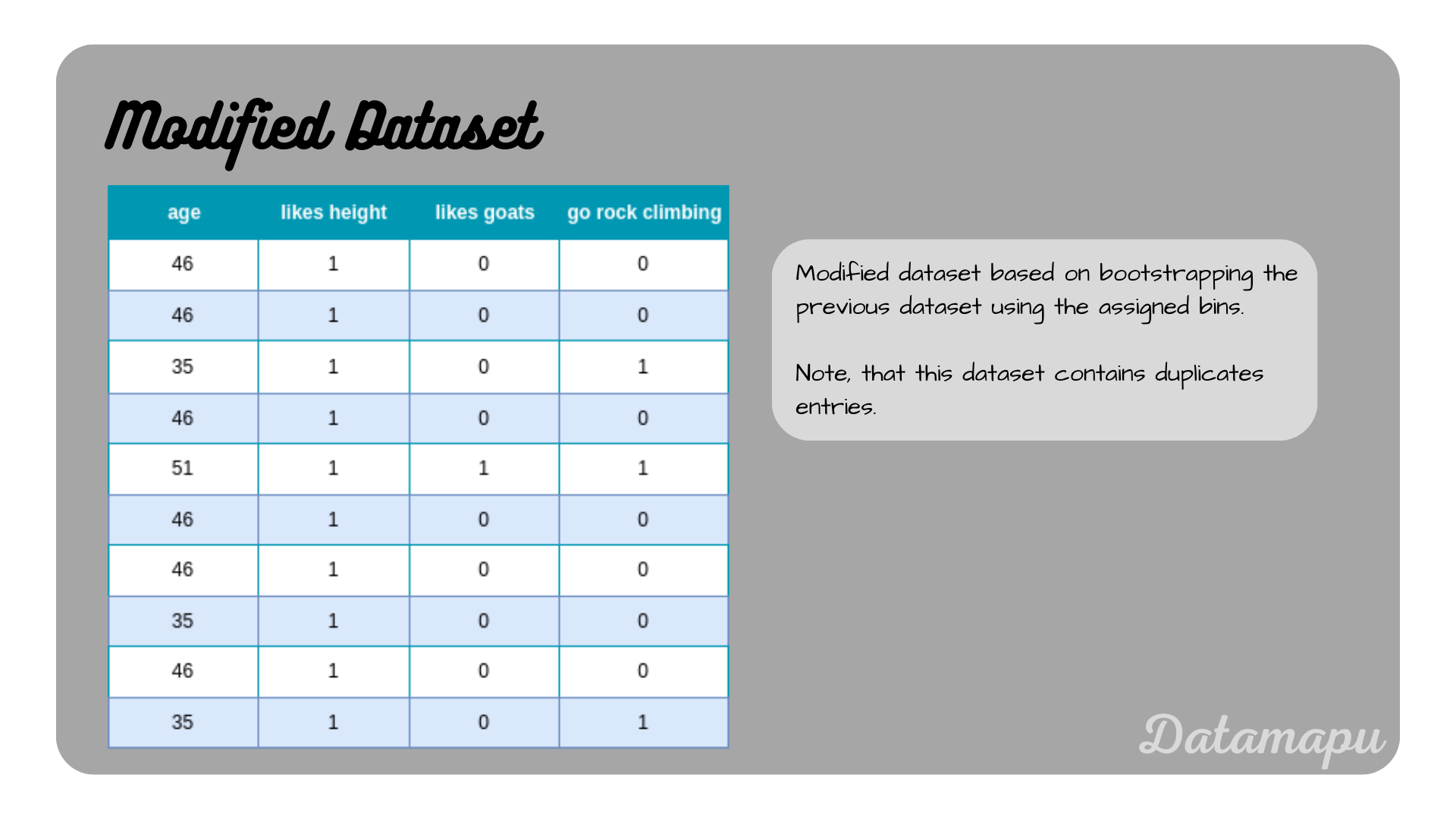

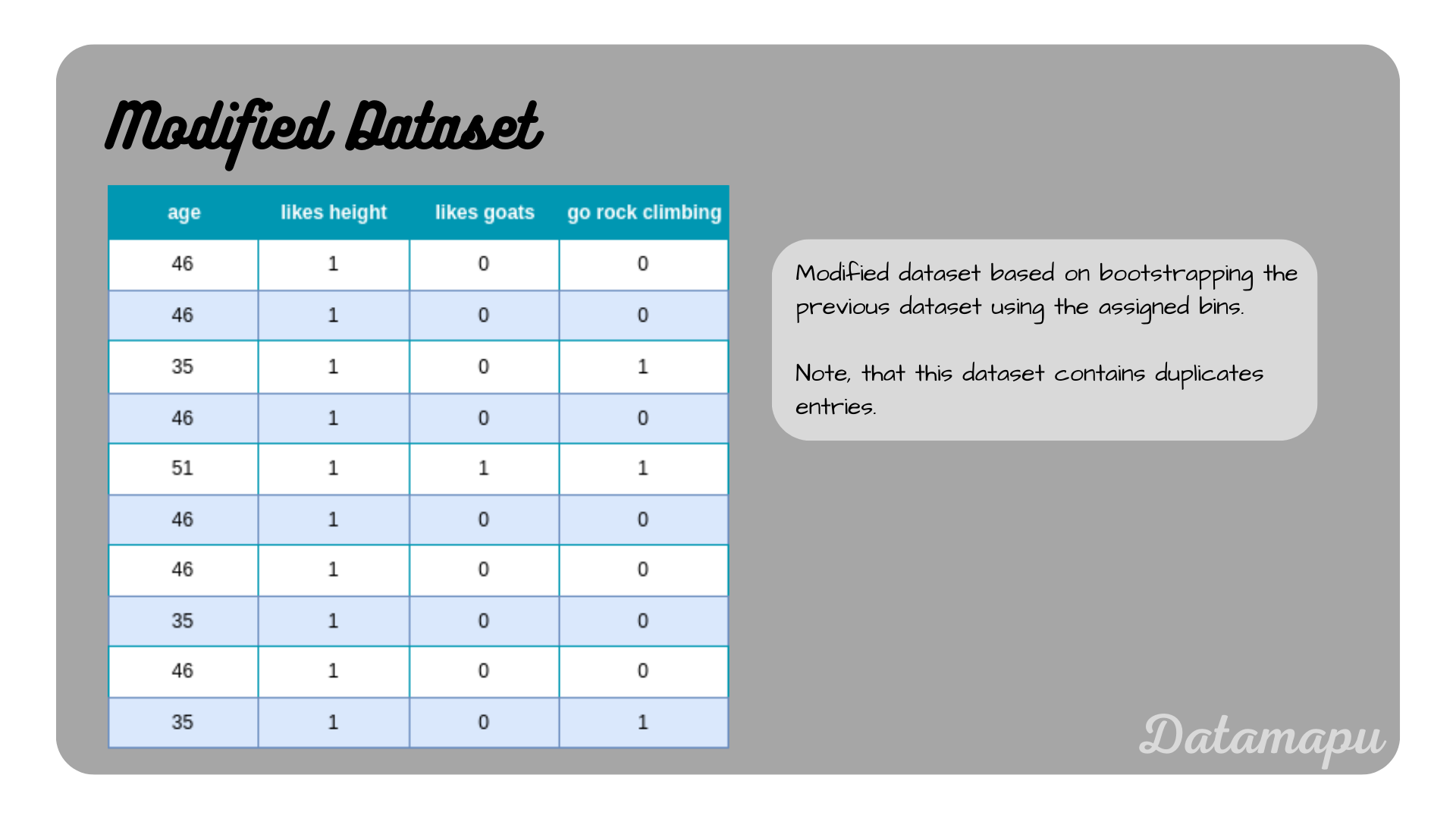

We repeat the bootstrapping and draw random numbers between $0$ and $1$ to select the samples of the next dataset.

Modified dataset based on the weights.


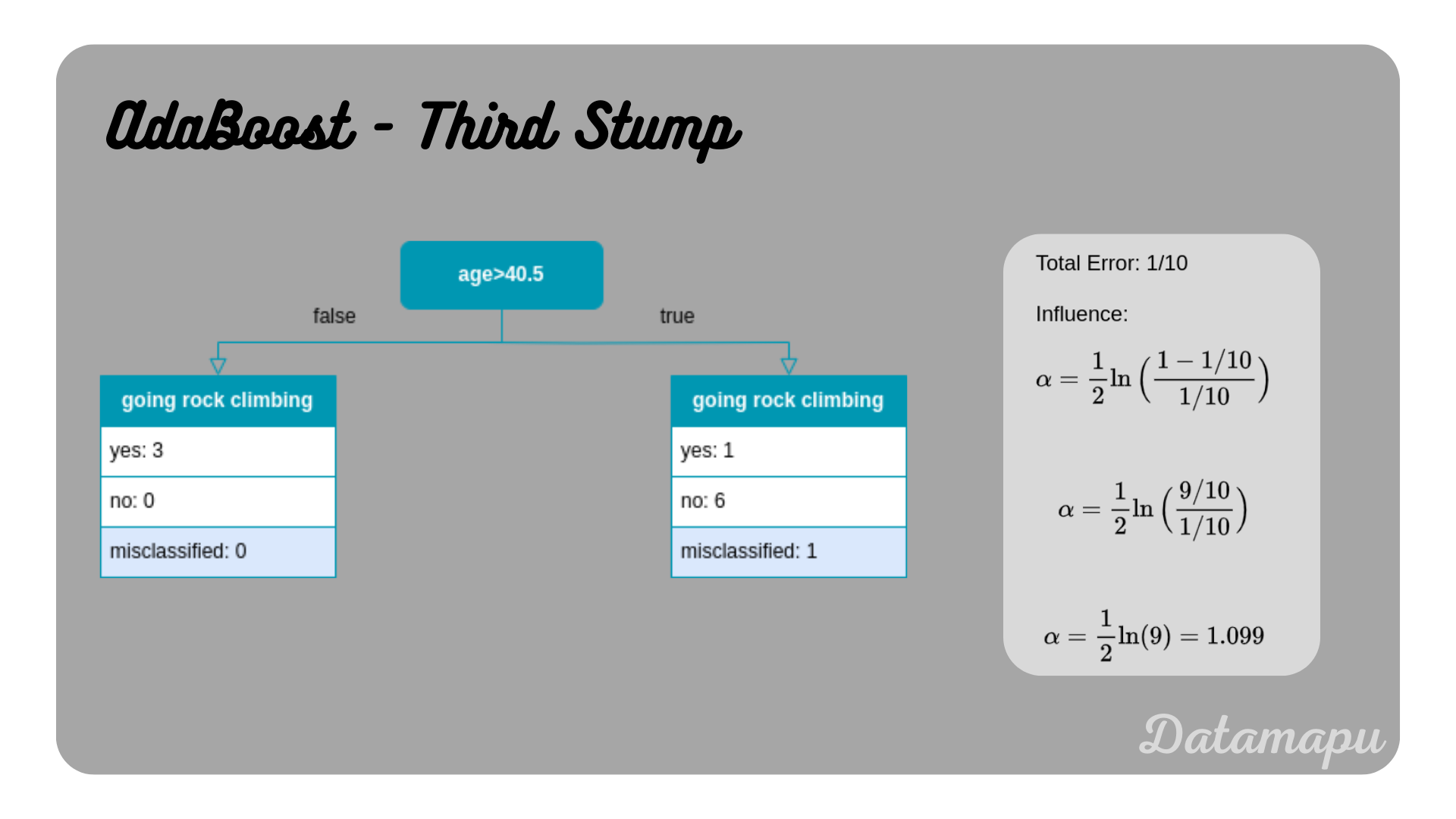

We can now fit the third and last stump of our model to this modified dataset and calculate its influence. The result is shown in the next plot.

The third stump, i.e. the last weak learner for our AdaBoost algorithm.


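Again, one sample is misclassified, which leads to the same influence $\alpha$ as for the first two stumps. With all three stumps in place, we can use the ensemble model to make a prediction for the following sample.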

| Feature | Value |
|---|---|
| age | 35 |
| likes height | 1 |
| likes goats | 0 |

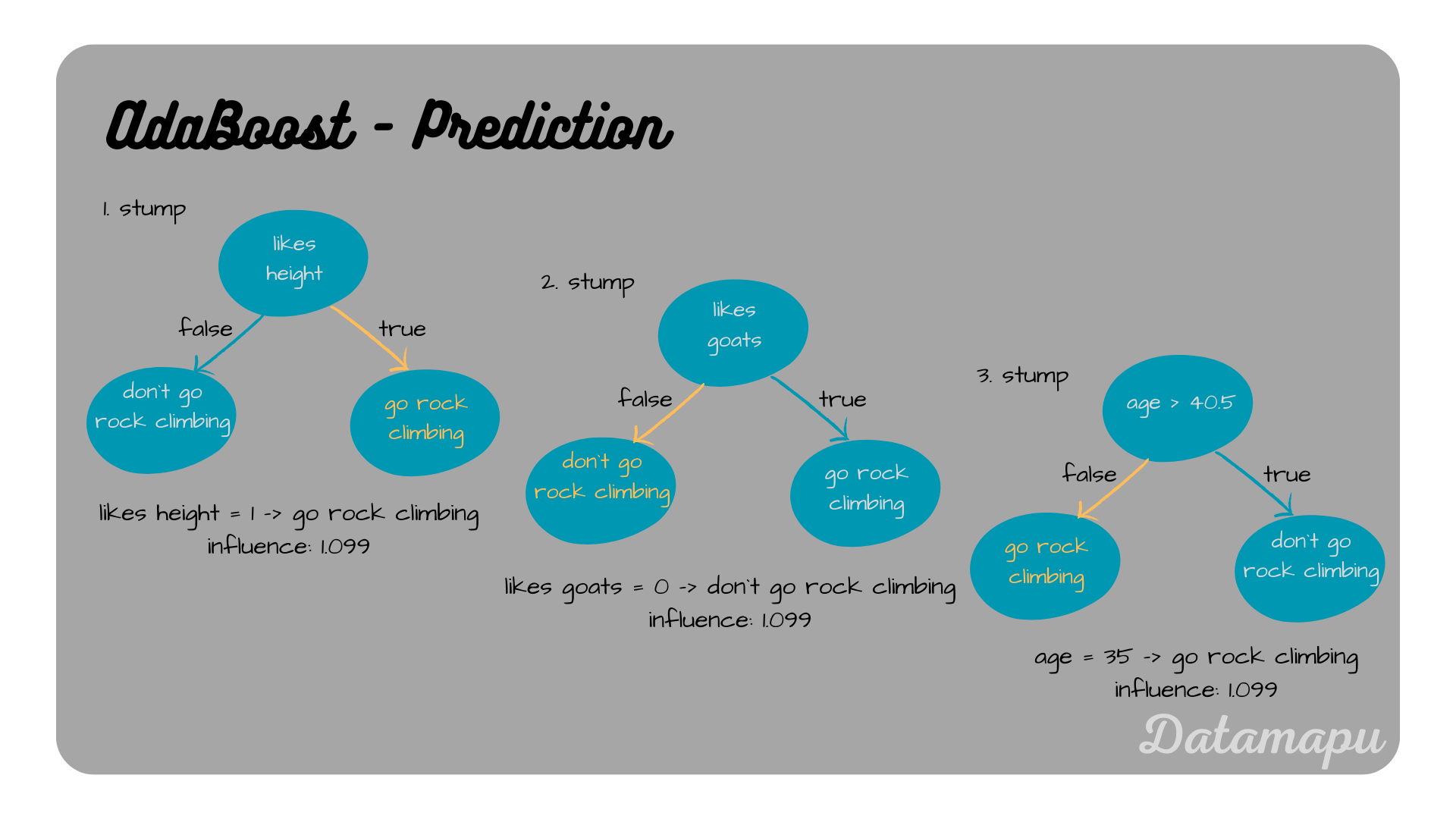

We now make predictions for each of the three stumps for this sample.

The three underlying models and their predictions for the sample.


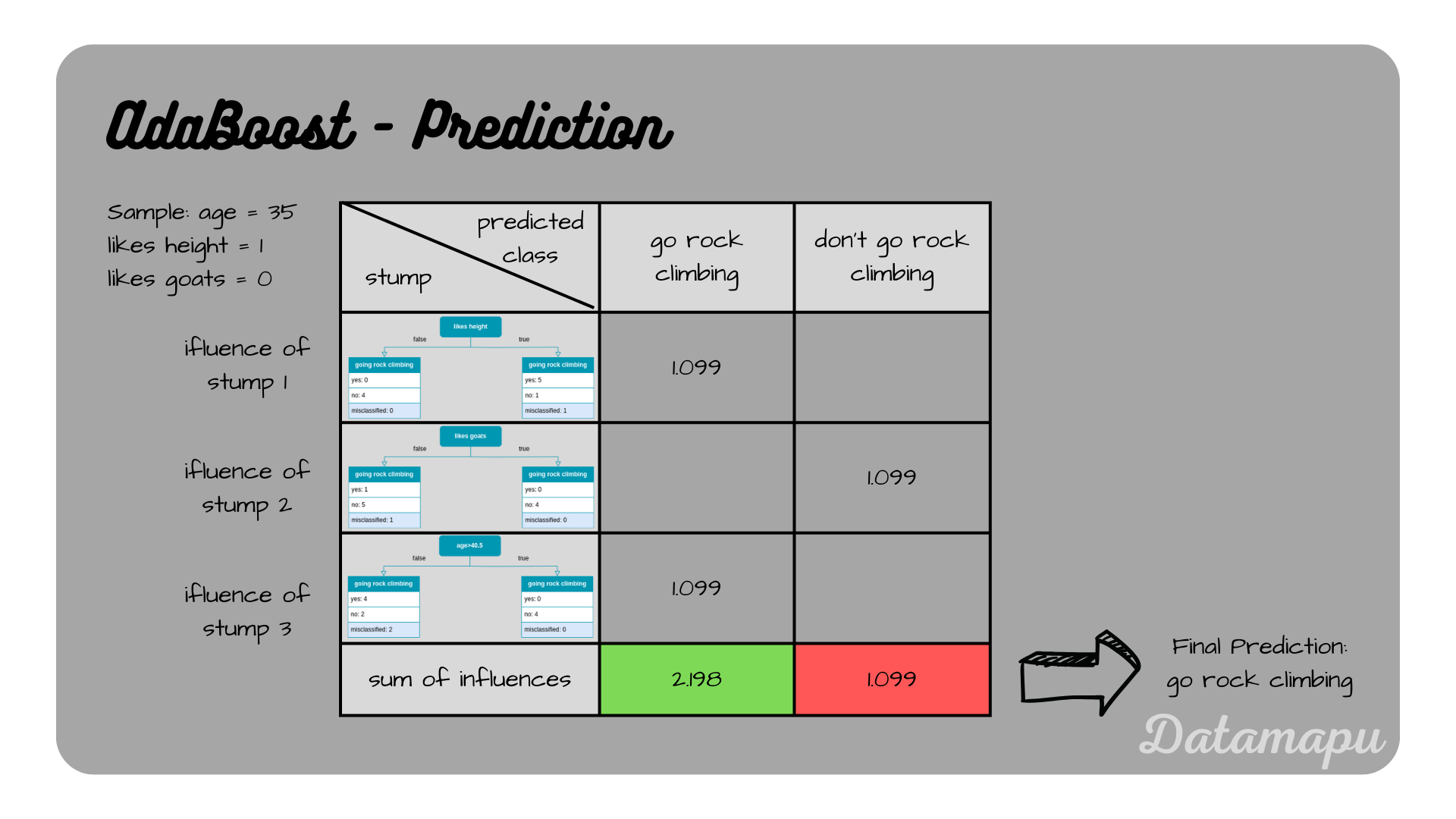

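The final prediction is obtained by adding up the influences of the trees for each predicted class. In this example the first and the third stump predict “go rock climbing” and the second stump predicts “don’t go rock climbing”. The first and the third stump each have an influence of $\alpha$, so “go rock climbing” collects a total of $2\alpha$, while “don’t go rock climbing” collects only the $\alpha$ of the second stump. The final prediction is therefore “go rock climbing”.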

The predictions and their influences to determine the final prediction.
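The weighted vote can be sketched in a few lines of Python. The helper `weighted_vote` is hypothetical, and the influence value 1.099 assumes that each stump misclassifies one of ten equally weighted samples.

```python
from collections import defaultdict


def weighted_vote(predictions, influences):
    """Sum the influences per predicted class and return the class with the largest total."""
    totals = defaultdict(float)
    for label, alpha in zip(predictions, influences):
        totals[label] += alpha
    return max(totals, key=totals.get), dict(totals)


# Predictions of the three stumps for the sample, as described in the text.
predictions = ["go rock climbing", "don't go rock climbing", "go rock climbing"]
influences = [1.099, 1.099, 1.099]  # assumed: equal influence for all three stumps

final_label, totals = weighted_vote(predictions, influences)
print(final_label)  # go rock climbing
```

Because all three influences are equal here, the vote reduces to a simple majority; with unequal influences a single strong stump could outweigh two weaker ones.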


Fit a Model in Python

We now fit the example in Python using sklearn. Note that the results are not exactly the same as in the calculation by hand, because of the randomness in the bootstrapping and because of the algorithm hyperparameter of the sklearn estimator, which at the time of writing this post has the default value SAMME.R. To fit a model, we first define our dataset as a data frame.


We use the sklearn class AdaBoostClassifier to fit a model to the data. In line with our example, we set the hyperparameter n_estimators=3, which means that three Decision Tree stumps are used for the ensemble model. Note that for a real-world project this hyperparameter would be chosen larger; the default value is 50.


To get the predictions, we can use the predict method.
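The three steps (defining the data frame, fitting the model, and predicting) can be sketched end to end as follows. The feature values and labels in this data frame are hypothetical placeholders chosen for illustration, not the values shown in the plots of this post, and the column names are assumptions as well.

```python
import pandas as pd
from sklearn.ensemble import AdaBoostClassifier

# Hypothetical placeholder data (NOT the values from the plots in this post).
df = pd.DataFrame({
    "age": [23, 31, 35, 35, 42, 43, 45, 46, 46, 51],
    "likes_height": [0, 1, 1, 0, 0, 1, 0, 1, 1, 1],
    "likes_goats": [0, 1, 0, 0, 0, 1, 1, 1, 0, 1],
    "go_rock_climbing": [0, 1, 1, 0, 0, 1, 0, 1, 0, 1],
})

X = df[["age", "likes_height", "likes_goats"]]
y = df["go_rock_climbing"]

# The default base estimator is a Decision Tree of depth one, i.e. a stump.
model = AdaBoostClassifier(n_estimators=3, random_state=42)
model.fit(X, y)

# Predict on the training data, as in the example.
predictions = model.predict(X)
print(predictions)
```

The random_state is fixed only to make the sketch reproducible. The algorithm hyperparameter is left at its default, which depends on the installed sklearn version.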


For this example, the predictions are

Summary

In this article, we illustrated in detail how to develop an AdaBoost model for a classification task. We used a simplified example to make the calculations easy to follow. We followed the most standard way of developing an AdaBoost ensemble model, that is, the underlying base models were Decision Tree stumps. You can find a similar example for a regression task in the related article AdaBoost for Regression - Example and a detailed explanation and visualization of the algorithm in AdaBoost - Explained.
