Gradient Boost for Classification Example

Introduction

In this post, we develop a Gradient Boosting model for a binary classification task. We focus on the calculations of each individual step for a specific example. For a more general explanation of the algorithm and the derivation of the formulas for the individual steps, please refer to Gradient Boost for Classification - Explained and Gradient Boost for Regression - Explained. Additionally, we show a simple example of how to apply Gradient Boosting for classification in Python.

Data

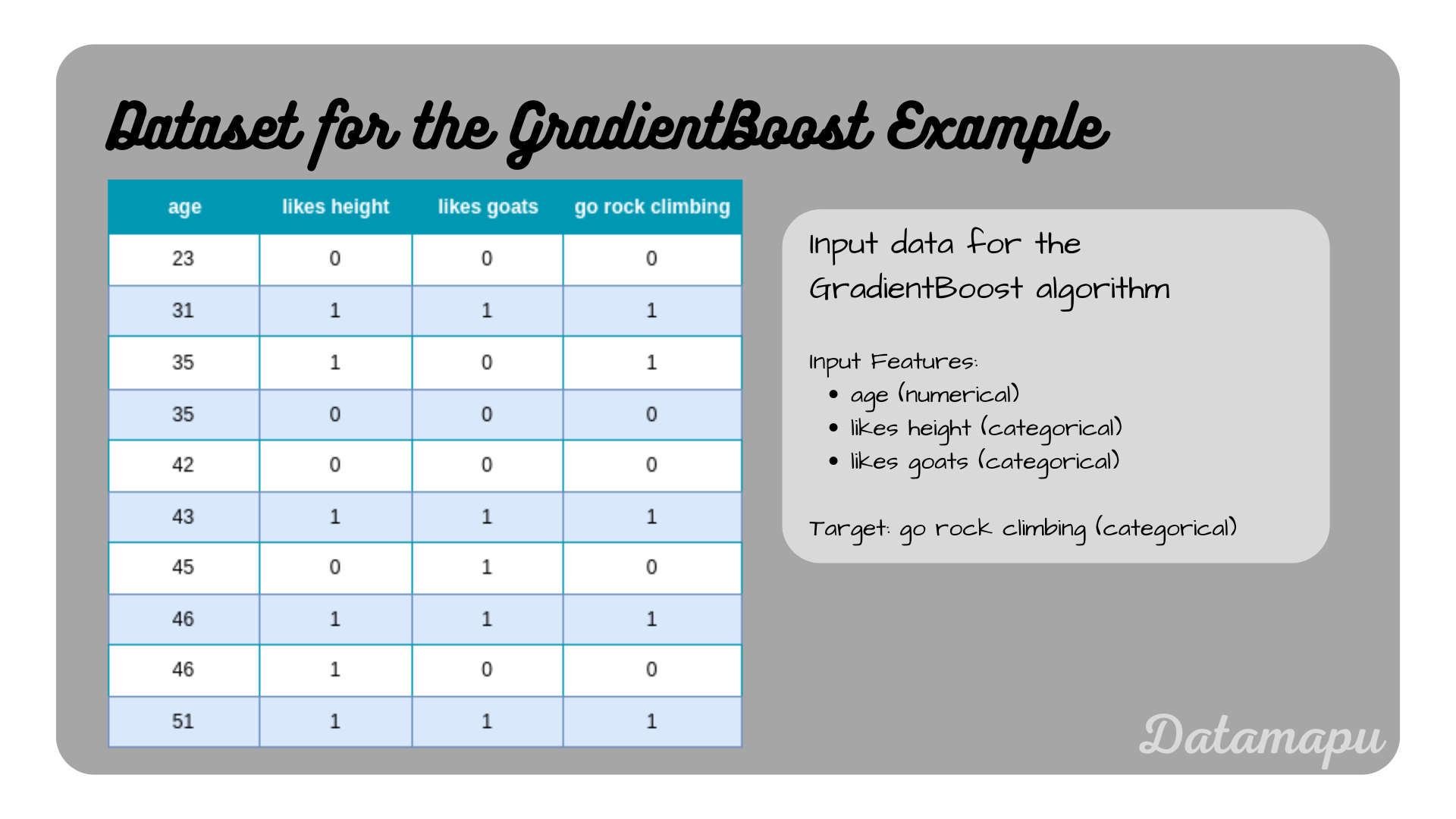

The dataset used for this example contains 10 samples and describes the task of deciding whether a person should go rock climbing, depending on their age and on whether they like heights and goats. The same dataset was used in the articles Decision Trees for Classification - Example and Adaboost for Classification - Example.

The data used in this post.

Build the Model

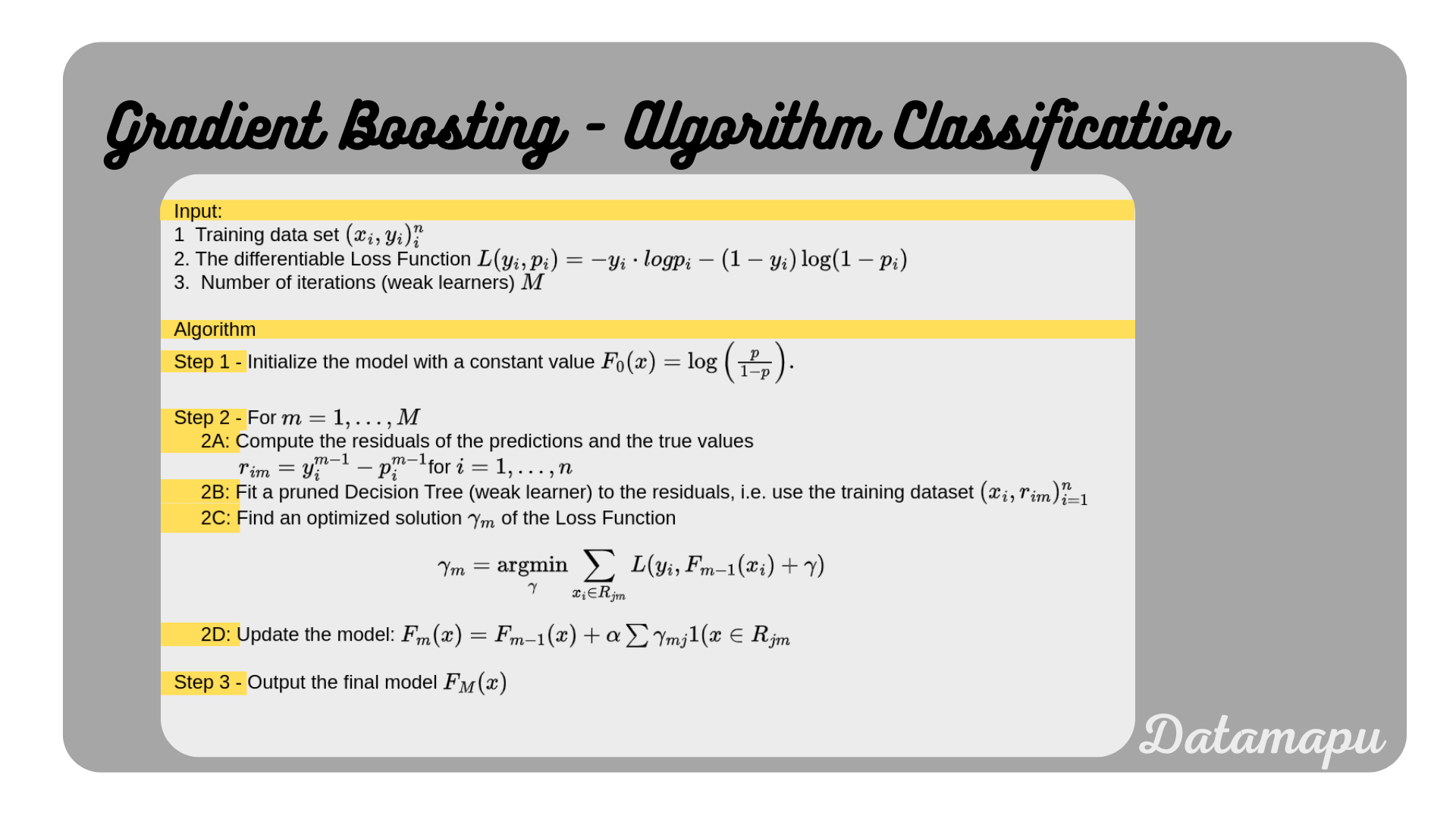

We build a Gradient Boosting model using pruned Decision Trees as weak learners for the above dataset. The steps to be performed are summarized in the plot below. For a more detailed explanation please refer to Gradient Boost for Classification - Explained.

Gradient Boosting Algorithm simplified for a binary classification task.

Step 1 - Initialize the model with a constant value -

For the first initialization, we need the probability of “go rock climbing”. In the above dataset, we have five

The initial log-loss in this case is

To check the performance of our model, we use the accuracy score. We calculate the accuracy after each step, to see how the model evolves. For the initial predictions, we first calculate the probabilities.

As a threshold for predicting a

The accuracy is calculated as the ratio of correctly predicted values to the total number of samples.
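The initialization can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the label vector `y` is illustrative and is not the data from the table above, the log-loss is taken as a sum over samples, and a threshold of 0.5 is assumed for turning probabilities into class predictions.

```python
import numpy as np

# Illustrative labels only (1 = "go rock climbing"); NOT the values from the post's table.
y = np.array([1, 1, 1, 1, 1, 1, 0, 0, 0, 0])

def sigmoid(f):
    """Convert log-odds into probabilities."""
    return 1.0 / (1.0 + np.exp(-f))

def log_loss_sum(y, p):
    """Log-loss summed over all samples (an averaged version is also common)."""
    return -np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))

# Step 1: the constant initial prediction is the log-odds of the positive class.
p_positive = y.mean()
f0 = np.full(len(y), np.log(p_positive / (1 - p_positive)))

# Initial probabilities, loss, predictions, and accuracy.
p0 = sigmoid(f0)
initial_loss = log_loss_sum(y, p0)
pred0 = (p0 >= 0.5).astype(int)   # threshold of 0.5 is an assumption
accuracy0 = (pred0 == y).mean()

print(f0[0], p0[0], initial_loss, accuracy0)
```

Because every sample receives the same initial probability, the initial model predicts the same class for all samples, so its accuracy equals the share of that class in the data.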

Step 2 - For

In this step, we sequentially fit weak learners (pruned Decision Trees) to the residuals. The number of loops is the number of weak learners considered. Because the data considered in this post is so simple, we will only loop twice.

First loop

Fit the first Decision Tree.

2A. Compute the residuals between the predictions and the true observations.

The residuals are calculated as

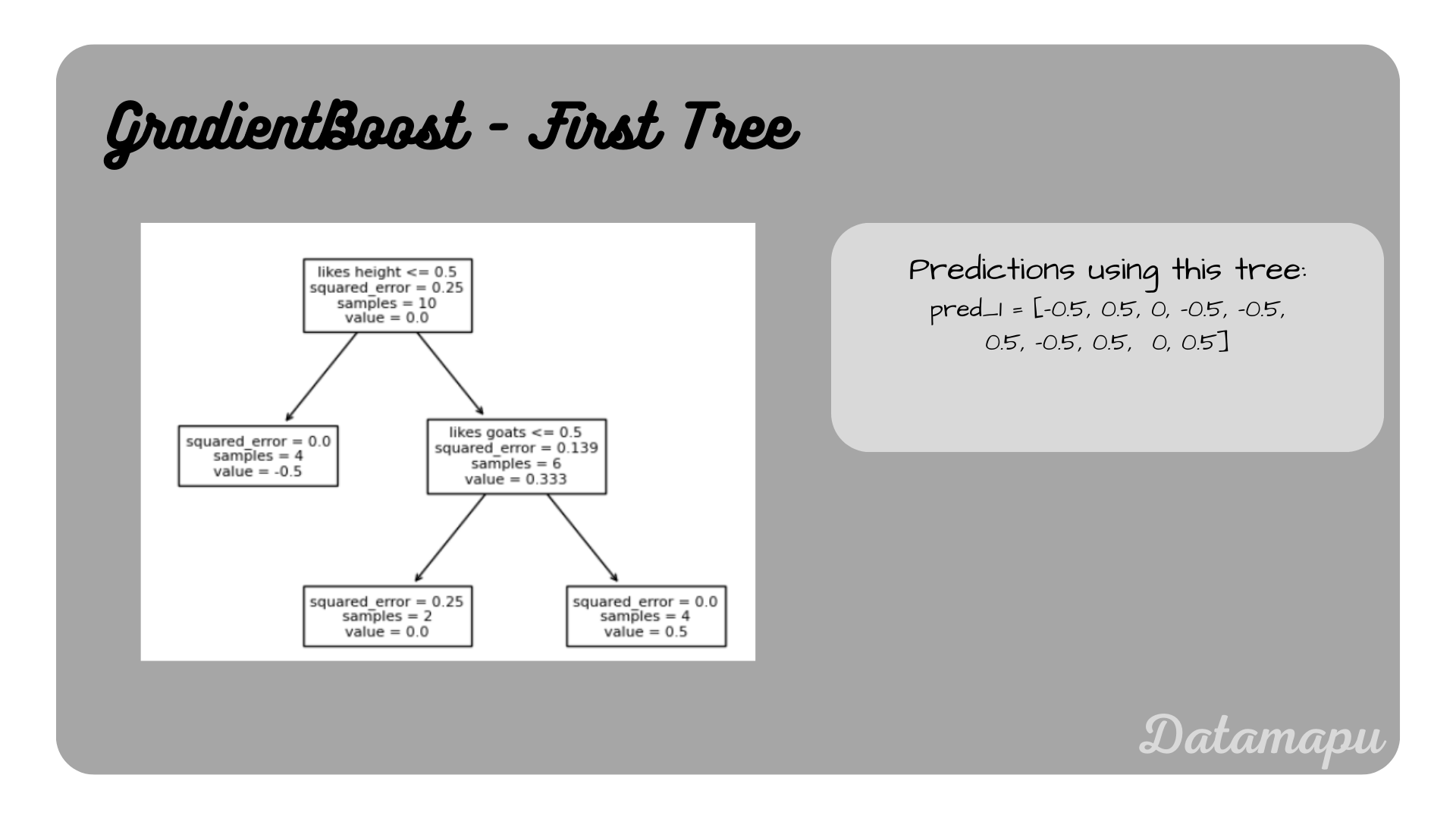
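As a small illustration of step 2A: for the log-loss, the residual of each sample is the difference between the true label and the currently predicted probability, i.e. `y - p`. The sketch below uses illustrative labels, not the data from the post.

```python
import numpy as np

# Illustrative labels only; NOT the values from the post's table.
y = np.array([1, 1, 1, 1, 1, 1, 0, 0, 0, 0])

# Current predicted probabilities: after initialization they are all equal
# to the share of positive samples.
p = np.full(len(y), y.mean())

# Residuals: observed label minus predicted probability.
residuals = y - p
print(residuals)
```

Positive samples get a positive residual and negative samples a negative one, so the next weak learner is pushed to raise the log-odds for the former and lower it for the latter.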

2B. and 2C. Fit a model (weak learner) to the residuals and find the optimized solution.

In this step, we use the residuals calculated above to fit a Decision Tree. We prune the tree to use it as a weak learner, with a maximum depth of two. We don’t develop the Decision Tree by hand, but use the sklearn class DecisionTreeRegressor, since the residuals are continuous values rather than class labels. For a step-by-step example of building a Decision Tree for Classification, please refer to the article Decision Trees for Classification - Example.

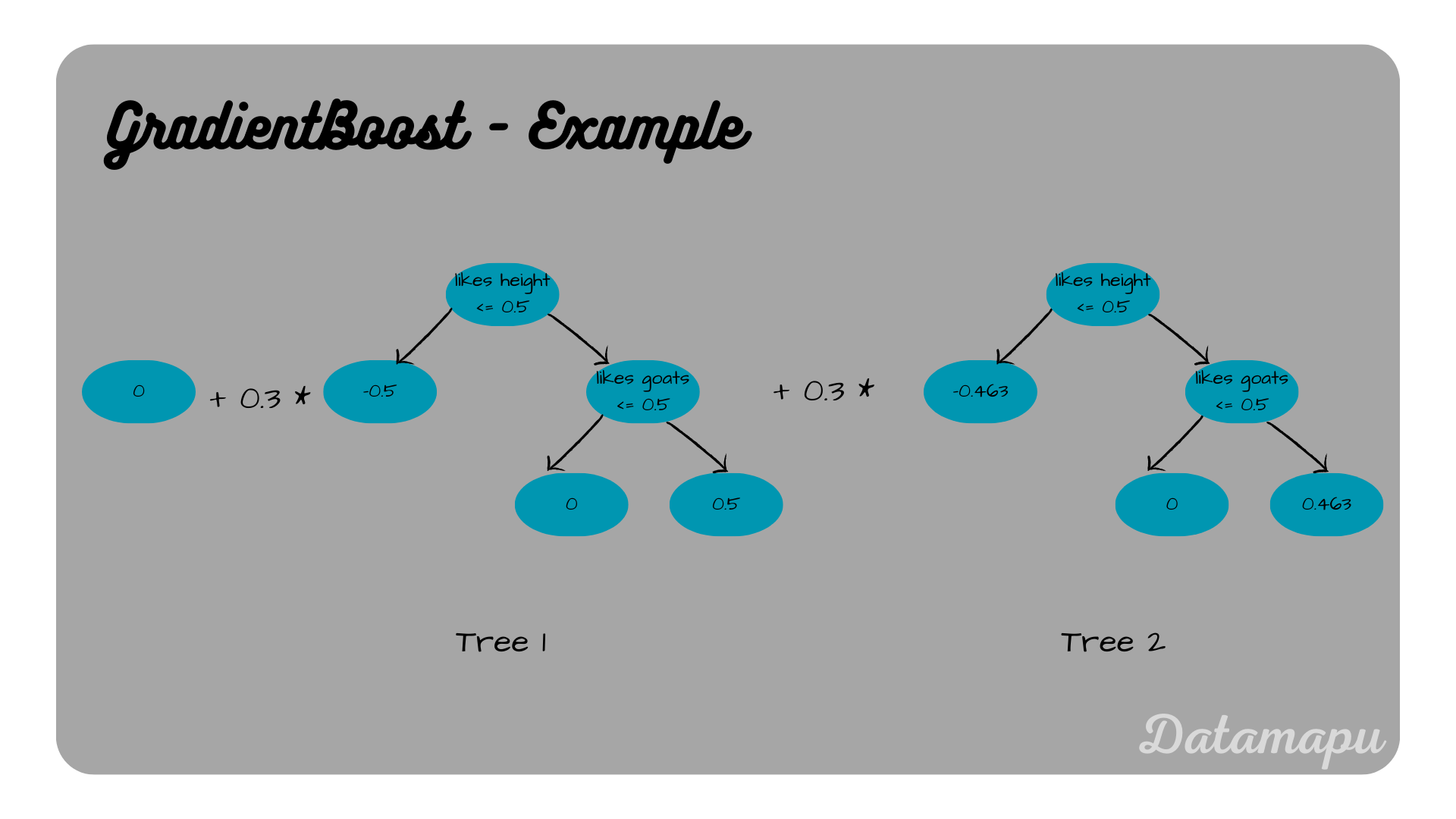

First Decision Tree, i.e. first weak learner

The resulting predictions are

2D. Update the model.

We update the model using the predictions of the above developed Decision Tree. We use a learning rate of

Using the calculated numbers, this leads to

The probabilities resulting from these log-odds are

With the threshold of

The accuracy after this step is
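The update in step 2D can be sketched as below. All numbers are illustrative: the labels and leaf values do not come from the post's data, and the learning rate of 0.3 and the threshold of 0.5 are assumptions made for this sketch.

```python
import numpy as np

def sigmoid(f):
    """Convert log-odds into probabilities."""
    return 1.0 / (1.0 + np.exp(-f))

# Illustrative labels, previous log-odds, and tree outputs (NOT the post's values).
y = np.array([1, 1, 1, 1, 1, 1, 0, 0, 0, 0])
f_prev = np.full(len(y), np.log(0.6 / 0.4))
gamma = np.array([0.4 / 0.24] * 6 + [-0.6 / 0.24] * 4)

learning_rate = 0.3   # assumed value for this sketch

# 2D: move the log-odds a small step in the direction given by the tree.
f_new = f_prev + learning_rate * gamma

# Convert to probabilities, apply the threshold, and compute the accuracy.
p_new = sigmoid(f_new)
pred = (p_new >= 0.5).astype(int)   # threshold of 0.5 is an assumption
accuracy = (pred == y).mean()

print(p_new, pred, accuracy)
```

The learning rate scales down the contribution of each tree, so that the model approaches the targets gradually instead of jumping to the tree's output in a single step.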

Second loop

Fit the second Decision Tree.

2A. Compute the residuals between the predictions and the true observations.

The residuals are calculated as

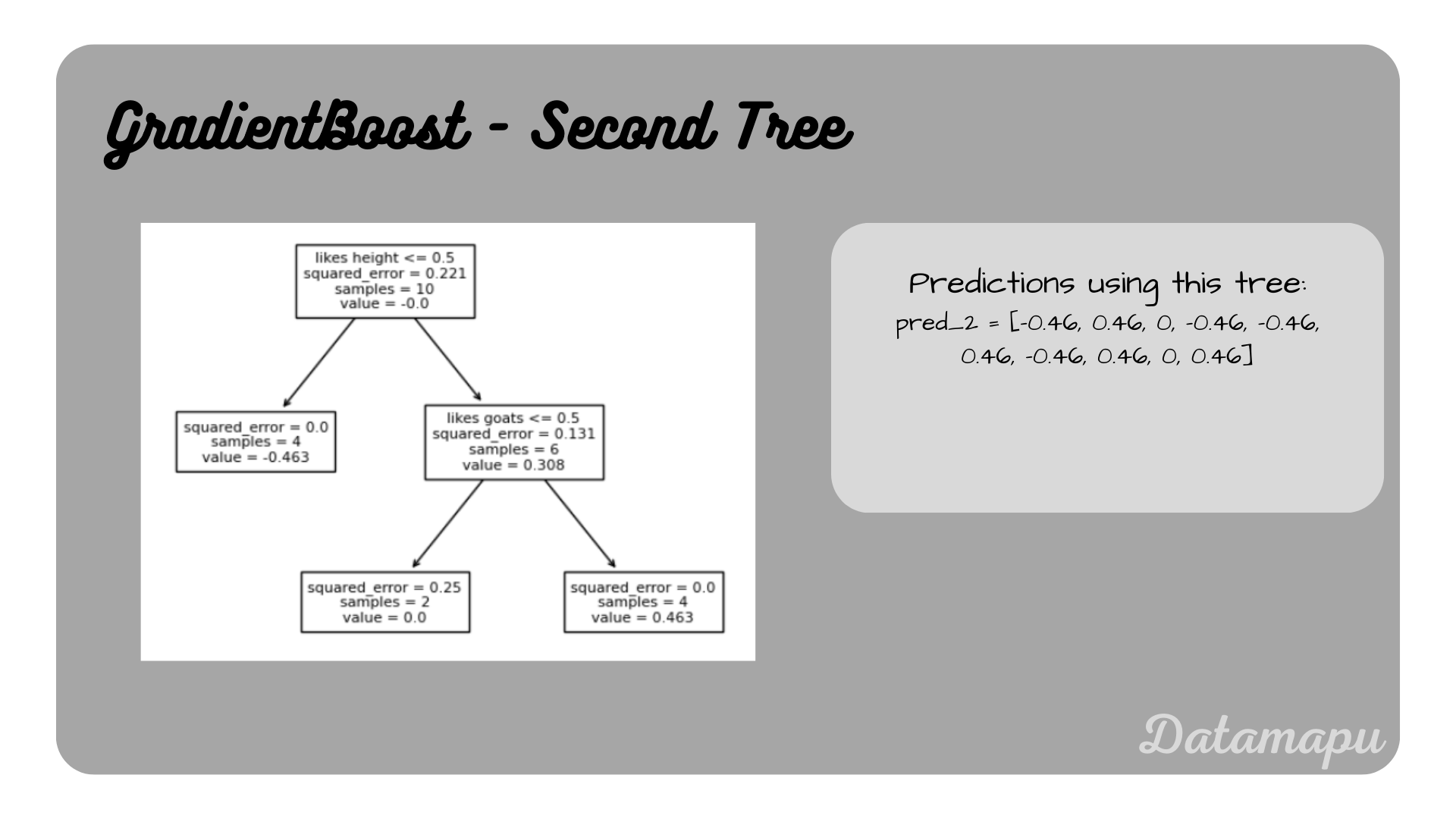

2B. and 2C. Fit a model (weak learner) to the residuals and find the optimized solution.

Now we fit the second Decision Tree with the residuals

Second Decision Tree, i.e. second weak learner

The resulting predictions are

2D. Update the model.

We again update the model using the predictions of this second Decision Tree with a learning rate of

Using the calculated numbers, this leads to

The probabilities resulting from these log-odds are

With the threshold of

The accuracy after this step is

That is, this second step didn’t improve the accuracy of our model.

Step 3 - Output final model

The final model is then defined by the output of the last step

Using the input features

Final model.

Accordingly the final probabilities
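Putting the steps together, the whole procedure can be sketched as a short loop. This is an illustrative sketch, not the exact computation of this post: the function names `fit_boosting` and `predict_proba` are hypothetical, the data is made up, and the learning rate of 0.3 and the leaf-value formula are assumptions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def sigmoid(f):
    return 1.0 / (1.0 + np.exp(-f))

def fit_boosting(X, y, n_rounds=2, learning_rate=0.3, max_depth=2):
    """Hypothetical helper: returns the initial log-odds and the fitted stages."""
    # Step 1: constant initial prediction (log-odds of the positive class).
    p0 = y.mean()
    f0 = np.log(p0 / (1 - p0))
    f = np.full(len(y), f0)
    stages = []
    for _ in range(n_rounds):
        # 2A: residuals between labels and current probabilities.
        p = sigmoid(f)
        residuals = y - p
        # 2B: fit a shallow regression tree to the residuals.
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)
        # 2C: one output value per leaf (assumed formula for log-loss).
        leaf_ids = tree.apply(X)
        leaf_values = {}
        for leaf in np.unique(leaf_ids):
            mask = leaf_ids == leaf
            leaf_values[leaf] = residuals[mask].sum() / (p[mask] * (1 - p[mask])).sum()
        # 2D: update the log-odds.
        f = f + learning_rate * np.array([leaf_values[l] for l in leaf_ids])
        stages.append((tree, leaf_values))
    return f0, stages

def predict_proba(X, f0, stages, learning_rate=0.3):
    """Step 3: the final model is the initial value plus all scaled tree outputs."""
    f = np.full(X.shape[0], f0)
    for tree, leaf_values in stages:
        f = f + learning_rate * np.array([leaf_values[l] for l in tree.apply(X)])
    return sigmoid(f)

# Hypothetical data: columns are age, likes height, likes goats.
X = np.array([
    [20, 1, 0], [25, 1, 1], [30, 1, 0], [35, 0, 1], [40, 1, 1],
    [45, 1, 0], [50, 0, 0], [55, 0, 1], [60, 0, 0], [65, 1, 0],
])
y = np.array([1, 1, 1, 1, 1, 1, 0, 0, 0, 0])

f0, stages = fit_boosting(X, y)
proba = predict_proba(X, f0, stages)
print(proba)
```

Keeping the trees together with their leaf values makes it possible to score new samples later, since `tree.apply` maps any input to one of the leaves seen during training.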

Fit a Model in Python

In this section, we show how to fit a GradientBoostingClassifier from the sklearn library to the above data. In sklearn, the weak learners are fixed to Decision Trees and cannot be changed. The GradientBoostingClassifier offers a set of hyperparameters that can be changed and tested to improve the model. Note that we are considering a very simplified example. In this case, we set the number of weak learners (n_estimators) to

We read the data into a Pandas dataframe.


Now we can fit the model using the above defined hyperparameters.


The GradientBoostingClassifier has a predict method, which we can use to get the predictions and the score method to calculate the accuracy.
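A minimal sketch of this workflow is shown below. The dataframe values and column names are hypothetical stand-ins for the dataset of this post, and the hyperparameter values (two estimators, a maximum depth of two, a learning rate of 0.3) are assumptions chosen to mirror the manual example.

```python
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier

# Hypothetical data; NOT the values from the post's table.
df = pd.DataFrame({
    "age": [20, 25, 30, 35, 40, 45, 50, 55, 60, 65],
    "likes_height": [1, 1, 1, 0, 1, 1, 0, 0, 0, 1],
    "likes_goats": [0, 1, 0, 1, 1, 0, 0, 1, 0, 0],
    "go_rock_climbing": [1, 1, 1, 1, 1, 1, 0, 0, 0, 0],
})

X = df[["age", "likes_height", "likes_goats"]]
y = df["go_rock_climbing"]

# Assumed hyperparameters for this sketch.
clf = GradientBoostingClassifier(
    n_estimators=2,
    max_depth=2,
    learning_rate=0.3,
    random_state=0,
)
clf.fit(X, y)

# Predicted classes and accuracy on the training data.
y_pred = clf.predict(X)
accuracy = clf.score(X, y)
print(y_pred, accuracy)
```

Here `score` returns the mean accuracy on the given data, so it is evaluated on the same samples the model was trained on, which is acceptable for a toy example but not for judging a real model.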


We get the predictions

Summary

In this post, we went through the calculations of the individual steps to build a Gradient Boosting model for a binary classification problem. A simple example was chosen to make explicit calculations feasible. We then showed how to fit a model using Python. In real-world examples, libraries like sklearn are used to develop such models. The development is usually not as straightforward as in this simplified example; instead, a set of hyperparameters is systematically tested to optimize the solution. For a more detailed explanation of the algorithm, please refer to the related articles Gradient Boost for Classification - Explained, Gradient Boost for Regression - Explained, and Gradient Boost for Regression - Example. For a more practical approach, please check the notebook on Kaggle, where a Gradient Boosting model for a regression problem is developed.
