Gradient Boost for Regression - Example

Introduction

In this post, we will go through the development of a Gradient Boosting model for a regression problem, considering a simplified example. We calculate the individual steps in detail, which are defined and explained in the separate post Gradient Boost for Regression - Explained. Please refer to this post for a more general and detailed explanation of the algorithm.

Data

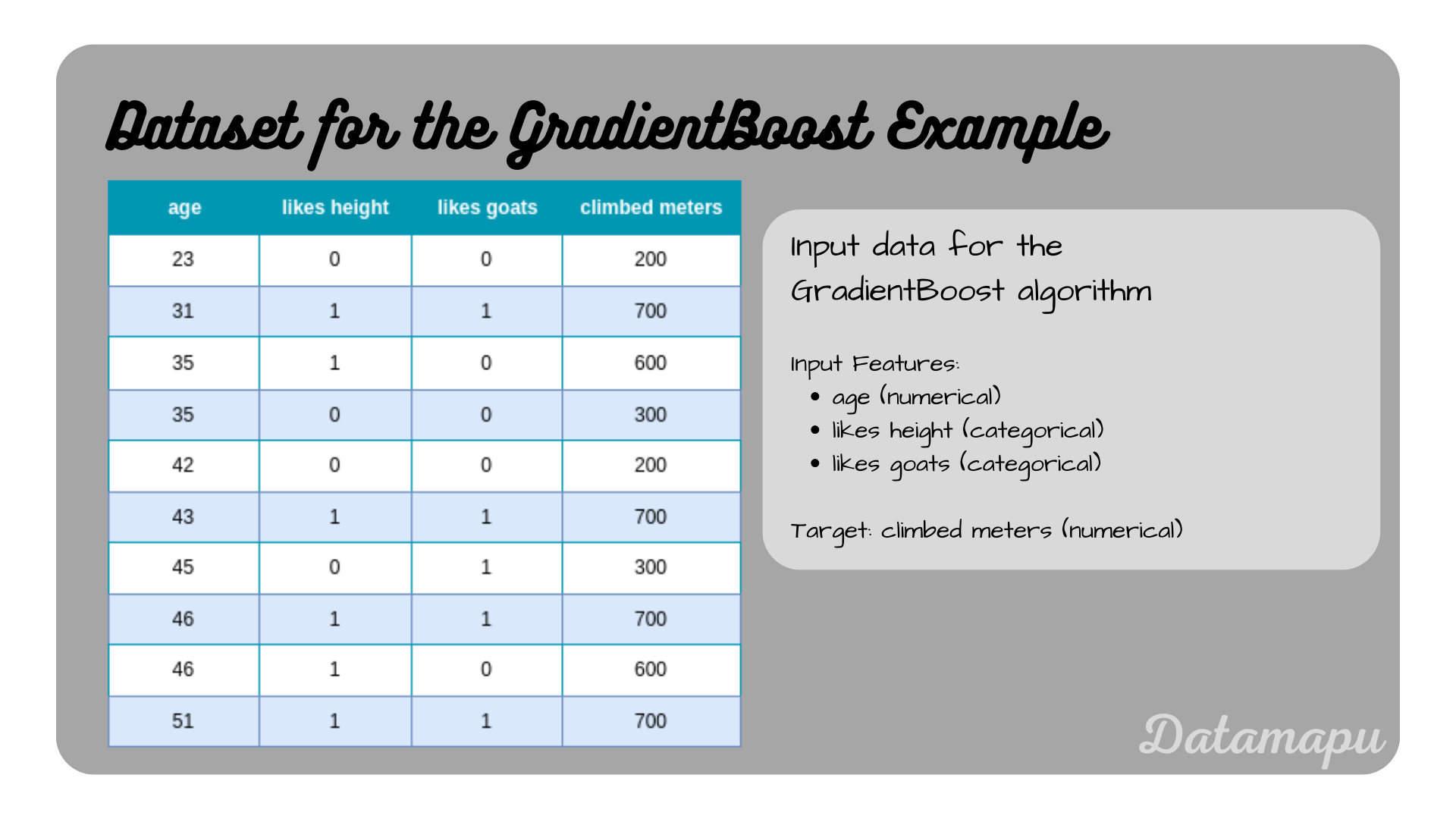

We will use a simplified dataset of only 10 samples. It describes how many meters a person has climbed, depending on their age, whether they like heights, and whether they like goats. We used the same data in previous posts, such as Decision Trees for Regression - Example and Adaboost for Regression - Example.

The data used in this post.


Build the Model

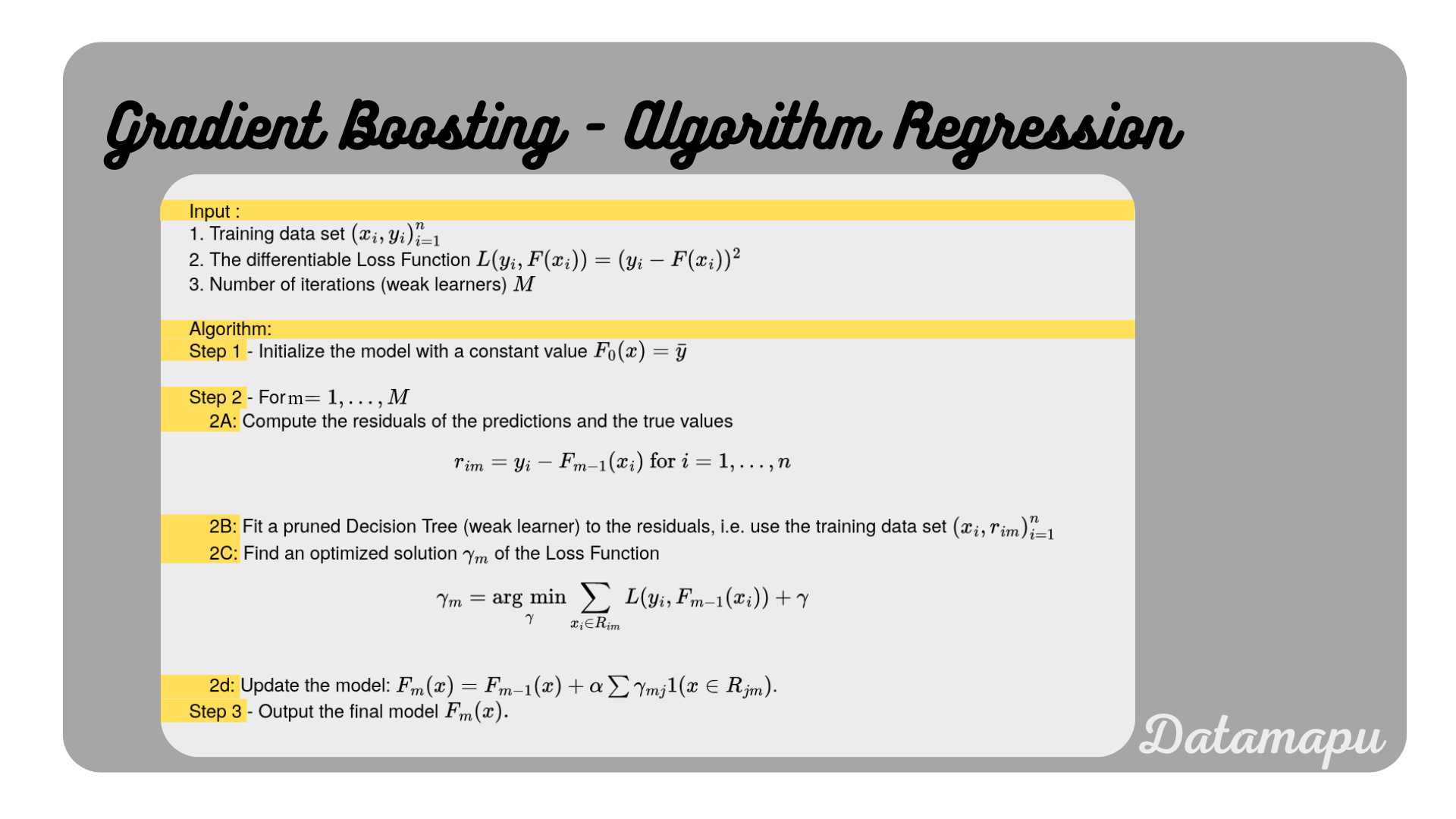

We build a Gradient Boost model with pruned Decision Trees as weak learners using the above dataset. To do so, we follow the steps of the simplified algorithm summarized in the plot. For a more detailed explanation, please refer to Gradient Boost for Regression - Explained.

Gradient Boosting Algorithm simplified for a regression task.


Step 1 - Initialize the model with a constant value

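The model is initialized with the mean of all target values $y_i$, which is the constant that minimizes the squared error:

$$F_0(x) = \frac{1}{n}\sum_{i=1}^{n} y_i,$$

with $n = 10$ samples in our dataset.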

To evaluate how the model evolves, we calculate the mean squared error (MSE) after each iteration.
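A minimal NumPy sketch of Step 1 and of the MSE used to track the progress could look as follows. The targets are random synthetic stand-ins, not the climbing data of this post, and the function names are illustrative only. The final check confirms that no other constant achieves a lower MSE than the mean.

```python
import numpy as np


def initial_prediction(y):
    """Step 1: the constant model F_0 is the mean of the targets."""
    return float(np.mean(y))


def mse(y, y_pred):
    """Mean squared error; y_pred may be a scalar or an array shaped like y."""
    y = np.asarray(y, dtype=float)
    y_pred = np.broadcast_to(np.asarray(y_pred, dtype=float), y.shape)
    return float(np.mean((y - y_pred) ** 2))


# Synthetic stand-in targets (hypothetical, NOT the dataset of this post)
rng = np.random.default_rng(0)
y = rng.uniform(100, 1000, size=10)

f0 = initial_prediction(y)
mse0 = mse(y, f0)

# For any constant c: MSE(c) = MSE(mean) + (c - mean)^2 >= MSE(mean)
candidates = np.linspace(y.min(), y.max(), 101)
assert all(mse(y, c) >= mse0 - 1e-9 for c in candidates)
print(f"F_0 = {f0:.2f}, MSE_0 = {mse0:.2f}")
```

Note that the MSE of the constant mean prediction is simply the (population) variance of the targets.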

The MSE of this first estimate $F_0$ is

$$\text{MSE}_0 = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - F_0(x_i)\right)^2.$$

Step 2 - For $m = 1$ to $M$

The second step is a loop that sequentially updates the model by fitting a weak learner, in our case a pruned Decision Tree, to the residuals between the target values and the previous predictions. The number of loops equals the number of weak learners. Because the data in this post is so simple, we only loop twice, i.e. $M = 2$.

First loop

2A. Compute the residuals between the predictions and the true observations.

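With the squared-error loss, the residuals are the differences between the true observations and the predictions of the initial model:

$$r_{i1} = y_i - F_0(x_i), \quad i = 1, \ldots, n.$$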

This results in
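The residuals of step 2A are the negative gradient of the loss with respect to the prediction. Assuming the loss $L(y, F) = \frac{1}{2}(y - F)^2$ (the factor $\frac{1}{2}$ is a common convention that we assume here), the sketch below computes $r_{i1} = y_i - F_0(x_i)$ and checks numerically, with a central finite difference, that it matches $-\partial L / \partial F$. The targets are synthetic and the helper names are hypothetical.

```python
import numpy as np


def squared_loss(y, f):
    """Per-sample loss L(y, F) = 1/2 * (y - F)^2."""
    return 0.5 * (y - f) ** 2


def pseudo_residuals(y, f_prev):
    """Step 2A: r_i = -dL/dF at F = F_{m-1}(x_i), which equals y_i - F_{m-1}(x_i)."""
    return np.asarray(y, dtype=float) - np.asarray(f_prev, dtype=float)


rng = np.random.default_rng(0)
y = rng.uniform(100, 1000, size=10)   # hypothetical targets
f0 = np.full_like(y, y.mean())        # predictions of the initial model F_0

r1 = pseudo_residuals(y, f0)

# Central finite difference of the loss with respect to the prediction F
eps = 1e-3
grad = (squared_loss(y, f0 + eps) - squared_loss(y, f0 - eps)) / (2 * eps)
print(np.allclose(r1, -grad, atol=1e-4))  # compares residuals with the negative gradient
```

Because $F_0$ is the mean, the residuals of the first loop always sum to zero.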

2B. and 2C. Fit a model (weak learner) to the residuals and find the optimized solution.

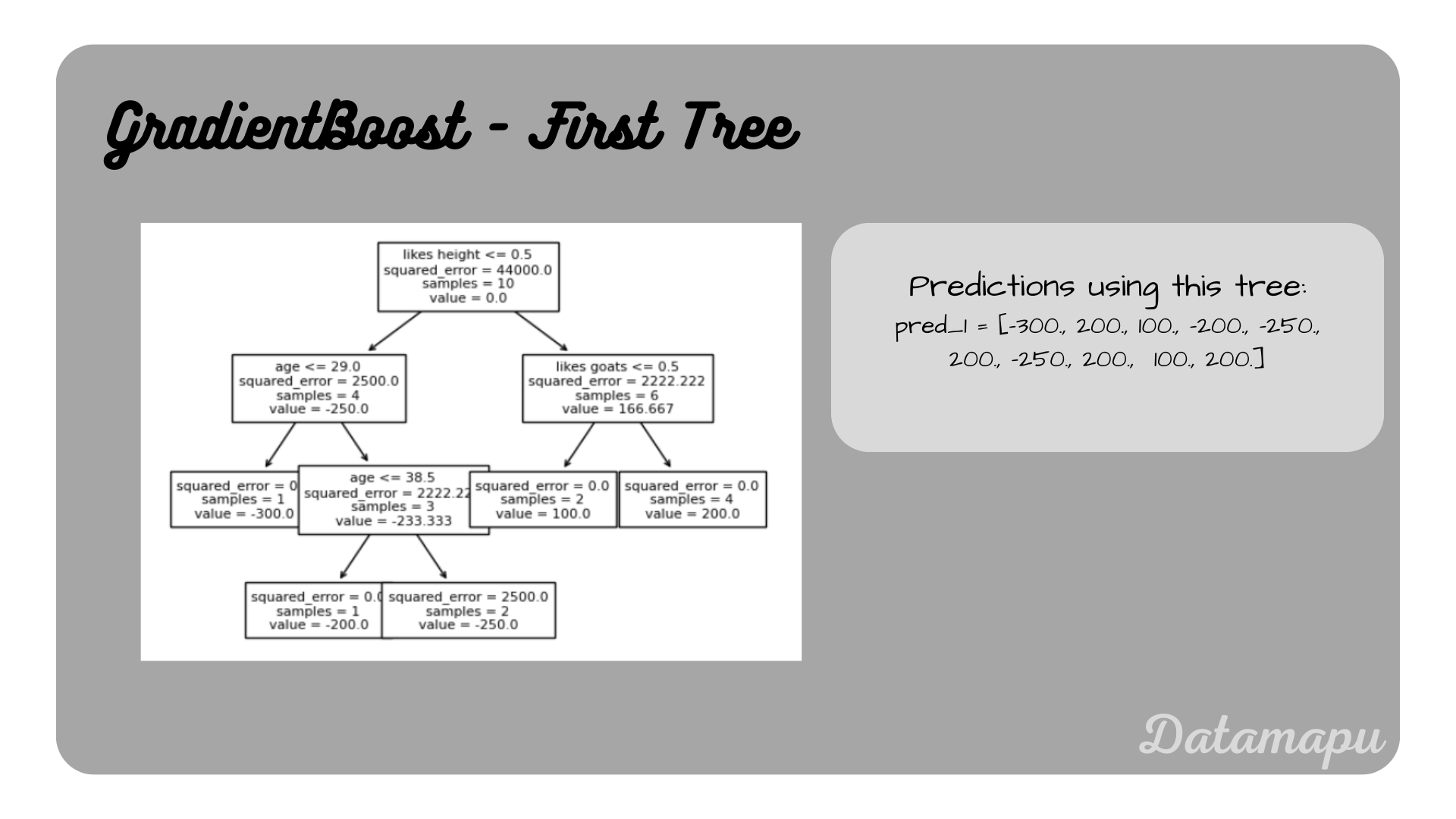

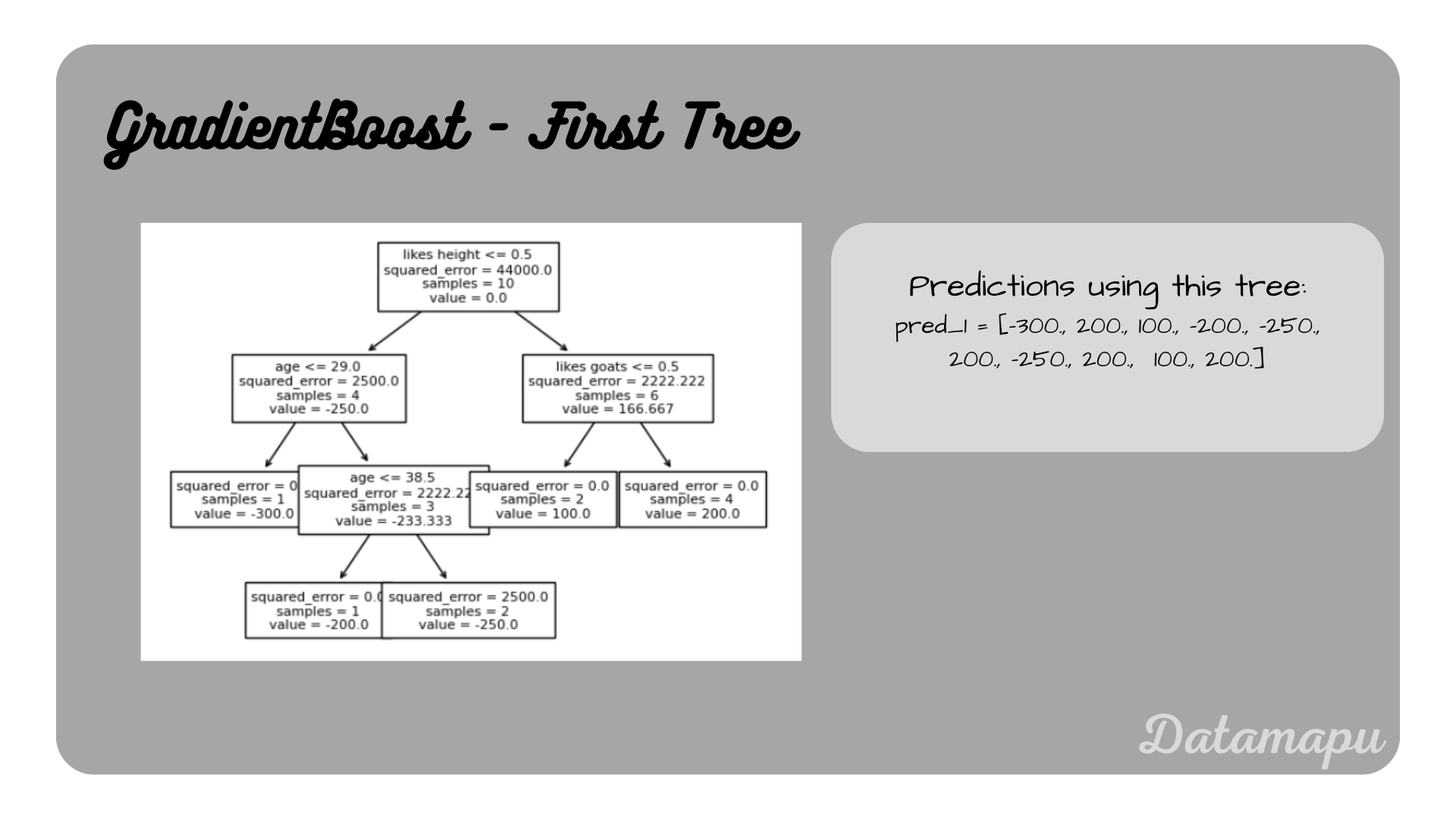

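Now we fit a Decision Tree to the residuals $r_{i1}$, using age, liking heights, and liking goats as input features. For the squared-error loss, the optimized output value $\gamma_{j1}$ of each leaf $R_{j1}$ is simply the mean of the residuals that fall into this leaf.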

First Decision Tree, i.e. first weak learner
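Steps 2B and 2C can be sketched with scikit-learn's DecisionTreeRegressor. This is a minimal sketch under stated assumptions: the three features and the targets are synthetic stand-ins for age, liking heights, liking goats, and climbed meters, and max_depth=2 is an assumed pruning level, not necessarily the setting behind the tree shown above. The final lines illustrate that, for the squared error, each leaf outputs the mean residual of its samples.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
# Synthetic stand-ins for age, likes height (0/1), likes goats (0/1)
X = np.column_stack([
    rng.integers(10, 80, size=10),
    rng.integers(0, 2, size=10),
    rng.integers(0, 2, size=10),
]).astype(float)
y = rng.uniform(100, 1000, size=10)   # hypothetical climbed meters

r1 = y - y.mean()                     # residuals with respect to F_0

# 2B: fit a pruned tree to the residuals (max_depth=2 is an assumption)
tree1 = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, r1)

# 2C: the output gamma_j1 of leaf j is the mean residual of the samples in it
leaf_of_sample = tree1.apply(X)
gamma = {leaf: r1[leaf_of_sample == leaf].mean() for leaf in np.unique(leaf_of_sample)}
h1 = tree1.predict(X)                 # h_1(x_i) = gamma of the leaf containing x_i
```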


2D. Update the model.

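The next step is to update the model with the new predictions from the weak learner:

$$F_1(x) = F_0(x) + h_1(x),$$

where $h_1(x)$ is the prediction of the first Decision Tree, i.e. the output value $\gamma_{j1}$ of the leaf that $x$ falls into.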
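The update of step 2D adds the tree's predictions to the current predictions. The sketch below assumes synthetic data, max_depth=2, and no scaling of the tree output; update_model is a hypothetical helper name. Because each leaf predicts the mean residual of its samples, the training MSE after the update cannot be larger than before.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def update_model(f_prev, tree, X):
    """Step 2D: F_m(x) = F_{m-1}(x) + h_m(x), without any learning rate."""
    return f_prev + tree.predict(X)


rng = np.random.default_rng(0)
# Synthetic stand-ins for age, likes height (0/1), likes goats (0/1)
X = np.column_stack([
    rng.integers(10, 80, size=10),
    rng.integers(0, 2, size=10),
    rng.integers(0, 2, size=10),
]).astype(float)
y = rng.uniform(100, 1000, size=10)   # hypothetical climbed meters

f0 = np.full_like(y, y.mean())
tree1 = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, y - f0)
f1 = update_model(f0, tree1, X)

mse0 = np.mean((y - f0) ** 2)
mse1 = np.mean((y - f1) ** 2)
print(f"MSE before: {mse0:.2f}, after: {mse1:.2f}")
```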

This results in

The MSE of these new predictions is

We can see that the error after this first update reduced to

Second loop

In the second loop, we perform the same steps as in the first one.
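Since both loops perform identical steps, they can be wrapped into one function. The following sketch (hypothetical function name, synthetic data, assumed max_depth=2) runs steps 2A to 2D for $M = 2$ iterations and records the training MSE after each one.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def boosting_iteration(X, y, f_prev, max_depth=2):
    """One loop of steps 2A-2D for the squared-error loss."""
    residuals = y - f_prev                                    # 2A
    tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
    tree.fit(X, residuals)                                    # 2B + 2C
    return f_prev + tree.predict(X), tree                     # 2D


rng = np.random.default_rng(0)
# Synthetic stand-ins for age, likes height (0/1), likes goats (0/1)
X = np.column_stack([
    rng.integers(10, 80, size=10),
    rng.integers(0, 2, size=10),
    rng.integers(0, 2, size=10),
]).astype(float)
y = rng.uniform(100, 1000, size=10)   # hypothetical climbed meters

f = np.full_like(y, y.mean())         # F_0
mse_history = [np.mean((y - f) ** 2)]
trees = []
for m in range(2):                    # M = 2 loops
    f, tree = boosting_iteration(X, y, f)
    trees.append(tree)
    mse_history.append(np.mean((y - f) ** 2))

print([round(float(v), 2) for v in mse_history])
```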

2A. Compute the residuals between the predictions and the true observations.

We start by computing the residuals between the target values $y_i$ and the predictions of the updated model $F_1(x_i)$:

$$r_{i2} = y_i - F_1(x_i).$$

This results in

2B. and 2C. Fit a model (weak learner) to the residuals and find the optimized solution.

We fit a Decision Tree to the newly calculated residuals $r_{i2}$.

Second Decision Tree, i.e. second weak learner


2D. Update the model.

The current model is updated using the predictions $h_2(x)$ of the above Decision Tree:

$$F_2(x) = F_1(x) + h_2(x).$$

This results in

The MSE of this updated prediction is

That is, we see another reduction in the error. Because of the simplicity of the data, the error in this case is already

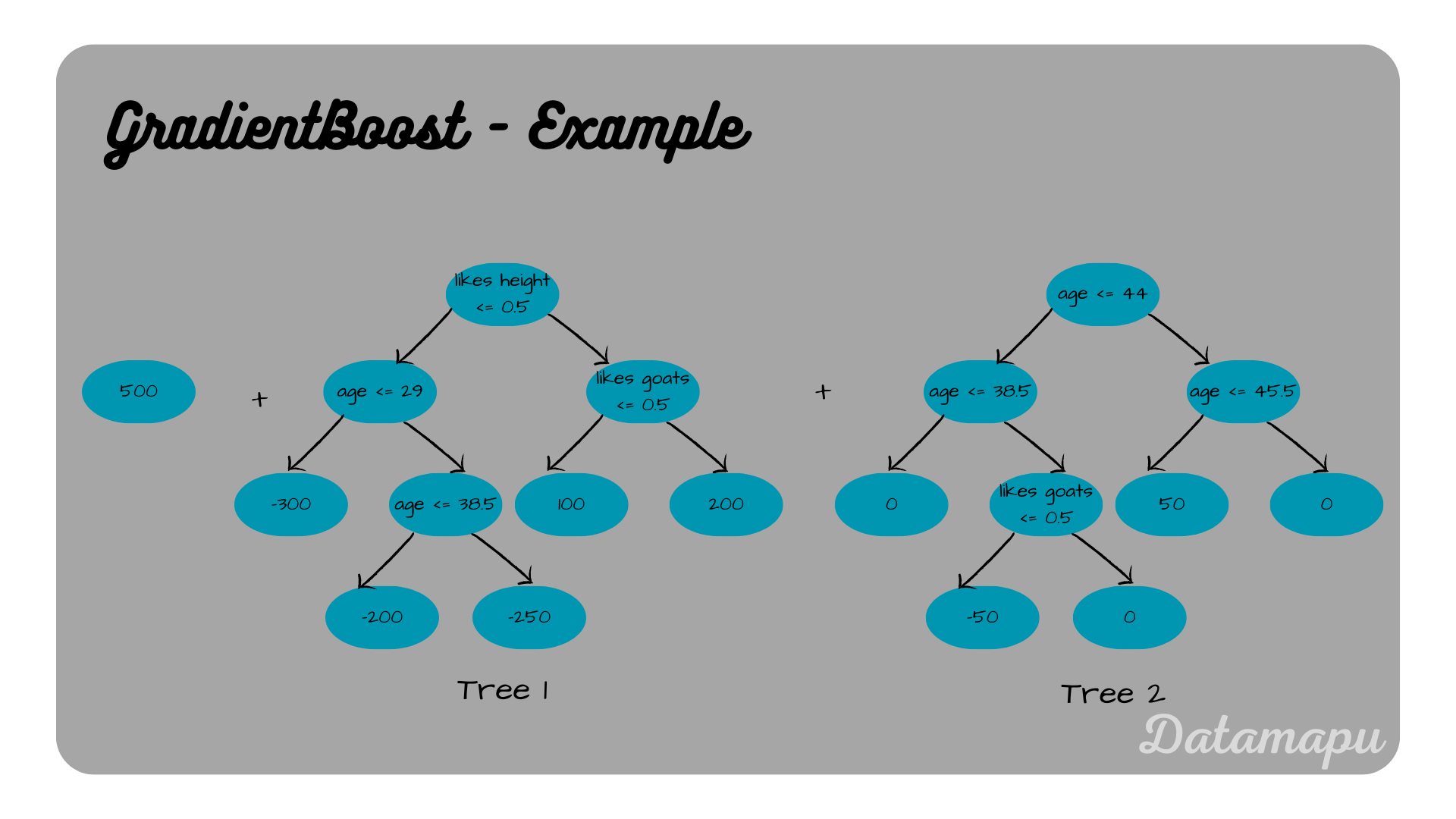

Step 3 - Output final model

The result of the last iteration of the loop defines the final model.

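For $M = 2$, the final model is

$$F_2(x) = F_0(x) + h_1(x) + h_2(x),$$

where $h_1$ and $h_2$ are the predictions of the first and second Decision Tree.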

Final model.
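Once the trees are stored, the final model can be evaluated for any input by adding all tree predictions to the initial constant. In this sketch, fit_boosting and predict_final are hypothetical helper names, the data are synthetic, and max_depth=2 is assumed; the new sample passed at the end is made up for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_boosting(X, y, n_trees=2, max_depth=2):
    """Return F_0, the fitted trees, and the training predictions F_M(x_i)."""
    f0 = float(y.mean())
    f = np.full(len(y), f0)
    trees = []
    for _ in range(n_trees):
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0).fit(X, y - f)
        f = f + tree.predict(X)
        trees.append(tree)
    return f0, trees, f


def predict_final(X_new, f0, trees):
    """Step 3: F_M(x) = F_0 + h_1(x) + ... + h_M(x)."""
    pred = np.full(X_new.shape[0], f0)
    for tree in trees:
        pred = pred + tree.predict(X_new)
    return pred


rng = np.random.default_rng(0)
# Synthetic stand-ins for age, likes height (0/1), likes goats (0/1)
X = np.column_stack([
    rng.integers(10, 80, size=10),
    rng.integers(0, 2, size=10),
    rng.integers(0, 2, size=10),
]).astype(float)
y = rng.uniform(100, 1000, size=10)   # hypothetical climbed meters

f0, trees, f_train = fit_boosting(X, y)
# Hypothetical new person: age 35, likes heights, does not like goats
print(predict_final(np.array([[35.0, 1.0, 0.0]]), f0, trees))
```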


Note that Gradient Boosting usually uses an additional hyperparameter, the learning rate, which determines the contribution of each tree: the predictions of the trees are scaled by the learning rate before they are added to the model. In the above example, we thus used a learning rate of $1$.

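Including a learning rate $\nu$, the model update in step 2D becomes

$$F_m(x) = F_{m-1}(x) + \nu \, h_m(x),$$

with $0 < \nu \le 1$ and $h_m(x)$ the prediction of the $m$-th Decision Tree.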
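Adding a learning rate changes only the update line. The sketch below is an illustrative variant (hypothetical names, synthetic data, assumed max_depth=2) with a parameter learning_rate playing the role of $\nu$; with learning_rate=1.0 it performs the unscaled updates used in the example above.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_boosting_lr(X, y, n_trees=2, max_depth=2, learning_rate=1.0):
    """Gradient Boosting for squared error with shrinkage nu = learning_rate."""
    f0 = float(y.mean())
    f = np.full(len(y), f0)
    trees = []
    for _ in range(n_trees):
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0).fit(X, y - f)
        f = f + learning_rate * tree.predict(X)   # F_m = F_{m-1} + nu * h_m
        trees.append(tree)
    return f0, trees, f


rng = np.random.default_rng(0)
# Synthetic stand-ins for age, likes height (0/1), likes goats (0/1)
X = np.column_stack([
    rng.integers(10, 80, size=10),
    rng.integers(0, 2, size=10),
    rng.integers(0, 2, size=10),
]).astype(float)
y = rng.uniform(100, 1000, size=10)   # hypothetical climbed meters

# One tree, scaled by nu = 0.1
f0, trees_small, f_small = fit_boosting_lr(X, y, n_trees=1, learning_rate=0.1)
```

With a small learning rate, each tree moves the predictions only a fraction of the way towards the targets, so typically more trees are needed.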

Fit a Model in Python

Python’s sklearn (scikit-learn) library provides gradient boosting models, such as GradientBoostingRegressor for regression tasks. We can use it to fit a simple model to our example data. You can find a more complex example with a more realistic dataset on Kaggle.

Let’s read the data as a Pandas dataframe.


There are several hyperparameters that can be changed in the gradient boosting model of sklearn; a full list can be found in the sklearn documentation. In this example, we change the hyperparameters n_estimators, which defines the number of Decision Trees used as weak learners, max_depth, which defines the maximal depth of the Decision Trees, and learning_rate, which defines the weight of each tree. We did not use the learning rate in the algorithm above. The idea is similar to Adaboost, where each weak learner is given a weight. In the calculations above, this weight was set to $1$.

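A minimal sketch of fitting sklearn's GradientBoostingRegressor with the three hyperparameters discussed above could look like this. The data are synthetic stand-ins for the dataframe of this post, and max_depth=2 is an assumed value; learning_rate=1.0 corresponds to the unscaled updates of the manual calculation. The staged_predict method yields the predictions after each boosting stage, so the training MSE can be tracked as in the loops above.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

rng = np.random.default_rng(0)
# Synthetic stand-ins for age, likes height (0/1), likes goats (0/1)
X = np.column_stack([
    rng.integers(10, 80, size=10),
    rng.integers(0, 2, size=10),
    rng.integers(0, 2, size=10),
]).astype(float)
y = rng.uniform(100, 1000, size=10)   # hypothetical climbed meters

model = GradientBoostingRegressor(
    n_estimators=2,      # number of trees (weak learners)
    max_depth=2,         # assumed maximal depth of each tree
    learning_rate=1.0,   # no shrinkage, as in the manual calculation
    random_state=0,
)
model.fit(X, y)

# Training MSE after each boosting stage
stage_mse = [np.mean((y - pred) ** 2) for pred in model.staged_predict(X)]
print(stage_mse)
```

sklearn's trees use the friedman_mse split criterion by default, so the chosen splits may differ from a hand calculation with plain squared error.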

Using the predict method gives us the predictions. We also calculate the score of the prediction. The score in this case is the Coefficient of Determination, often abbreviated as $R^2$.


In this case, this leads to perfect predictions, i.e. $R^2 = 1$.
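For reference, $R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}$, which is the value reported by the score method of sklearn regressors. The sketch below computes it by hand with a hypothetical helper r_squared on synthetic numbers and compares it with sklearn.metrics.r2_score. Perfect predictions give exactly 1, and always predicting the mean gives 0.

```python
import numpy as np
from sklearn.metrics import r2_score


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)          # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total sum of squares
    return 1.0 - ss_res / ss_tot


rng = np.random.default_rng(0)
y_true = rng.uniform(100, 1000, size=10)             # synthetic targets
y_noisy = y_true + rng.normal(0, 50, size=10)        # imperfect predictions

print(r_squared(y_true, y_noisy), r2_score(y_true, y_noisy))
print(r_squared(y_true, y_true))                     # prints 1.0
```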

Summary

In this post, we calculated the individual steps of a Gradient Boosting model for a regression problem. We saw how updating the model by adding weak learners to the ensemble improves the results. We deliberately chose a very simple dataset so that each calculation can be followed and each step understood. A more general description and explanation of the algorithm is given in the separate article Gradient Boost for Regression - Explained.
