Backpropagation Step by Step

Introduction

A neural network consists of a set of parameters, the weights and biases, which determine the output of the network, that is, its predictions. When training a neural network, we aim to adjust these weights and biases so that the predictions improve. To achieve that, backpropagation is used. In this post, we discuss how backpropagation works and explain it in detail for three simple examples. The first two examples contain all the calculations; for the last one, we only show the equations that need to be calculated. We will not go into the general formulation of the backpropagation algorithm, but we give some further reading at the end.

This post is quite long because of the detailed examples. If you want to skip some parts, each example has its own numbered section below.

Main Concepts of Training a Neural Net

Before starting with the first example, let’s quickly go through the main ideas of training a neural net. The first thing we need when we want to train a neural net is the training data. The training data consists of pairs of inputs and labels. The inputs are also called features and are usually written as

Unless stated otherwise, we use the following data, activation function, and loss throughout the examples of this post.

Training Data

We consider the simplest situation, with one-dimensional input data and just one sample

Activation Function

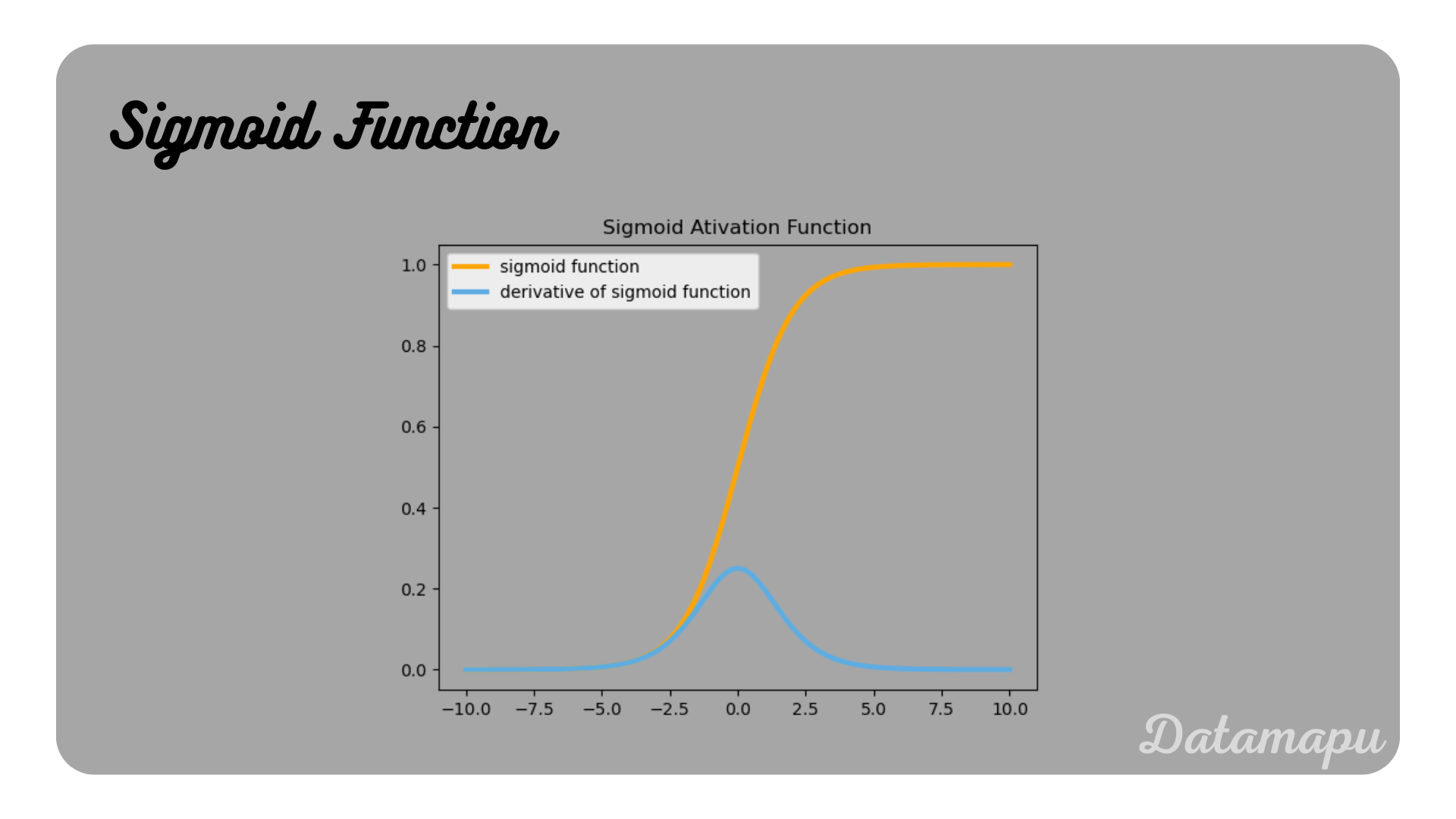

As activation function, we use the Sigmoid function

Loss Function

As loss function, we use the Sum of the Squared Error, defined as

with

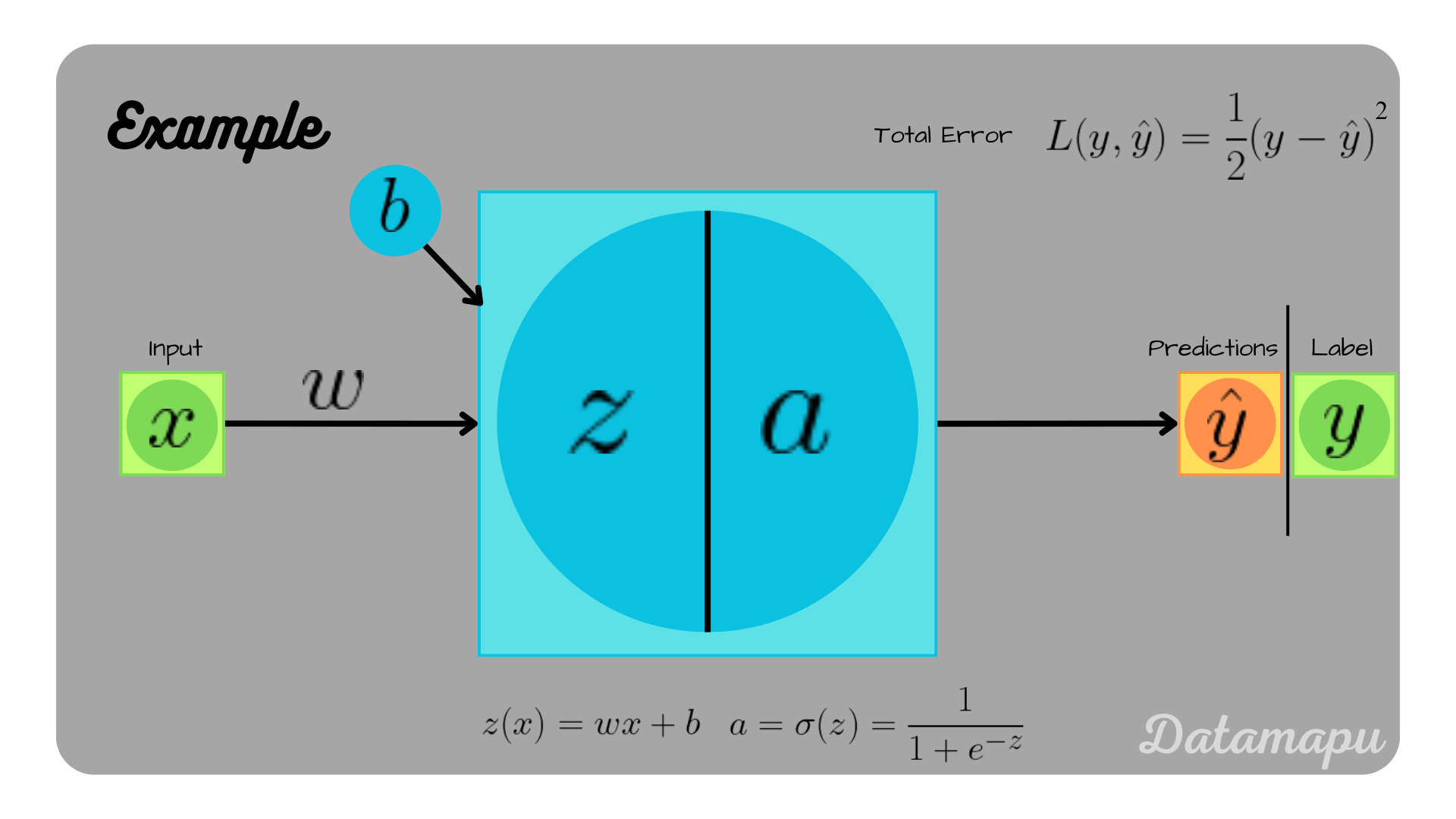
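The two building blocks named above can be sketched in a few lines of Python. This is only a minimal sketch: the function names `sigmoid` and `sse_loss` are hypothetical, and the leading factor of 1/2 in the loss is an assumed convention (it is common because it cancels when differentiating, but the definition used in this post may differ).

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def sse_loss(y_true, y_pred):
    """Sum of squared errors over all samples.

    The factor 0.5 is an assumption made for this sketch; it only
    rescales the loss and cancels in the derivative.
    """
    return 0.5 * sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred))
```

With a single sample, as in the examples of this post, both lists passed to `sse_loss` have length one.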

1. Example: One Neuron

To illustrate how backpropagation works, we start with the simplest neural network, which consists of a single neuron.

Illustration of a Neural Network consisting of a single Neuron.


In this simple neural net,

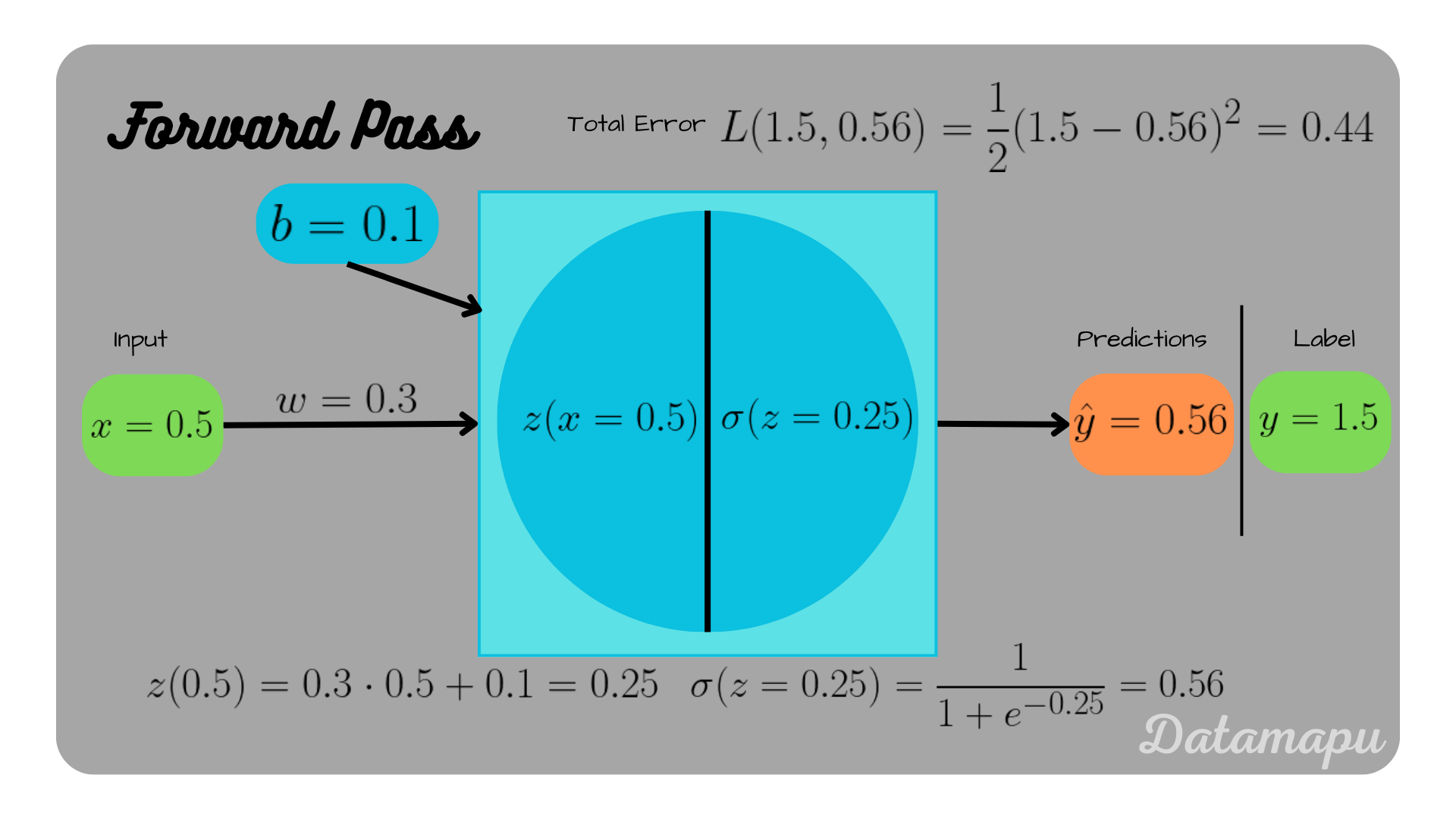

The Forward Pass

We can calculate the forward pass through this network as

Using the weight and bias defined above, we get for

The error after this forward pass can be calculated as

Forward pass through the neural net.


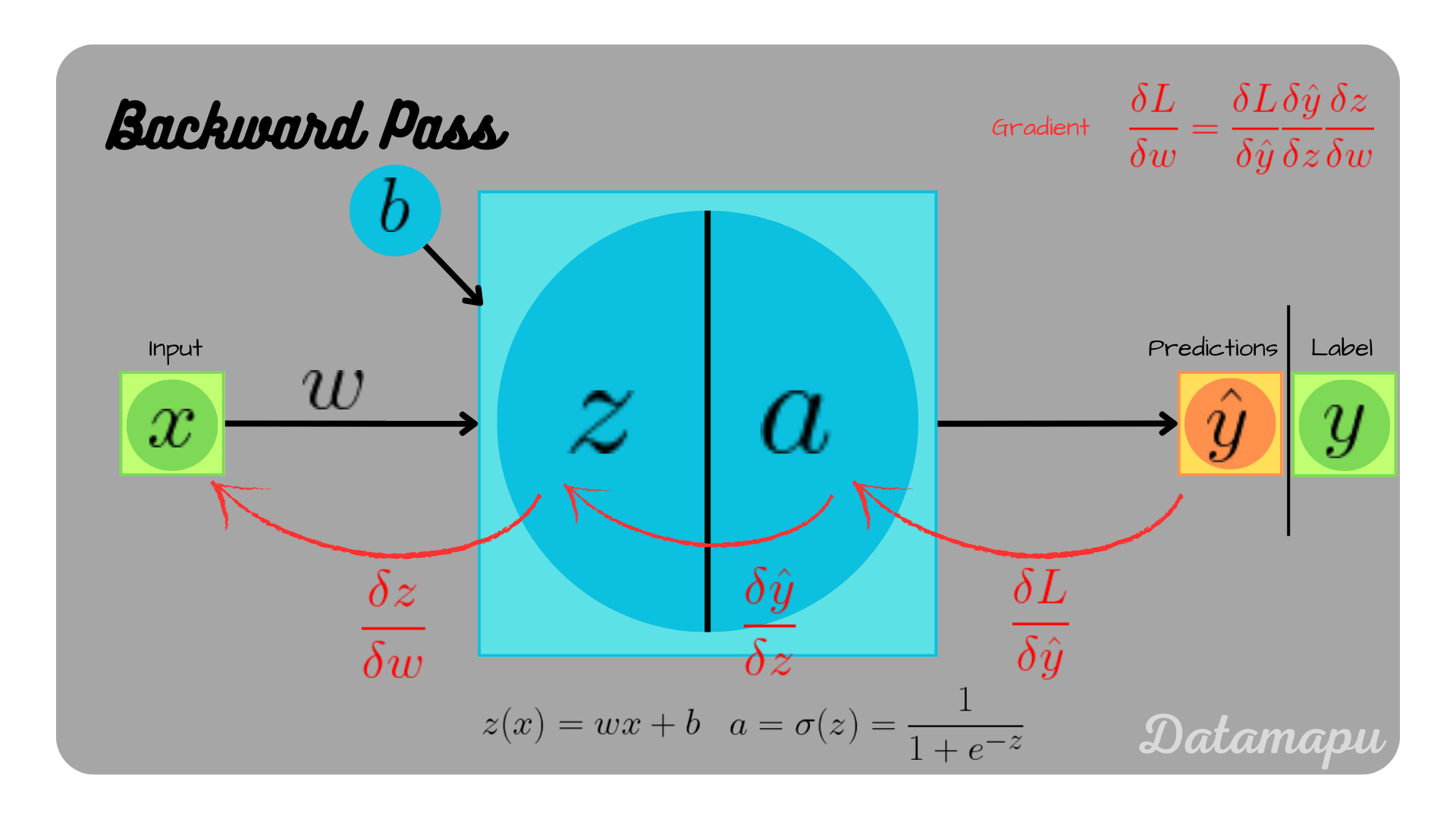
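The forward pass through a single neuron can be sketched as follows. The numbers used here (`x`, `y`, `w`, `b`) are hypothetical placeholders chosen for illustration and are not the values used in this post; the loss again assumes a factor of 1/2.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward_one_neuron(x, w, b):
    """Forward pass of one neuron: weighted input, then sigmoid."""
    z = w * x + b          # input to the activation function
    y_hat = sigmoid(z)     # prediction of the network
    return z, y_hat


# Hypothetical sample and parameters (not the values from this post).
x, y = 0.5, 1.0
w, b = 0.3, 0.1

z, y_hat = forward_one_neuron(x, w, b)
loss = 0.5 * (y - y_hat) ** 2  # assumed 1/2 convention for the squared error
print(f"z = {z:.4f}, y_hat = {y_hat:.4f}, loss = {loss:.4f}")
```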

The Backward Pass

To update the weight and the bias we use Gradient Descent, that is

with

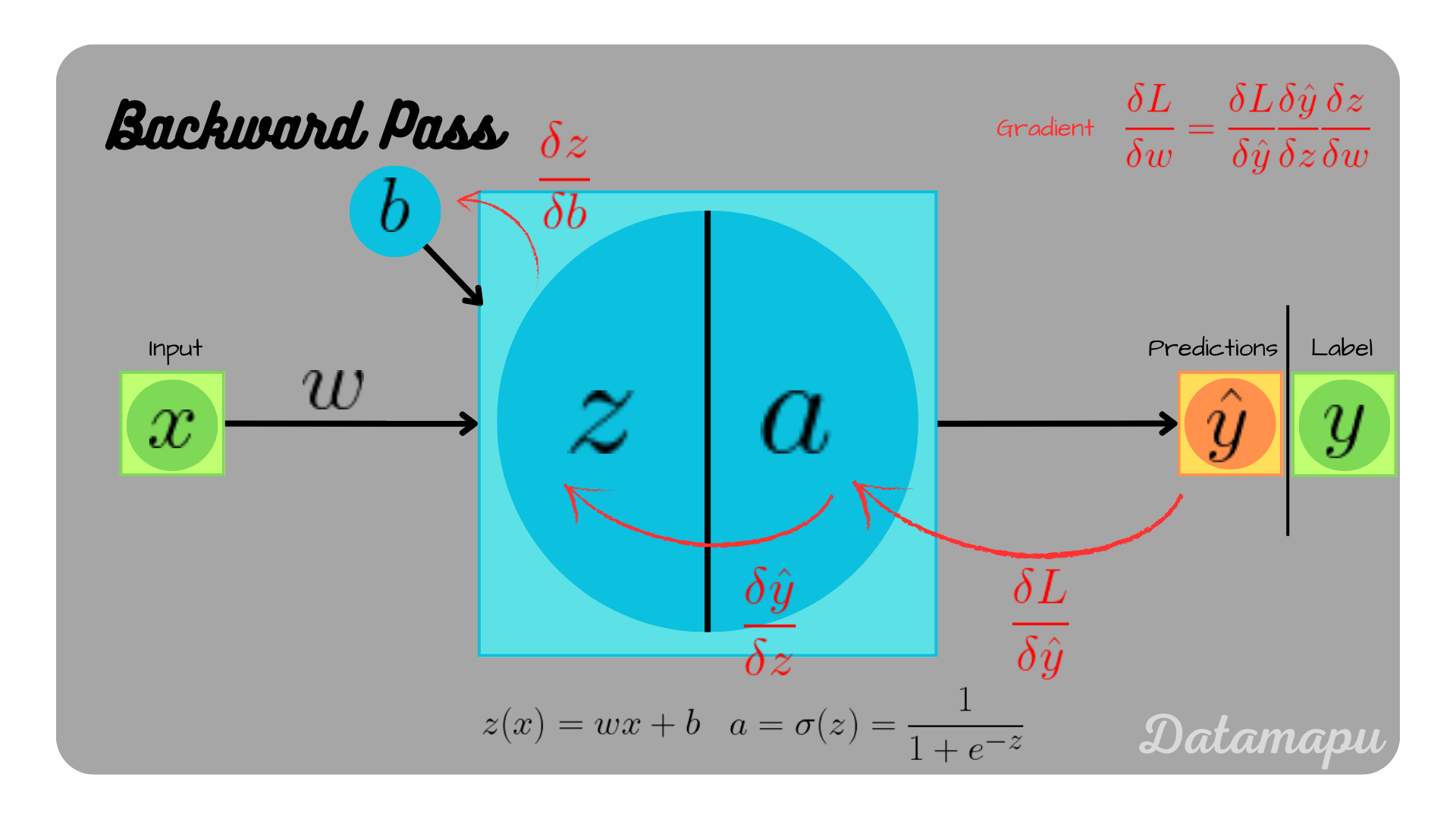
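As a hedged sketch of the update rule referred to here, written in assumed notation ($L$ for the loss, $\alpha$ for the learning rate, $z = wx + b$ for the input to the activation, and $\hat{y}$ for the prediction; the symbols used in this post may differ), gradient descent and the chain rule take the following standard form:

$$
w_{\text{new}} = w - \alpha \frac{\partial L}{\partial w}, \qquad
b_{\text{new}} = b - \alpha \frac{\partial L}{\partial b}
$$

$$
\frac{\partial L}{\partial w} = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial z} \cdot \frac{\partial z}{\partial w}, \qquad
\frac{\partial L}{\partial b} = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial z} \cdot \frac{\partial z}{\partial b}
$$

The first two factors are shared by both gradients; only the last factor depends on whether we differentiate with respect to the weight or the bias.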

Illustration of backpropagation in a neural network consisting of a single neuron.


We can calculate the individual derivatives as

Please find the detailed calculation of the derivative of the sigmoid function in the appendix of this post.

For the data we are considering, we get for the first equation

The second equation leads to

and finally

Putting the equations back together, we get

The calculation for

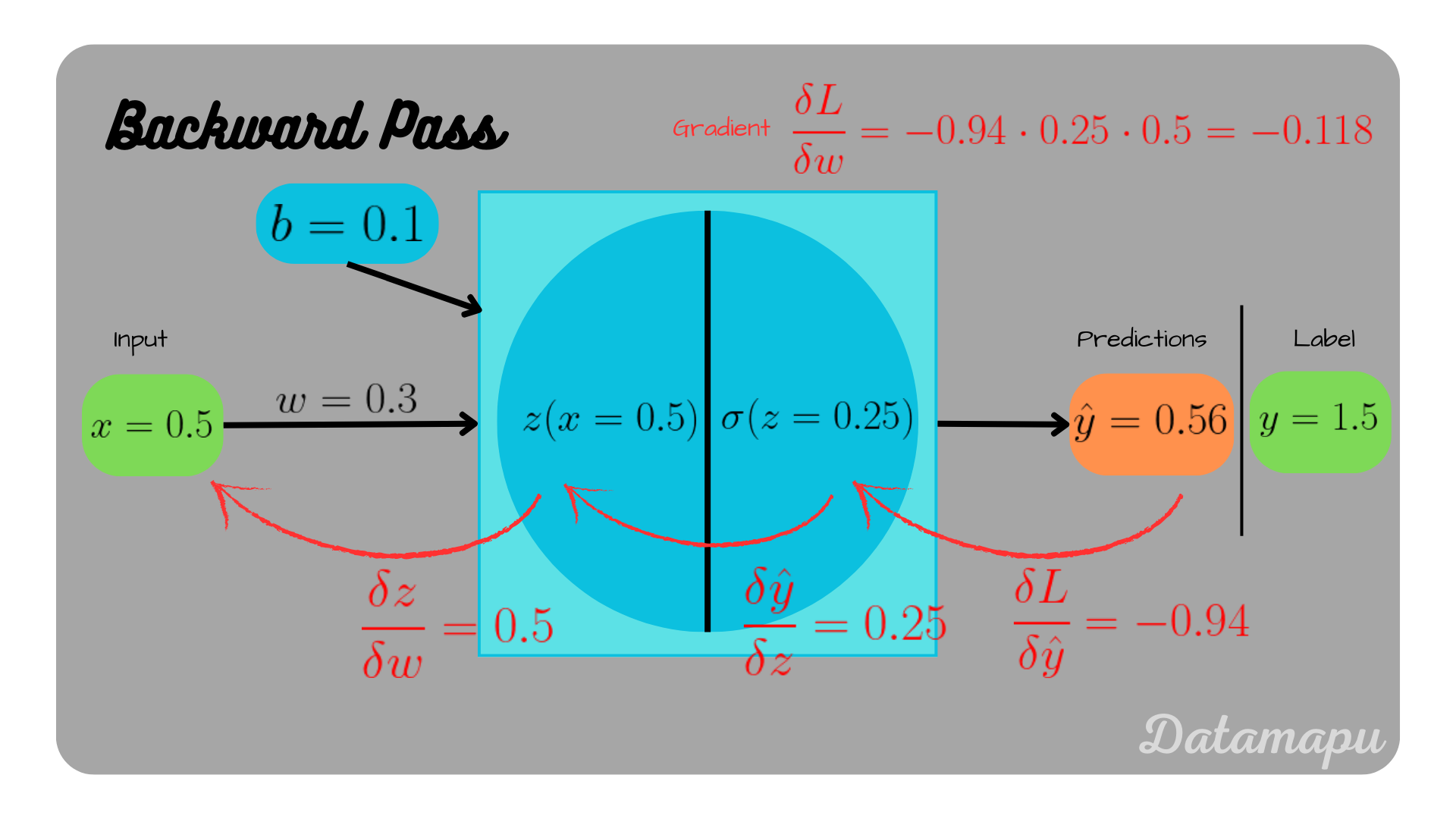

Backpropagation for the weight


The weight and the bias then update to
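One backward pass and one gradient descent step for the single neuron can be sketched in Python as below. The function names, the learning rate, and all numeric values are hypothetical, and the loss is assumed to be `0.5 * (y - y_hat) ** 2`, so the numbers will not match those of this post.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gradients_one_neuron(x, y, w, b):
    """Chain rule for one neuron with sigmoid activation.

    Assumes the loss L = 0.5 * (y - y_hat)^2 (the 1/2 is an assumption).
    """
    z = w * x + b
    y_hat = sigmoid(z)

    dL_dyhat = y_hat - y               # derivative of the loss w.r.t. the prediction
    dyhat_dz = y_hat * (1.0 - y_hat)   # derivative of the sigmoid
    dz_dw = x                          # derivative of z = w*x + b w.r.t. w
    dz_db = 1.0                        # derivative of z = w*x + b w.r.t. b

    dL_dw = dL_dyhat * dyhat_dz * dz_dw
    dL_db = dL_dyhat * dyhat_dz * dz_db
    return dL_dw, dL_db


def gradient_step(x, y, w, b, lr):
    """One gradient descent update of the weight and the bias."""
    dL_dw, dL_db = gradients_one_neuron(x, y, w, b)
    return w - lr * dL_dw, b - lr * dL_db


# Hypothetical values, for illustration only.
new_w, new_b = gradient_step(x=0.5, y=1.0, w=0.3, b=0.1, lr=0.1)
print(new_w, new_b)
```

Because the prediction is below the label in this hypothetical setup, both gradients are negative and the update increases the weight and the bias.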

Note

With this simple example, we illustrated one forward and one backward pass. It is a good example for understanding the calculations; in real projects, however, data and neural nets are much more complex. In reality, one forward pass consists of processing all the

2. Example: Two Neurons

The second example we consider is a neural net that consists of two neurons in sequence, as illustrated in the following plot. Note that the illustration is slightly different. We skipped the last arrow towards

A neural net with two layers, each consisting of one neuron.


The Forward Pass

The forward pass is calculated as follows

Together this leads to

Using the values defined above, we get

The loss in this case is

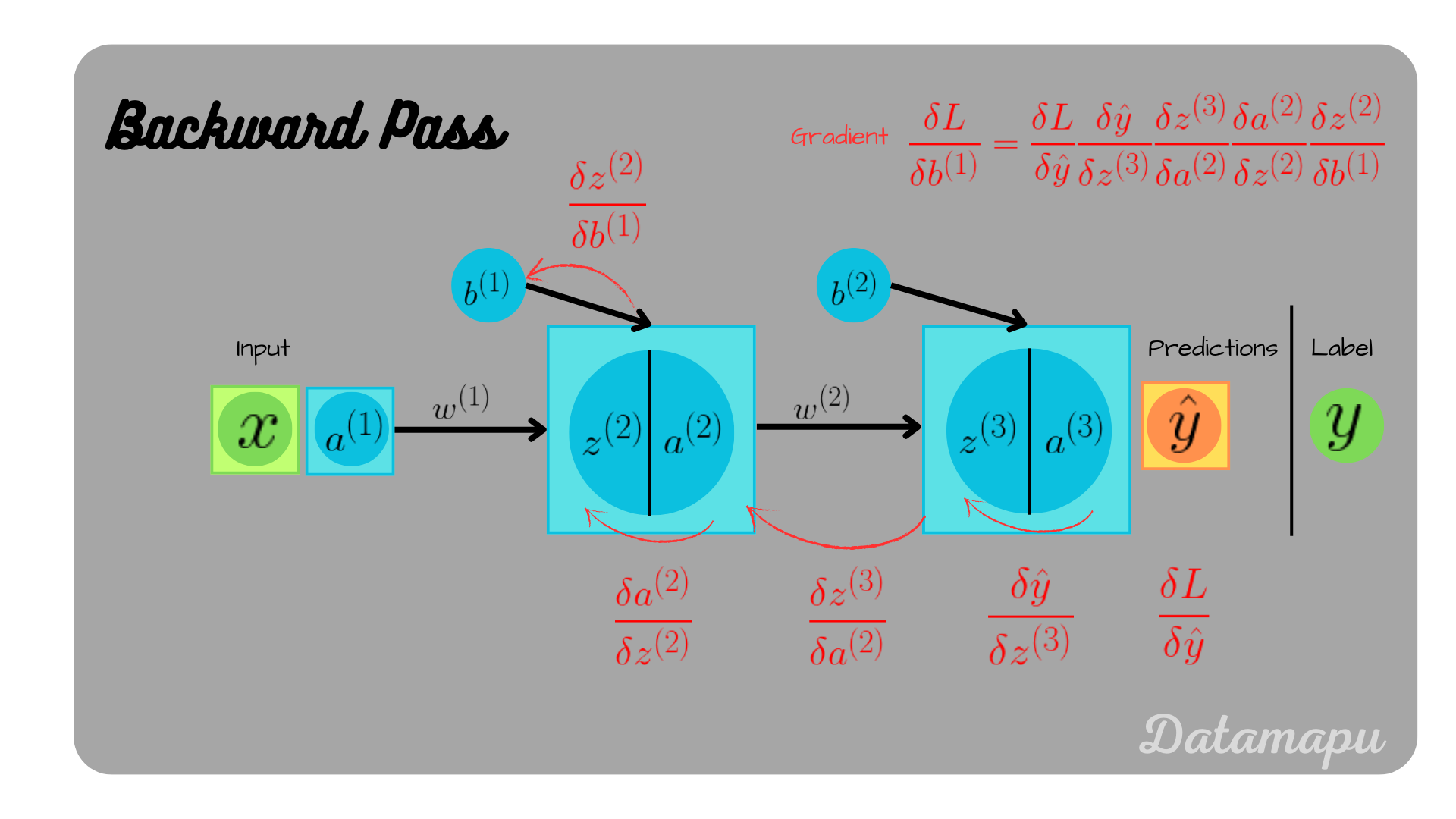
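The forward pass through two neurons in sequence can be sketched as follows. The parameter names `w1`, `b1`, `w2`, `b2` and their values are hypothetical, and the sketch assumes that both neurons use the sigmoid activation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward_two_neurons(x, w1, b1, w2, b2):
    """Forward pass through two neurons, one after the other."""
    z1 = w1 * x + b1
    a1 = sigmoid(z1)       # output of the first neuron
    z2 = w2 * a1 + b2      # the first output is the input of the second neuron
    y_hat = sigmoid(z2)    # prediction of the network
    return a1, y_hat


# Hypothetical sample and parameters (not the values from this post).
x, y = 0.5, 1.0
a1, y_hat = forward_two_neurons(x, w1=0.3, b1=0.1, w2=0.2, b2=0.4)
loss = 0.5 * (y - y_hat) ** 2  # assumed 1/2 convention
print(a1, y_hat, loss)
```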

The Backward Pass

In the backward pass, we want to update all four model parameters: the two weights and the two biases.

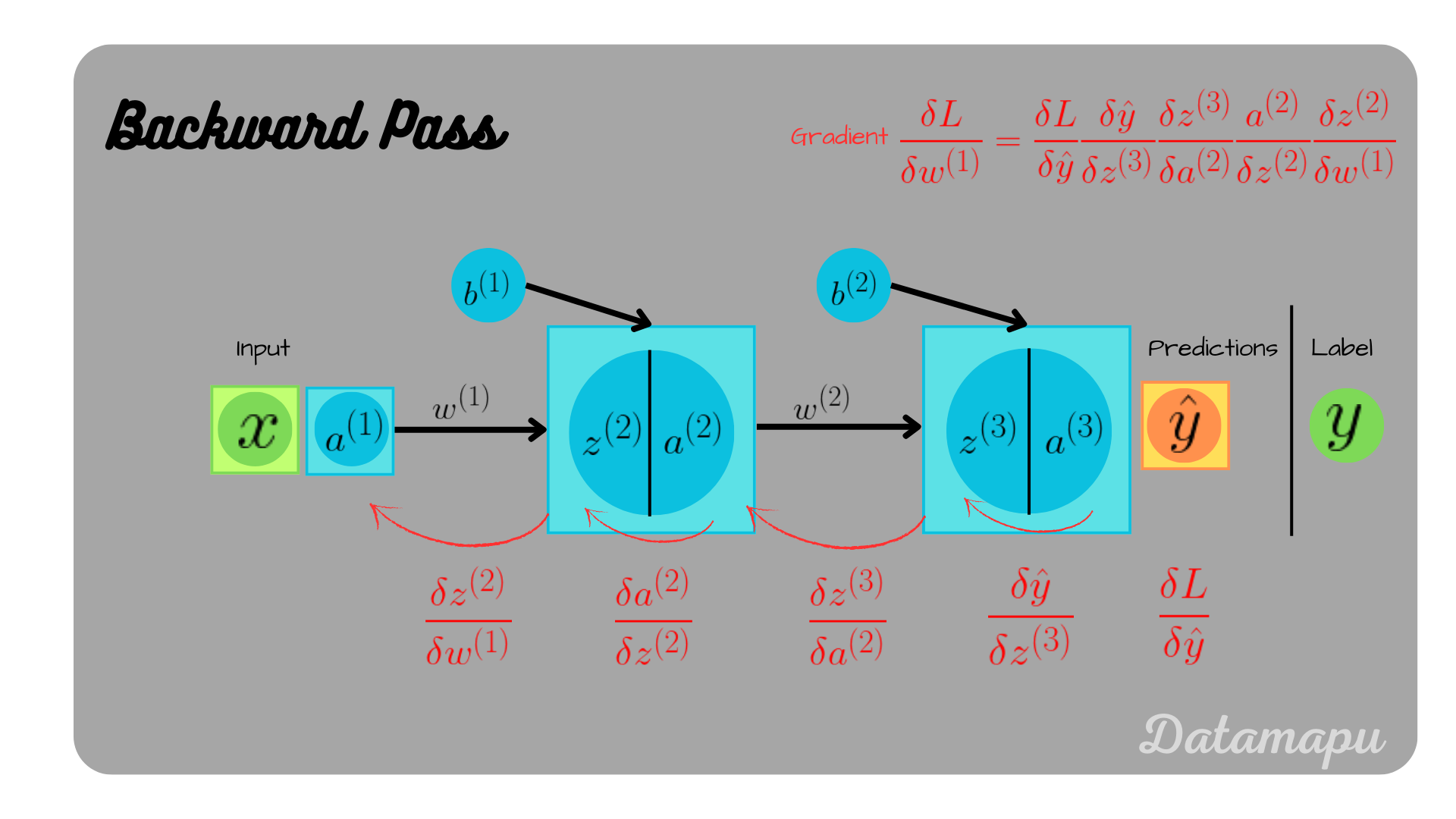

For

We will now focus on the remaining two. The idea is exactly the same, only we now have to apply the chain rule several times

and

as illustrated in the following plots.

Backpropagation illustrated.


Calculating the individual derivatives, we get

For the detailed development of the derivative of the sigmoid function, please check the appendix of this post. With the values defined, we get for the first equation

For the second equation

with

we get

For the third equation, we get

The fourth equation leads to

with

Replacing this in the above equation leads to

The fifth equation gives

and the last equation is always equal to

Putting the derivatives back together, we get

and

With that we can update the weights

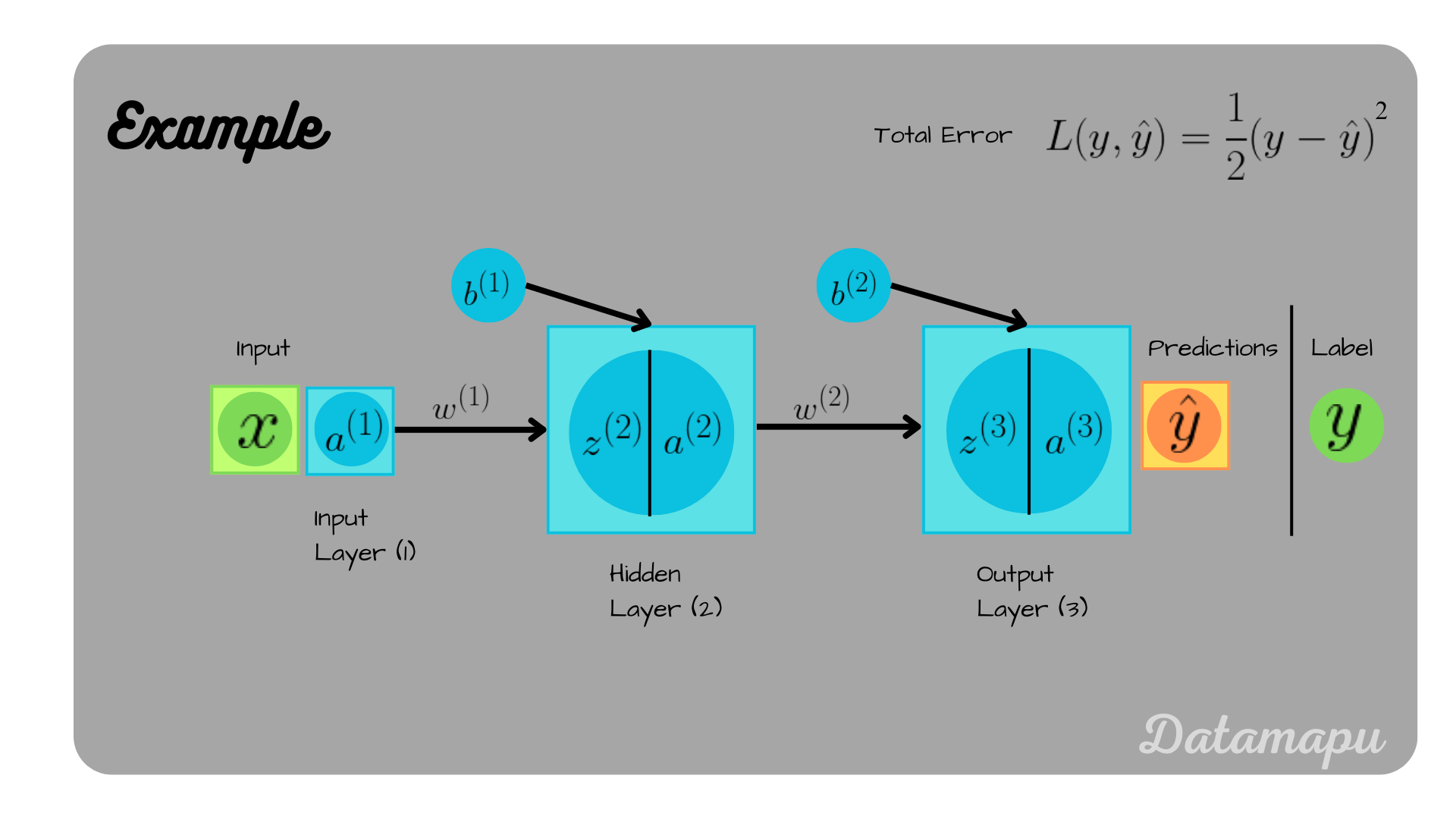

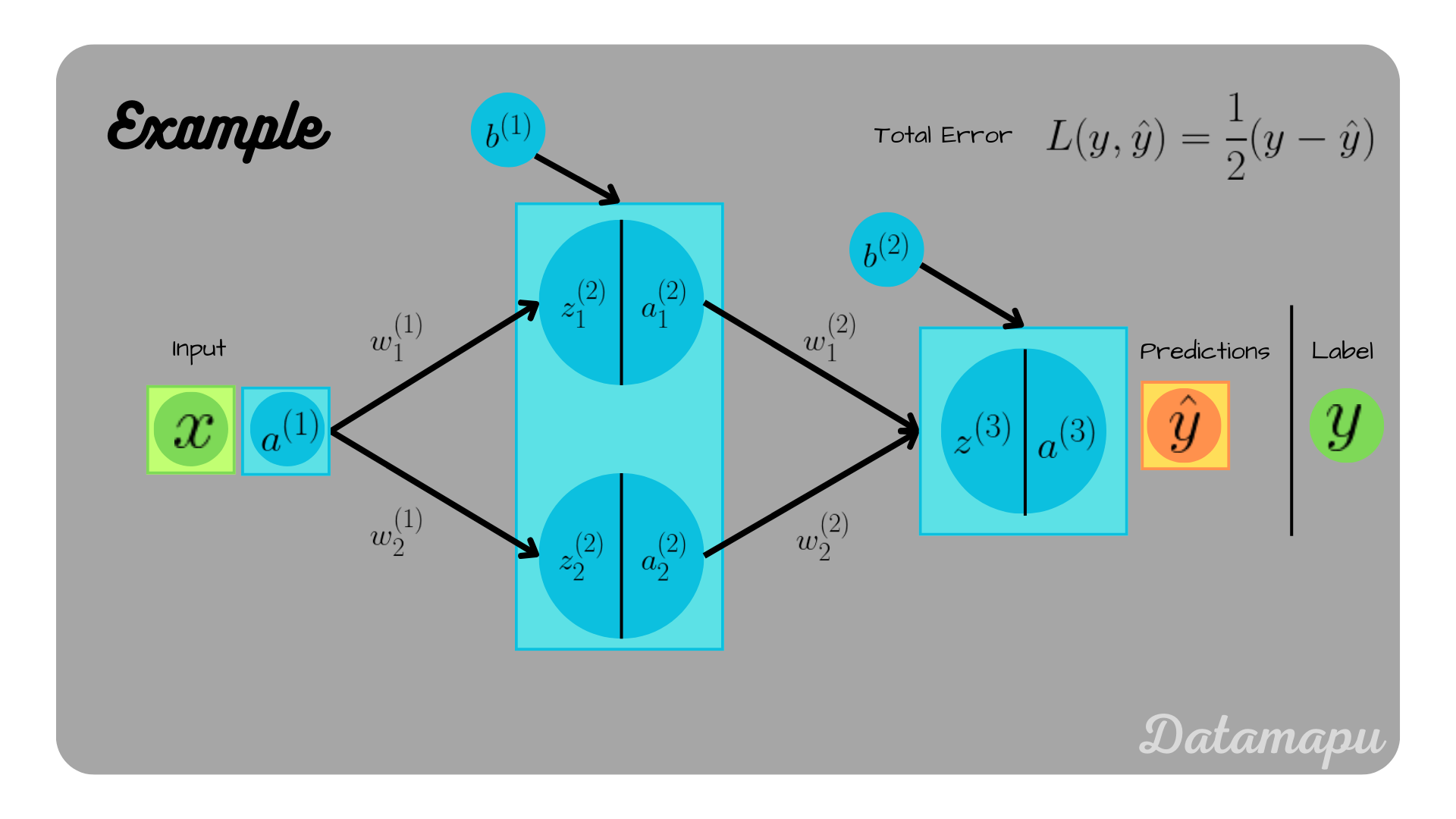
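The repeated application of the chain rule for the two-neuron net can be sketched in Python as below. All names and values are hypothetical, both neurons are assumed to use the sigmoid activation, and the loss is assumed to be `0.5 * (y - y_hat) ** 2`. The intermediate products `delta2` and `delta1` are a common way to reuse the shared factors of the chain rule.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_two_neurons(x, y, w1, b1, w2, b2):
    """Forward pass plus squared error (assumed 1/2 convention)."""
    a1 = sigmoid(w1 * x + b1)
    y_hat = sigmoid(w2 * a1 + b2)
    return 0.5 * (y - y_hat) ** 2


def gradients_two_neurons(x, y, w1, b1, w2, b2):
    """Gradients of the loss w.r.t. all four parameters via the chain rule."""
    a1 = sigmoid(w1 * x + b1)
    y_hat = sigmoid(w2 * a1 + b2)

    # Shared factor for the second neuron: dL/dy_hat * dy_hat/dz2
    delta2 = (y_hat - y) * y_hat * (1.0 - y_hat)
    dL_dw2 = delta2 * a1    # dz2/dw2 = a1
    dL_db2 = delta2 * 1.0   # dz2/db2 = 1

    # Propagate one step further back: dz2/da1 = w2, da1/dz1 = a1 * (1 - a1)
    delta1 = delta2 * w2 * a1 * (1.0 - a1)
    dL_dw1 = delta1 * x     # dz1/dw1 = x
    dL_db1 = delta1 * 1.0   # dz1/db1 = 1
    return dL_dw1, dL_db1, dL_dw2, dL_db2


# Hypothetical values, for illustration only.
params = dict(w1=0.3, b1=0.1, w2=0.2, b2=0.4)
grads = gradients_two_neurons(0.5, 1.0, **params)
lr = 0.1
updated = {name: value - lr * g for (name, value), g in zip(params.items(), grads)}
print(updated)
```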

3. Example: Two Neurons in a Layer

In this example, we consider a neural net that has two neurons in the hidden layer. We are not going to cover it in detail, but we will look at the equations that need to be calculated. For illustration purposes, the bias term is shown as one vector for each layer, i.e. in the below plot

Example with two neurons in one layer.


Forward Pass

In the forward pass we now have to consider the sum of the two neurons in the layer. It is calculated as

with

this leads to

with

for

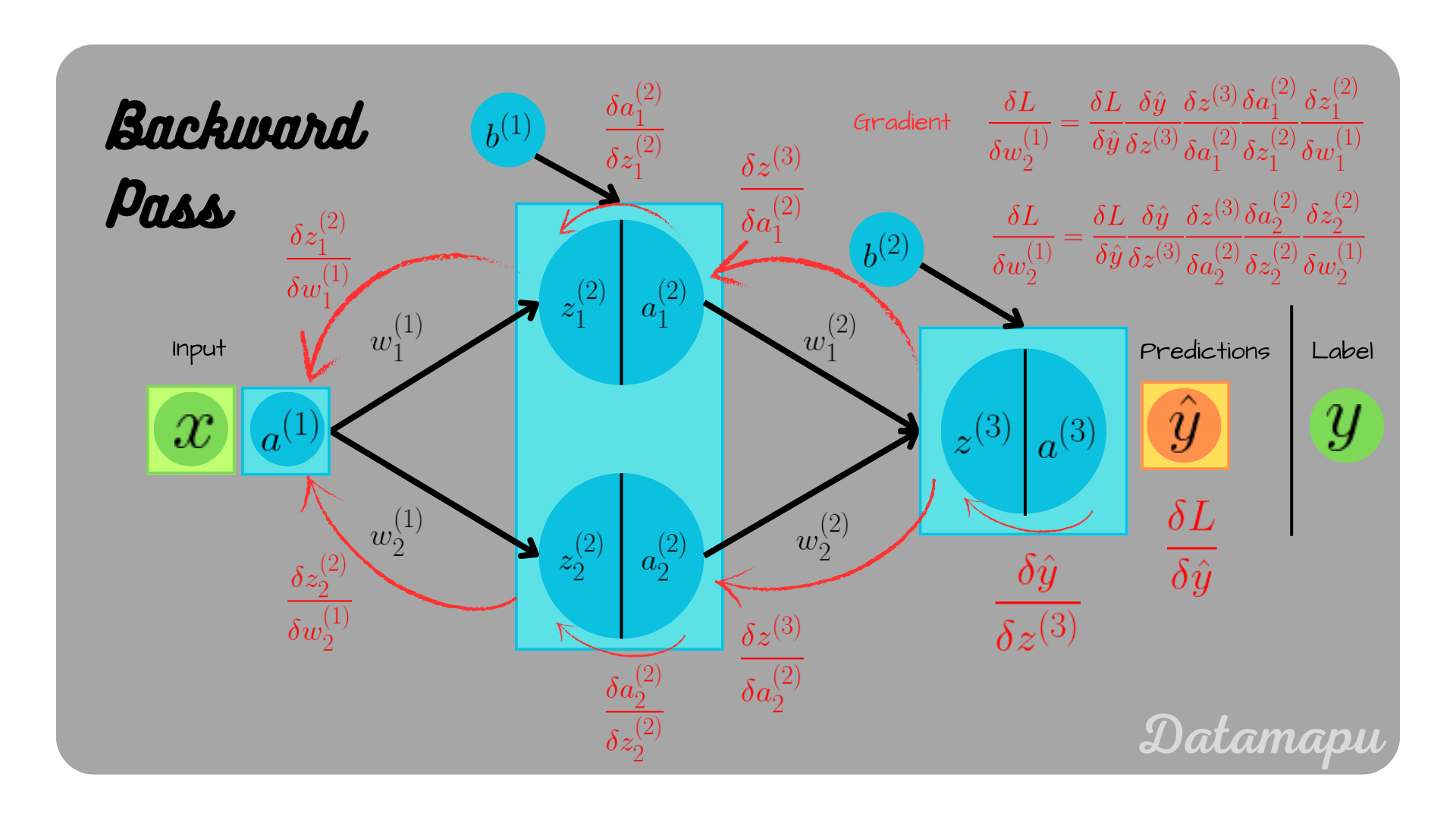

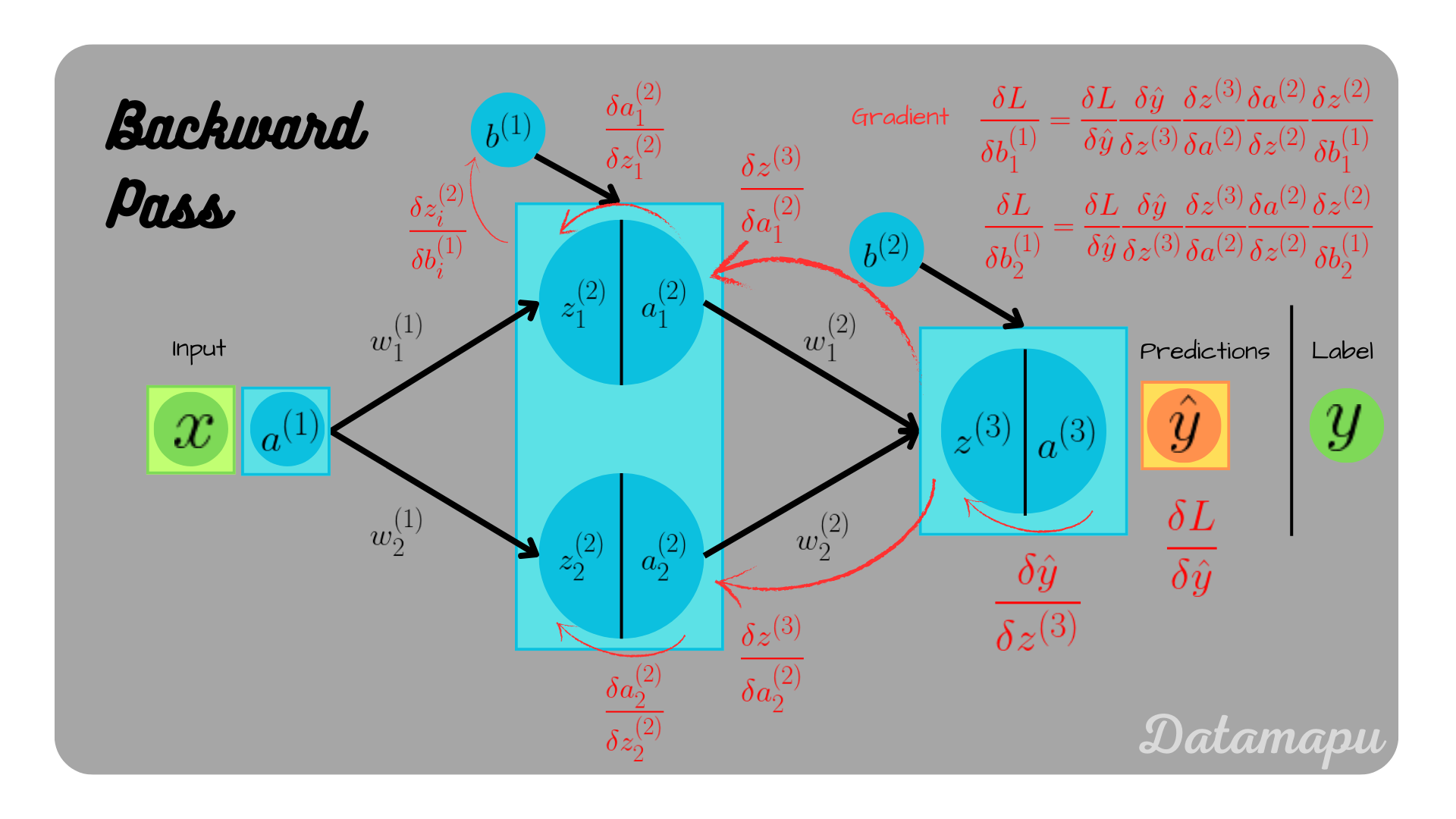
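A forward pass with two neurons in the hidden layer can be sketched as follows. The sketch assumes a one-dimensional input, a single output neuron, and the sigmoid activation everywhere; the list-based parameters `w1`, `b1`, `w2` and the scalar `b2` are hypothetical names and values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward_hidden_layer(x, w1, b1, w2, b2):
    """Forward pass: input -> two hidden neurons -> one output neuron.

    w1, b1, w2 are lists with one entry per hidden neuron; b2 is a scalar.
    """
    z1 = [w1[i] * x + b1[i] for i in range(2)]
    a1 = [sigmoid(z) for z in z1]                      # outputs of the hidden neurons
    z2 = sum(w2[i] * a1[i] for i in range(2)) + b2     # sum over both hidden neurons
    y_hat = sigmoid(z2)
    return a1, y_hat


# Hypothetical values, for illustration only.
a1, y_hat = forward_hidden_layer(0.5, w1=[0.3, -0.2], b1=[0.1, 0.0], w2=[0.2, 0.5], b2=0.4)
print(a1, y_hat)
```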

Backward Pass

For the backward pass we need to calculate the partial derivatives as follows

We can calculate all the partial derivatives as shown in the above two examples. The calculations for

Backpropagation illustrated for the weights.


Backpropagation illustrated for the biases.


We can see that even for this very small and simple neural net, the calculations easily get overwhelming.
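In code, the bookkeeping stays manageable because the shared chain-rule factors are computed once and reused. The following is a minimal sketch under the same assumptions as before (one-dimensional input, sigmoid in both layers, loss `0.5 * (y - y_hat) ** 2`), with hypothetical names and values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_hidden_layer(x, y, w1, b1, w2, b2):
    """Forward pass plus squared error (assumed 1/2 convention)."""
    a1 = [sigmoid(w1[i] * x + b1[i]) for i in range(2)]
    y_hat = sigmoid(sum(w2[i] * a1[i] for i in range(2)) + b2)
    return 0.5 * (y - y_hat) ** 2


def gradients_hidden_layer(x, y, w1, b1, w2, b2):
    """Partial derivatives of the loss for all seven parameters."""
    a1 = [sigmoid(w1[i] * x + b1[i]) for i in range(2)]
    y_hat = sigmoid(sum(w2[i] * a1[i] for i in range(2)) + b2)

    # Output neuron: dL/dy_hat * dy_hat/dz2, shared by all gradients
    delta2 = (y_hat - y) * y_hat * (1.0 - y_hat)
    dL_dw2 = [delta2 * a1[i] for i in range(2)]
    dL_db2 = delta2

    # Hidden neurons: each one receives the error through its own weight w2[i]
    delta1 = [delta2 * w2[i] * a1[i] * (1.0 - a1[i]) for i in range(2)]
    dL_dw1 = [delta1[i] * x for i in range(2)]
    dL_db1 = list(delta1)
    return dL_dw1, dL_db1, dL_dw2, dL_db2


# Hypothetical values, for illustration only.
dw1, db1, dw2, db2 = gradients_hidden_layer(
    0.5, 1.0, w1=[0.3, -0.2], b1=[0.1, 0.0], w2=[0.2, 0.5], b2=0.4
)
print(dw1, db1, dw2, db2)
```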

Note

In the examples considered above, the data was one-dimensional, which makes the calculations easier. If the output has

The partial derivative of

with

Summary

To train a neural network, the weights and biases need to be optimized. This is done using backpropagation. In this post, we worked through backpropagation in detail for some simplified examples. Gradient descent is used to update the model parameters. The gradient itself is obtained by calculating the partial derivatives of the loss function using the chain rule.

Further Reading

More general formulations of the backpropagation algorithm can be found in the following links.

- Wikipedia

- Neural Networks and Deep Learning - How the backpropagation algorithm works, Michael Nielsen

- Brilliant - Backpropagation

- Backpropagation, Jorge Leonel

Appendix

Derivative of the Sigmoid Function

The Sigmoid Function is defined as

The derivative can be derived using the chain rule

In the last expression, we applied the outer derivative; calculating the inner derivative again requires the chain rule.

This can be reformulated to

That is, we can write the derivative as follows

Illustration of the Sigmoid function and its derivative.
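For reference, the derivation outlined in this appendix can be written out as follows. This is the standard derivation in assumed notation ($\sigma$ for the sigmoid, $x$ for its argument); the intermediate steps shown in the original post may be arranged differently.

$$
\sigma(x) = \frac{1}{1 + e^{-x}} = \left(1 + e^{-x}\right)^{-1}
$$

$$
\sigma'(x) = -\left(1 + e^{-x}\right)^{-2} \cdot \frac{d}{dx}\left(1 + e^{-x}\right)
= -\left(1 + e^{-x}\right)^{-2} \cdot \left(-e^{-x}\right)
= \frac{e^{-x}}{\left(1 + e^{-x}\right)^{2}}
$$

$$
\sigma'(x) = \frac{1}{1 + e^{-x}} \cdot \frac{\left(1 + e^{-x}\right) - 1}{1 + e^{-x}}
= \sigma(x)\left(1 - \sigma(x)\right)
$$

This form is convenient in the backward pass, because the derivative can be computed from the activation value that the forward pass has already produced.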

