Gradient Boost for Classification - Explained

- 12 minutes read - 2385 wordsIntroduction

Gradient Boosting is an ensemble machine learning model, that - as the name suggests - is based on boosting. An ensemble model based on boosting refers to a model that sequentially builds models, and the new model depends on the previous model. In Gradient Boosting these models are built such that they improve the error of the previous model. These individual models are so-called weak learners, which means they have low predictive skills. The ensemble of these weak learners builds the final model, which is a strong learner with a high predictive skill. In this post, we go through the algorithm of Gradient Boosting in general and then concretize the individual steps for a classification task using Decision Trees as weak learners and the log-loss function. There will be some overlapping with the article Gradient Boosting for Regression - Explained, where a detailed explanation of Gradient Boosting is given, which is then applied to a regression problem. However, in this article, do not go into the details of the general formulation, for that please refer to the previously mentioned post. If you are interested in a concrete example with detailed calculations, please refer to Gradient Boosting for Regression - Example for a regression problem and Gradient Boosting for Classification - Example for a classification problem.

The Algorithm

Gradient Boosting is a boosting algorithm that aims to build a series of weak learners, which together act as a strong learner. In Gradient Boosting the objective is to improve the error of the preceeding model by minimizing its loss function using Gradient Descent. That means the weak learners are built up on the error and not up on the targets themselves as in other boosting algorithms like AdaBoost.

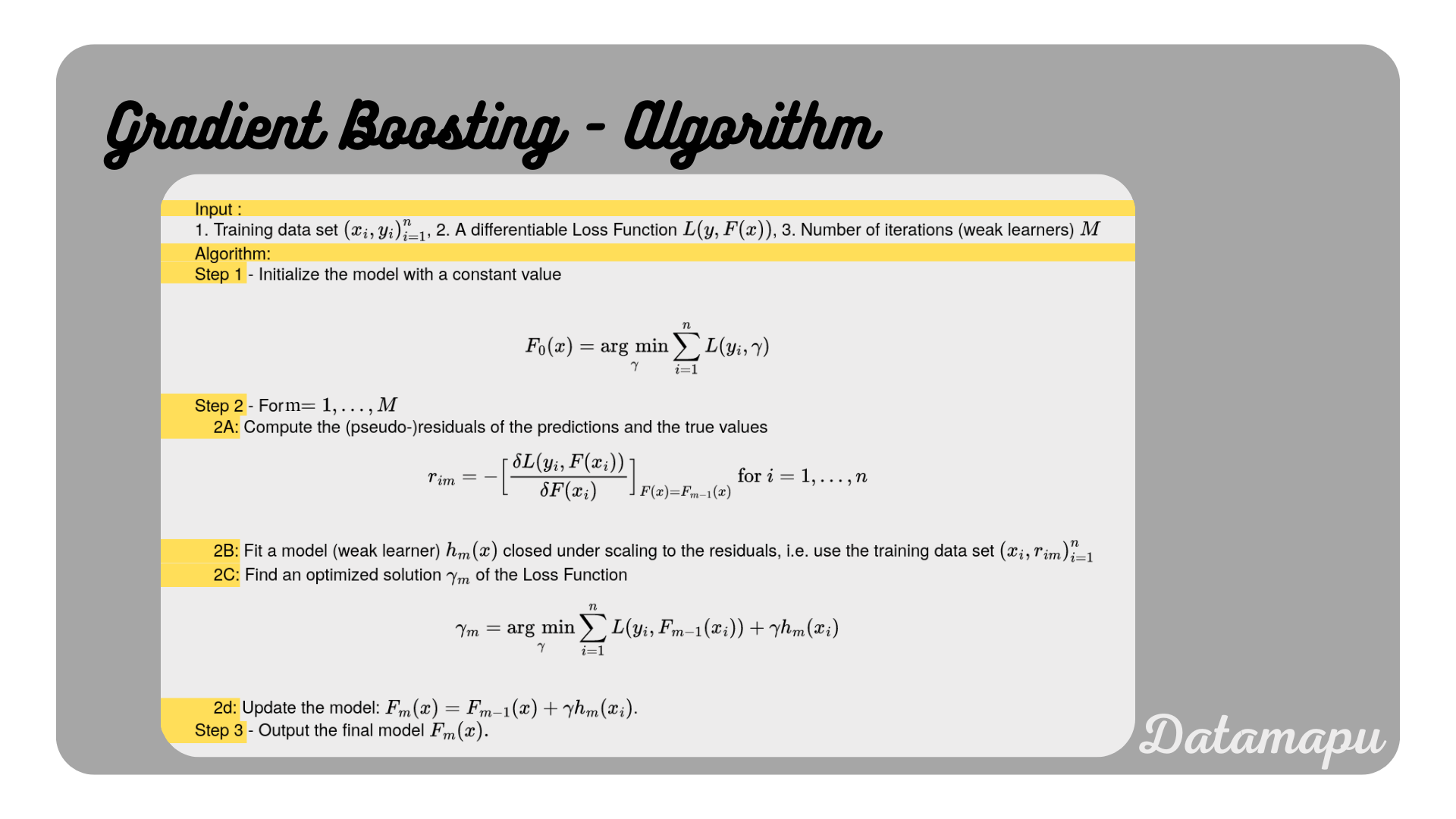

In the following, the algorithm is described for the general case. The notation is adapted from Wikipedia. The general case is explained in Gradient Boosting for Regression - Explained, in this post, we apply them to the special case of a binary classification using Decision Trees as weak learners and the log-loss as a loss function.

Gradient Boosting Algorithm. Adapted from Wikipedia.

Gradient Boosting Algorithm. Adapted from Wikipedia.

Let’s look at the individual steps, for the special case of a binary classification. As loss function, we use the log-loss, which is defined as

with

with

Let

Step 1 - Initialize the model with a constant value

The first initialization of the model is given by

Using the log-loss as formulated above, this turns into

The expression

To calculate this derivative, we need to use the chain rule and we need to remember the derivative of the logarithm, which is.

together with the chain rule

Note, that this is the derivative of the natural logarithm. If the logarithm is to a different base the derivative changes. Further, we need the derivative of the sigmoid function, which is

The derivation of this equation can be found here.

With this we get

This can be simplified to

Now we set the derivative to

This can be transformed to

The right-hand side of this equation corresponds to the probability of the positive class

Applying the logarithm this leads to

Using logarithmic transformations we get

The last transformation is explained in more detail in the appendix.

This expression refers to the log of the odds of the target variable, which is used to initialize the model for the specific case of a binary classification.

The next step is performed

Step 2 - for

2A. Compute (pseudo-)residuals of the predictions and the true values.

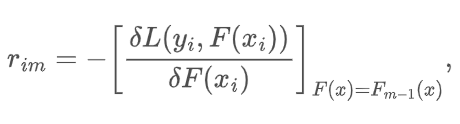

The (pseudo-) residuals are defined as

for

That is we need to calculate the derivative of the loss function with respect to the predictions.

With the loss function

To calculate this derivative we need to use the chain-rule

We calculate the first derivative

Using the derivative of the logarithm as above, this leads to

For the second part of the derivative we again need the derivative of the sigmoid function.

Now, we can calculate the derivative

That is the (pseudo-)residuals are given as

with

2B. Fit a model (weak learner) closed after scaling

In this step, we fit a weak model to the input features and the residuals

2C. Find an optimized solution

In this step the optimization problem

where

where

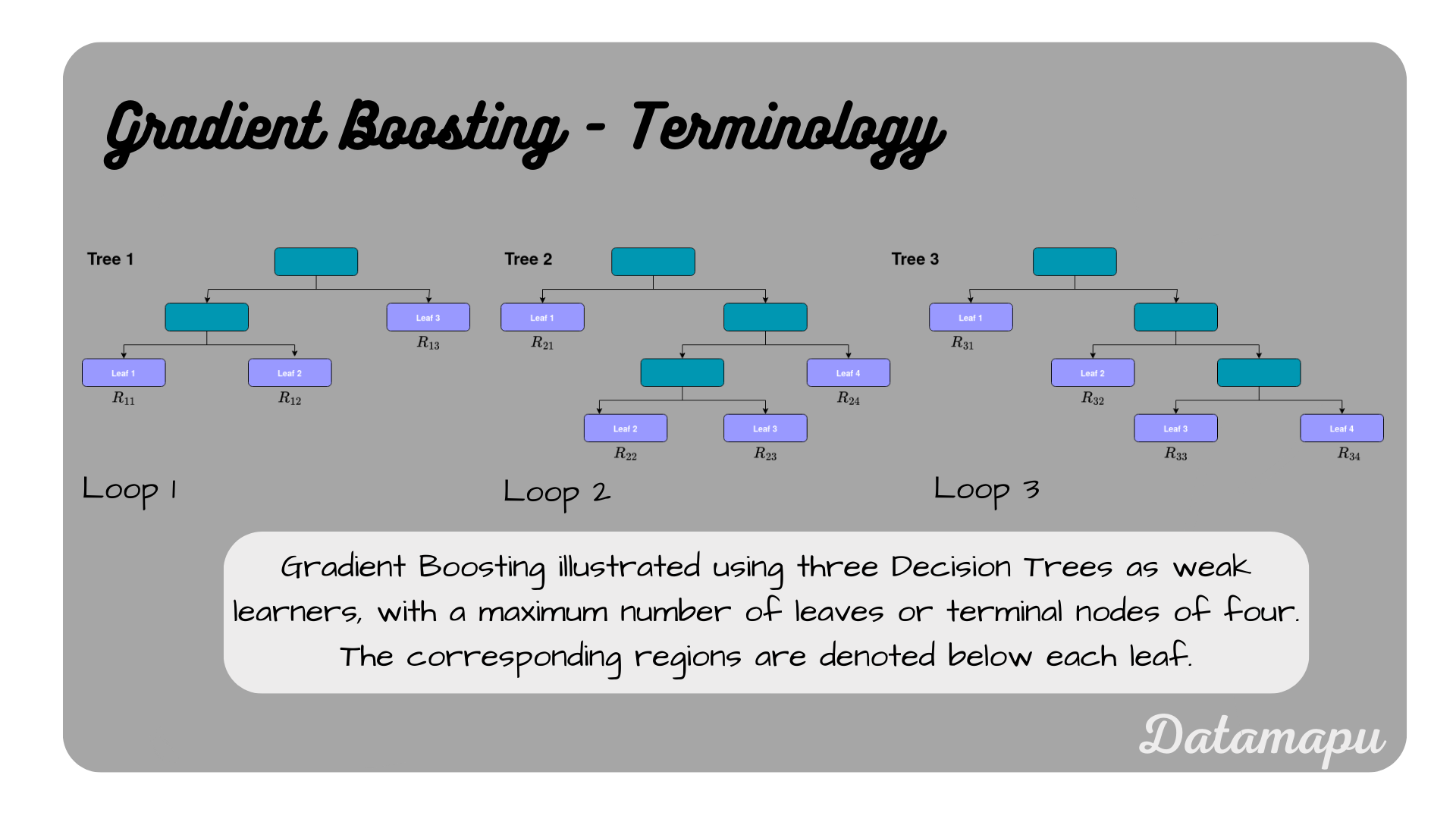

Terminology for Gradient Boosting with Decision Trees.

Terminology for Gradient Boosting with Decision Trees.

Now, let’s consider the predictions

with

Coming back to equation (2a), we aim to find

Note, that the summation is only over the elements of the region. Using the log-loss

with

To solve for this we would need to calculate the derivative with respect to

with

2D. Update the model.

In this step, we update our model

can be rewritten for the special case of using Decision Trees as weak learners to

In this equation

Step 3 - Output final model

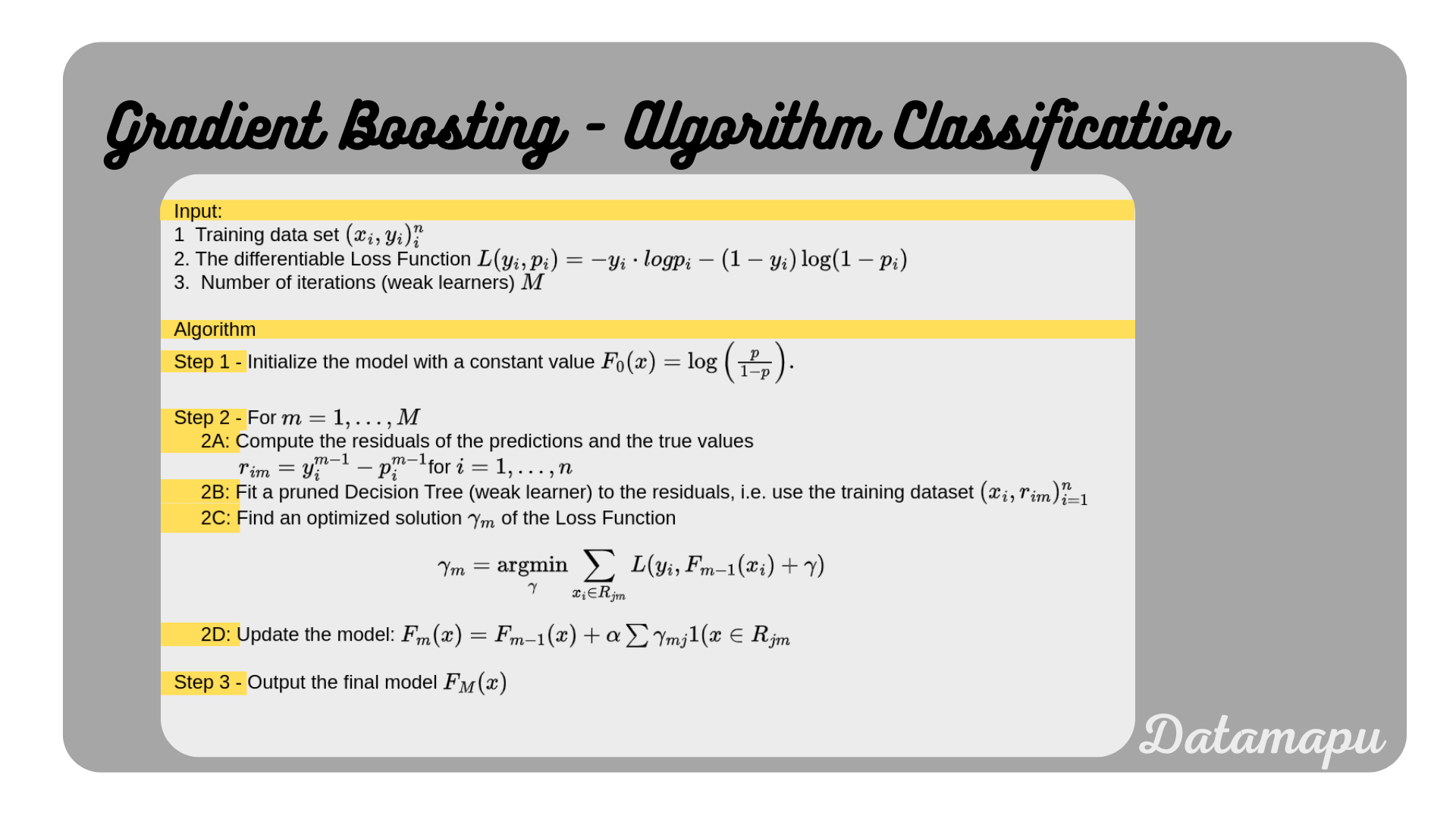

The individual steps of the algorithm for the special case of a binary classification using Decision Trees, and the above specified loss, are summarized below.

Gradient Boosting Algorithm simplified for a binary classification task.

Gradient Boosting Algorithm simplified for a binary classification task.

Gradient Boosting in Python

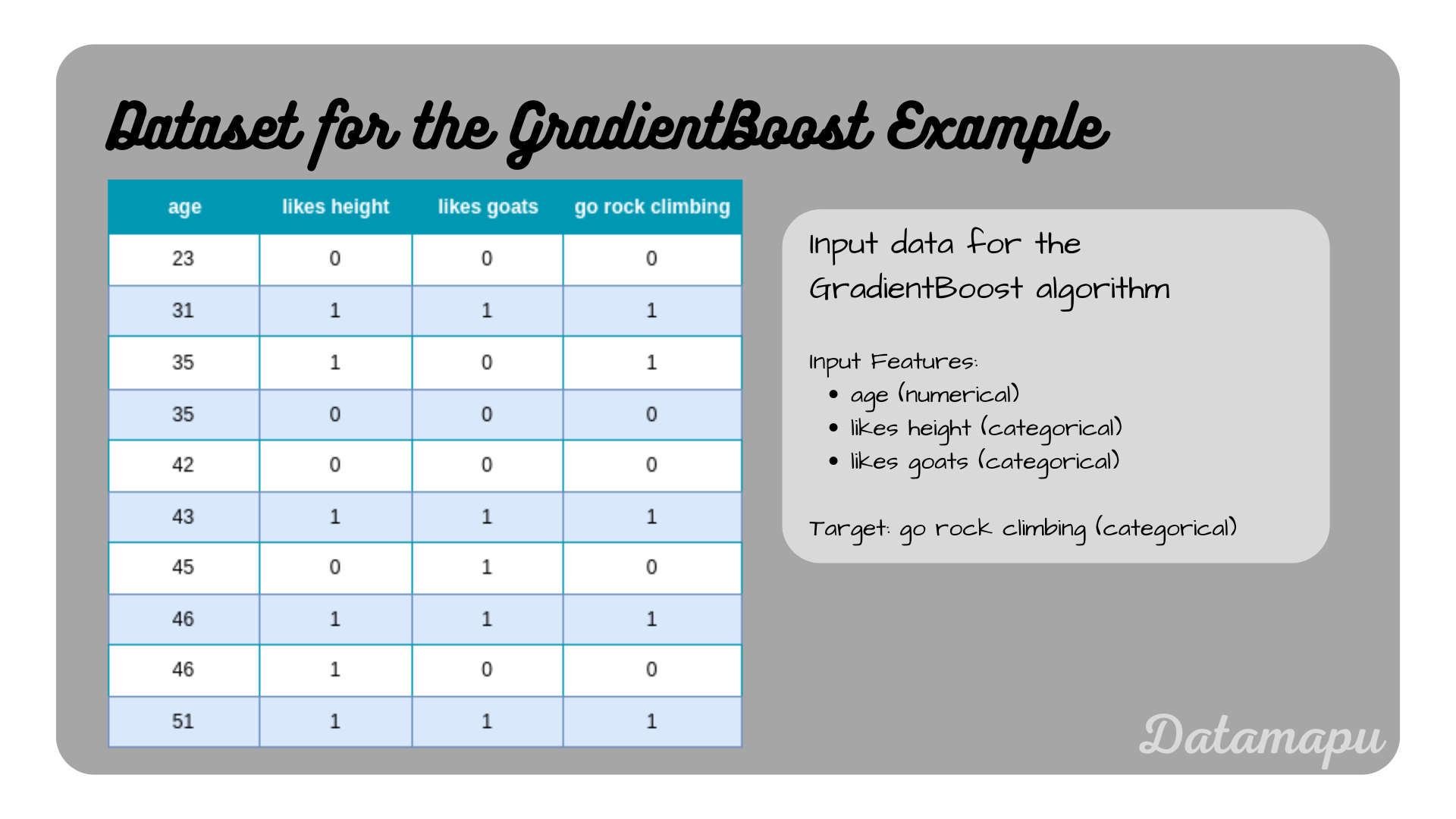

To perform gradient boosting for classification in Python, we can use sklearn. The GradientBoostingClassifer method can be used for binary and multiple classification tasks. The weak learners in sklearn are Decision Trees and cannot be changed. Let’s consider a simple dataset, consisting of 10 data samples. It is the same dataset we use in Decision Trees for Classification - Example.

Dataset considered in this example

Dataset considered in this example

In Python we can read this data into a pandas dataframe.

| |

Now we can fit a model to the data. The GradientBoostingClassifier method from sklearn offers a set of hyperparameters that can be changed to optimize the model. For this example, we set only two hyperparameters. The first one is the number of weak learners, that is the number of Decision Trees - n_estimators. For this simple dataset, we choose

| |

To make the predictions and calculate the score, which in this case is the mean accuracy of all samples, we can also use sklearn.

| |

For this simplified example, we receive the predictions

Summary

In this article, we discussed the Gradient Boosting algorithm for the special case of a binary classification. Gradient Boosting is a powerful ensemble learning method, which is in general based on Decision Trees. However, other weak learners are possible. In this case, the optimization of the loss function is approximated, in contrast to the Gradient Boosting for regression algorithm, where an analytical solution can be found relatively easily. In practice, sklearn can be used to develop and evaluate Gradient Boosting models in Python. For a more detailed example in Python on a larger dataset, please refer to this notebook on kaggle, which describes a regression problem. Adjusting the model and the evaluation metric the application to a classification problem is similar.

Appendix

Derive

Further Reading

- [1] Friedman, J.H. (1999), “Greedy Function Approximation: A Gradient Boosting Machine”

- [2] Wikipedia, “Gradient boosting”, date of citation: January 2024

If this blog is useful for you, please consider supporting.